- #1

jinksys

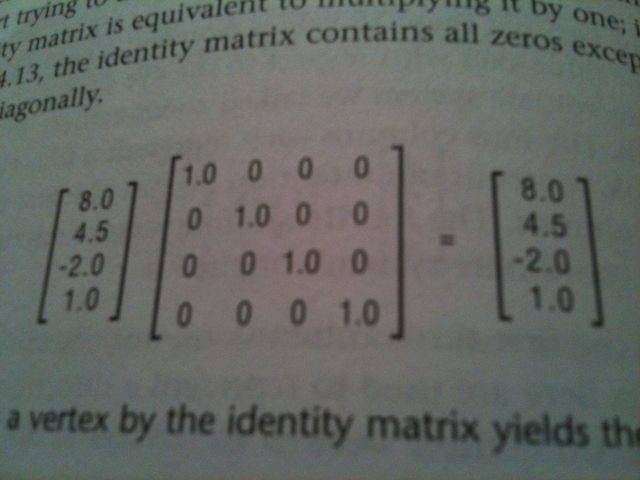

I am reading OpenGL SuperBible 5th Edition and noticed something off.

I just took linear algebra and was always told to make sure the inner dimensions match when multiplying two matrices: the number of columns of the left operand has to equal the number of rows of the right operand. So a column vector should go on the right side of the matrix, correct?

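To spell out the dimension check I have in mind, here is a small sketch. The symbols are my own notation, not the book's: $M$ is assumed to be a 4×4 transform and $\mathbf{v}$ a 4-component column vector.

$$
\underbrace{M}_{4\times 4}\;\underbrace{\mathbf{v}}_{4\times 1} \;=\; \underbrace{\mathbf{v}'}_{4\times 1}
$$

The only way I can see the vector ending up on the left is if it is treated as a row vector, in which case the matrix has to be transposed to give the same result:

$$
\underbrace{\mathbf{v}^{\mathsf{T}}}_{1\times 4}\;\underbrace{M^{\mathsf{T}}}_{4\times 4} \;=\; \left(M\mathbf{v}\right)^{\mathsf{T}}
$$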