I've been aware of this work for a couple of months, and it has at last got through the review processes and has been accepted for publication.

I'm pleased to see this published, as I think it is a useful constraint on some excessively scary estimates that are not particularly well founded -- in the author's view.

The abstract

Here is the authors' own abstract:

The notion of a climate sensitivity of as high as six degrees is a catastrophic prospect, and it is sometimes portrayed as a realistic low likelihood worst case scenario. This paper is more encouraging, suggesting that non-negligible likelihoods for such high sensitivity are not well founded.

Background: what is sensitivity

Climate sensitivity is a measure of how much Earth's temperature changes in response to an imbalance in energy, called a forcing. Forcings can arise for all kinds of reasons; changes to the Earth's albedo, to the composition of the atmosphere, or to the solar input.

If everything about the makeup of the Earth remained unchanged, other than temperature, then it's comparatively straightforward to estimate how much temperatures increase for a given forcing. This turns out to be of the order of 0.3 degrees per W/m2 of forcing, and is sometimes called the "Planck response". That is, if you can deliver about 1 Watt of extra energy per square meter over all the Earth, and prevent anything other that temperature changing in response, then temperatures would rise about 0.3 degrees.

However, the Earth does change in all kinds of ways. As temperatures alter, so does humidity, cloud, surface cover, vegetation, and lots more besides. These can all have further knock-on effects, to give a boost or a damping to the temperature changes. The amount of temperature change in reality, taking all these factors into account, is called sensitivity.

Sensitivity is one of the great unknowns of climate science. Most methods of estimating sensitivity obtain values in the range of 0.5 to 1.2 degrees per unit forcing. Frequently, forcing is given in degrees per doubling of CO2, since that is a simple and quite well understood benchmark forcing; although note that sensitivity is a general feature of climate, which bears upon any forcing whether CO2 is involved or not.

Using 2xCO2 as the unit for a forcing, climate sensitivity estimates tend to be around 2 to 4.5 degrees per 2xCO2, with the possibility of values above or below this range. A wider range of 1.5 to 6 degrees is sometimes quoted as conceivable.

The long tail of uncertainty?

Nearly all the evidence indicates that the net sensitivity is greater than the Planck response. That is, the complexities of the climate system tend to amplify the response of the planet somewhat. The specific evidence for various sensitivity estimates is another topic I plan to take up sometime.

The processes that give this amplification or damping are called climate feedbacks, because they arise as a result of a temperature change, and then give a further contribution to the energy balance and so feedback into temperature again.

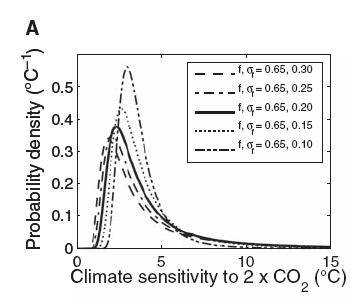

It is a feature of this amplification effect that you get distributions with a long tail. That is, if you give a simple uniform range of feedback factors, as a map from a temperature change to the feedback driven energy imbalance you get a probability distribution on the total sensitivity which has a certain peak value, and then a long tail off to the right, towards higher sensitivity, but a fairly sudden cutoff to the left, for low sensitivity.

A widely cited paper on this feature of sensitivity estimation is

Here's a picture of a likelihood distribution from that paper, showing the long tail:

Based on these kinds of analysis, it is widely reported that there's a likelihood, or probability, of about 5%, that climate sensitivity could be as high as 6 degrees per 2xCO2. That's widely seen as a catastrophic outlook; a worst case scenario that should be considered as a realistic risk.

What is probability, in this context?

There's a real philosophical problem here in identifying what probability even means. It's hard to make sense of "probability" here in terms of frequencies of outcomes for a repeated event.

The paper specifies at the outset that they are using "the standard Bayesian paradigm of probability as the subjective degree of belief of the researcher in a proposition". To give a likelihood in this way, it is necessary to take into account a "prior belief" for the probability distribution, which is then modified in the light of empirical evidence. This is necessarily subjective, in the selection of the "prior" to be used in an analysis.

The paper is focused upon the issue of choosing priors, and shows that non-negligible probabilities for extremely high sensitivities follow from use of a "uniform" prior that is taken as a way of representing "ignorance". The paper argues that this is not a sensible choice and are in fact quite extreme.

Conclusions

The IPCC 4AR gives bounds on climate sensitivity as "likely" to be in the range 2 to 4.5 degrees per 2xCO2. The language is intended to reflect 67% confidence. These estimates occur along with the comment that "values substantially higher than 4.5°C still cannot be excluded".

In their conclusion, Annan and Hargreaves suggest: "Thus it might be reasonable for the IPCC to upgrade their confidence in S lying below 4.5oC to the “extremely likely” level, indicating 95% probability of a lower value."

The paper also considers the economic implications, for planners taking into account the cost and likelihood of these worst case scenarios.

All in all, in my opinion this is a welcome general contribution to the wide open question of climate sensitivity. The most likely sensitivity values remain around about 3 degrees or a bit less taking into account some recent work on the Earth Radiation Budget Experiment; with a substantial spread of uncertainty. But this paper argues it is reasonable to consider the very large sensitivity values sometimes proposed to be extremely unlikely.

Cheers -- sylas

- Annan, J.D., and Hargreaves, J.C. (2009) On the generation and interpretation of probabilistic estimates of climate sensitivity, to appear in Climatic Change. (http://www.jamstec.go.jp/frcgc/research/d5/jdannan/probrevised.pdf ).

I'm pleased to see this published, as I think it is a useful constraint on some excessively scary estimates that are not particularly well founded -- in the authors' view.

The abstract

Here is the authors' own abstract:

The equilibrium climate response to anthropogenic forcing has long been one of the dominant, and therefore most intensively studied uncertainties, in predicting future climate change. As a result, many probabilistic estimates of the climate sensitivity (S) have been presented. In recent years, most of them have assigned significant probability to extremely high sensitivity, such as P(S > 6°C) > 5%.

In this paper, we investigate some of the assumptions underlying these estimates. We show that the popular choice of a uniform prior has unacceptable properties and cannot be reasonably considered to generate meaningful and usable results. When instead reasonable assumptions are made, much greater confidence in a moderate value for S is easily justified, with an upper 95% probability limit for S easily shown to lie close to 4°C, and certainly well below 6°C. These results also impact strongly on projected economic losses due to climate change.

The notion of a climate sensitivity as high as six degrees is a catastrophic prospect, and it is sometimes portrayed as a realistic, low-likelihood worst-case scenario. This paper is more encouraging, suggesting that non-negligible likelihoods for such high sensitivity are not well founded.

Background: what is sensitivity

Climate sensitivity is a measure of how much Earth's temperature changes in response to an imbalance in energy, called a forcing. Forcings can arise for all kinds of reasons: changes to the Earth's albedo, to the composition of the atmosphere, or to the solar input.

If everything about the makeup of the Earth remained unchanged, other than temperature, then it's comparatively straightforward to estimate how much temperatures increase for a given forcing. This turns out to be of the order of 0.3 degrees per W/m² of forcing, and is sometimes called the "Planck response". That is, if you could deliver about 1 watt of extra energy per square meter over all the Earth, and prevent anything other than temperature changing in response, then temperatures would rise about 0.3 degrees.
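
For anyone who wants to see where a number like 0.3 comes from, here is a rough sketch. It treats the Earth as a black body radiating to space at an effective emission temperature of about 255 K; that temperature and the function name are my assumptions for illustration, not something taken from the paper.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
T_EMISSION = 255.0      # assumed effective emission temperature of Earth, K


def planck_response(t_emission=T_EMISSION):
    """Warming in K per W/m^2 of forcing when only temperature may change."""
    # Outgoing flux is F = sigma * T^4, so dF/dT = 4 * sigma * T^3.
    # The temperature response per unit forcing is the inverse, dT/dF.
    return 1.0 / (4.0 * SIGMA * t_emission ** 3)


print(f"Planck response: {planck_response():.2f} K per W/m^2")
```

This gives a little under 0.3 K per W/m², which is in line with the "of the order of 0.3" figure above. More careful treatments arrive at slightly different values.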

However, the Earth does change in all kinds of ways. As temperatures alter, so do humidity, cloud, surface cover, vegetation, and lots more besides. These can all have further knock-on effects, to give a boost or a damping to the temperature changes. The actual temperature change per unit of forcing, taking all these factors into account, is called sensitivity.

Sensitivity is one of the great unknowns of climate science. Most methods of estimating sensitivity obtain values in the range of 0.5 to 1.2 degrees per W/m² of forcing. Frequently, sensitivity is instead given in degrees per doubling of CO2, since that is a simple and quite well understood benchmark forcing; note, though, that sensitivity is a general feature of climate, which bears upon any forcing whether CO2 is involved or not.

Using 2xCO2 as the unit for a forcing, climate sensitivity estimates tend to be around 2 to 4.5 degrees per 2xCO2, with the possibility of values above or below this range. A wider range of 1.5 to 6 degrees is sometimes quoted as conceivable.
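
The two ways of quoting sensitivity are related by the forcing of a CO2 doubling. As a quick sketch, assuming the commonly used figure of about 3.7 W/m² per doubling (my assumption here, not a number from the paper), the conversion is a single multiplication:

```python
F2X = 3.7  # assumed forcing from a doubling of CO2, W/m^2


def per_doubling(k_per_wm2, f2x=F2X):
    """Convert a sensitivity in K per W/m^2 into K per doubling of CO2."""
    return k_per_wm2 * f2x


# Planck response, then the low and high ends of the range quoted above.
for value in (0.3, 0.5, 1.2):
    print(f"{value} K per W/m^2 -> {per_doubling(value):.2f} K per 2xCO2")
```

With that assumption, 0.5 to 1.2 degrees per W/m² becomes roughly 1.9 to 4.4 degrees per 2xCO2, which matches the 2 to 4.5 range, and the Planck response alone comes to a bit over 1 degree per 2xCO2.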

The long tail of uncertainty?

Nearly all the evidence indicates that the net sensitivity is greater than the Planck response. That is, the complexities of the climate system tend to amplify the response of the planet somewhat. The specific evidence for various sensitivity estimates is another topic I plan to take up sometime.

The processes that give this amplification or damping are called climate feedbacks, because they arise as a result of a temperature change, and then give a further contribution to the energy balance and so feed back into temperature again.

It is a feature of this amplification effect that you get distributions with a long tail. That is, if you assume a simple uniform range of feedback factors (each factor being a map from a temperature change to the feedback-driven energy imbalance), you get a probability distribution for the total sensitivity which has a certain peak value, a long tail off to the right, towards higher sensitivity, and a fairly sudden cutoff to the left, for low sensitivity.
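
Here is a minimal sketch of why the tail appears. It assumes the usual simple feedback relation S = S0 / (1 - f), where S0 is the no-feedback (Planck) sensitivity and f is the net feedback factor. The 3.7 W/m² per doubling and the uniform range of 0.4 to 0.8 for f are hypothetical values picked for illustration, not numbers from either paper.

```python
PLANCK_RESPONSE = 0.3       # K per W/m^2, the figure quoted earlier
F2X = 3.7                   # assumed forcing of a CO2 doubling, W/m^2
S0 = PLANCK_RESPONSE * F2X  # no-feedback sensitivity, K per 2xCO2


def sensitivity(f):
    """Total sensitivity for a net feedback factor f (must be below 1)."""
    if f >= 1.0:
        raise ValueError("f must be below 1 for a stable climate")
    return S0 / (1.0 - f)


def sensitivity_quantile(q, f_low=0.4, f_high=0.8):
    """q-th quantile of S when f is uniform on [f_low, f_high].

    S increases monotonically with f, so quantiles of f map straight
    onto quantiles of S.
    """
    return sensitivity(f_low + q * (f_high - f_low))


low, median, high = (sensitivity_quantile(q) for q in (0.05, 0.5, 0.95))
print(f"5%: {low:.2f}  median: {median:.2f}  95%: {high:.2f}")
print(f"lower spread: {median - low:.2f}  upper spread: {high - median:.2f}")
```

Even though f is spread perfectly evenly, the upper spread in S comes out more than twice the lower spread, because 1 / (1 - f) blows up as f approaches 1. That asymmetry is the long tail.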

A widely cited paper on this feature of sensitivity estimation is:

- Roe, G.H., and Baker, M.B. (2007) Why Is Climate Sensitivity So Unpredictable?, Science, Vol 318, No 5850, pp 629-632, 26 October 2007, DOI: 10.1126/science.1144735

Here's a picture of a likelihood distribution from that paper, showing the long tail:

Based on this kind of analysis, it is widely reported that there's a likelihood, or probability, of about 5% that climate sensitivity could be 6 degrees per 2xCO2 or higher. That's widely seen as a catastrophic outlook: a worst-case scenario that should be considered a realistic risk.

What is probability, in this context?

There's a real philosophical problem here in identifying what probability even means. It's hard to make sense of "probability" here in terms of frequencies of outcomes for a repeated event.

The authors specify at the outset that they are using "the standard Bayesian paradigm of probability as the subjective degree of belief of the researcher in a proposition". To give a probability in this way, it is necessary to start from a "prior belief", expressed as a prior probability distribution, which is then modified in the light of empirical evidence. The selection of the "prior" to be used in an analysis is necessarily subjective.

The paper focuses on the issue of choosing priors, and shows that non-negligible probabilities for extremely high sensitivities follow from the use of a "uniform" prior that is taken as a way of representing "ignorance". The paper argues that such priors are not a sensible choice and are in fact quite extreme.
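
To get a feel for how much the prior matters, here is a toy calculation. Everything in it is hypothetical and chosen by me for illustration: the likelihood (a Gaussian constraint on the feedback parameter 3.7 / S with made-up centre and width), the uniform upper bounds of 10 and 20 degrees, and the Cauchy-shaped alternative prior with its location and scale. It is not the analysis in the paper, only a sketch of the effect the paper discusses.

```python
import math

F2X = 3.7               # assumed forcing of a CO2 doubling, W/m^2
LAMBDA_OBS = F2X / 3.0  # hypothetical observed feedback parameter, W/m^2/K
LAMBDA_SD = 0.5         # hypothetical observational uncertainty, W/m^2/K


def likelihood(s):
    """Gaussian likelihood in the feedback parameter F2X / s."""
    z = (F2X / s - LAMBDA_OBS) / LAMBDA_SD
    return math.exp(-0.5 * z * z)


def uniform_prior(upper):
    """Unnormalised uniform prior on (0, upper]."""
    return lambda s: 1.0 if 0.0 < s <= upper else 0.0


def cauchy_prior(location=2.5, scale=3.0):
    """Unnormalised Cauchy-shaped prior (illustrative parameters)."""
    return lambda s: 1.0 / (1.0 + ((s - location) / scale) ** 2)


def prob_above(prior, threshold=6.0, s_max=100.0, step=0.01):
    """Posterior P(S > threshold), by midpoint sums on a grid up to s_max."""
    total = 0.0
    tail = 0.0
    for i in range(int(round(s_max / step))):
        s = (i + 0.5) * step
        weight = prior(s) * likelihood(s)  # Bayes: posterior ~ prior * likelihood
        total += weight
        if s > threshold:
            tail += weight
    return tail / total


for name, prior in [("uniform on 0-10", uniform_prior(10.0)),
                    ("uniform on 0-20", uniform_prior(20.0)),
                    ("Cauchy-shaped", cauchy_prior())]:
    print(f"{name:16s} P(S > 6) = {prob_above(prior):.2f}")
```

With the same toy evidence, the probability of S above 6 degrees grows as the upper bound of the uniform prior is pushed out, and drops when the prior itself tails off at high values. The grid stops at 100 degrees, so the far tail of the Cauchy-shaped case is slightly truncated.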

Conclusions

The IPCC AR4 gives climate sensitivity as "likely" to be in the range 2 to 4.5 degrees per 2xCO2. In IPCC usage, "likely" is intended to reflect a probability greater than 66%. These estimates occur along with the comment that "values substantially higher than 4.5°C still cannot be excluded".

In their conclusion, Annan and Hargreaves suggest: "Thus it might be reasonable for the IPCC to upgrade their confidence in S lying below 4.5°C to the “extremely likely” level, indicating 95% probability of a lower value."

The paper also considers the economic implications, for planners taking into account the cost and likelihood of these worst case scenarios.

All in all, in my opinion this is a welcome general contribution to the wide open question of climate sensitivity. The most likely sensitivity values remain around 3 degrees, or a bit less taking into account some recent work on the Earth Radiation Budget Experiment, with a substantial spread of uncertainty. But this paper argues it is reasonable to consider the very large sensitivity values sometimes proposed to be extremely unlikely.

Cheers -- sylas
