- #1

sponsoredwalk


I think I finally understand the wedge product, & I think it explains things in 2-forms that have been puzzling me for a long time.

This post lays out the way I see the wedge product, & interspersed with my thoughts are just 3 questions (in bold!) that I'm hoping for some clarification on. The rest of the writing is just meant to be read. Hopefully it's all right, but if it's wrong, please do correct me.

If

v = v₁e₁ + v₂e₂

w = w₁e₁ + w₂e₂

where e₁ = (1,0) & e₂ = (0,1) then

v ⋀ w = (v₁e₁ + v₂e₂) ⋀ (w₁e₁ + w₂e₂)

_____ = v₁w₁e₁⋀e₁ + v₁w₂e₁⋀e₂ + v₂w₁e₂⋀e₁ + v₂w₂e₂⋀e₂

_____ = v₁w₂e₁⋀e₂ + v₂w₁e₂⋀e₁

v ⋀ w= (v₁w₂ - v₂w₁)e₁⋀e₂

This is interpreted as the (signed) area of the parallelogram spanned by v & w.
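As a quick numerical check of the calculation above, here is a minimal Python sketch. The function name `wedge2d` and the sample vectors are just my own choices for illustration.

```python
def wedge2d(v, w):
    """Coefficient of e1^e2 in v ^ w for two vectors in the plane."""
    return v[0] * w[1] - v[1] * w[0]

v = (3.0, 0.0)
w = (1.0, 2.0)

area = wedge2d(v, w)        # parallelogram with base 3 and height 2
swapped = wedge2d(w, v)     # reversing the order flips the sign
degenerate = wedge2d(v, v)  # a vector wedged with itself encloses no area

print(area, swapped, degenerate)
```

The sign flip under swapping and the zero for a repeated vector are the numerical counterparts of e₂⋀e₁ = - e₁⋀e₂ and e₁⋀e₁ = 0.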

My first question is based on the fact that this is a two-dimensional calculation that comes out with exactly the same coefficient as the cross product of

v' = v₁e₁ + v₂e₂ + 0e₃

w' = w₁e₁ + w₂e₂ + 0e₃

Also, the general x ⋀ y = (x₁e₁ + x₂e₂ + x₃e₃) ⋀ (y₁e₁ + y₂e₂ + y₃e₃)

comes out with the same components as the cross product.
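To make that comparison concrete, here is a small Python sketch that expands x ⋀ y term by term using only e_i⋀e_i = 0 and e_j⋀e_i = - e_i⋀e_j, then lines the result up against the usual cross product. The dictionary layout (keys `(i, j)` with `i < j`, 0-based) and the function names are assumptions made for this illustration.

```python
def wedge(x, y):
    """Expand x ^ y bilinearly; key (i, j) with i < j stands for e_i ^ e_j (0-based)."""
    out = {}
    n = len(x)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # e_i ^ e_i = 0
            # e_j ^ e_i = -e_i ^ e_j, so store everything on the i < j basis element
            key, sign = ((i, j), 1) if i < j else ((j, i), -1)
            out[key] = out.get(key, 0) + sign * x[i] * y[j]
    return out

def cross(x, y):
    """Standard cross product in three dimensions."""
    return (
        x[1] * y[2] - x[2] * y[1],
        x[2] * y[0] - x[0] * y[2],
        x[0] * y[1] - x[1] * y[0],
    )

x = (1, 2, 3)
y = (4, 5, 6)
b = wedge(x, y)
c = cross(x, y)

# Match e2^e3 -> e1, e3^e1 -> e2, e1^e2 -> e3 (note e3^e1 = -(e1^e3)).
matched = (b[(1, 2)], -b[(0, 2)], b[(0, 1)])
print(b, c, matched)
```

The numbers agree once each e_i⋀e_j is paired with the remaining basis vector. That pairing is something put in by hand here; the expansion itself never mentions a third vector.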

In all cases the end result is a vector orthogonal to v & w, or to v' & w', or to x & y. Is this true for every wedge product calculation in every dimension? The wedge product of two vectors in ℝ³ gives the area of the parallelogram they enclose, & it can be interpreted as a scaled-up multiple of a basis vector orthogonal to the vectors. So (e₁⋀e₂) is a unit vector orthogonal to v & w, & (v₁w₂ - v₂w₁) is a scalar that also gives the area enclosed by v & w.

Judging by this, you'd take the wedge product of 3 vectors in ℝ⁴ & get the volume they enclose, and 4 vectors in ℝ⁵ would give a hypervolume or whatever. If we ended up with β(e₁⋀e₂⋀e₃), this would be in ℝ⁴, where β is the scalar representing the volume & β(e₁⋀e₂⋀e₃) is pointing off into the fourth dimension, whatever that looks like. If all of this holds, I can justify why e₁⋀e₂ = - e₂⋀e₁ both mentally & algebraically by taking dot products & finding those orthogonal vectors, so I'd like to hear if this makes sense in the grand scheme of things!
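One way to experiment with the higher-dimensional case is the sketch below. It assumes the standard fact that the coefficient of v₁⋀...⋀v_k on e_{i₁}⋀...⋀e_{i_k} is the k×k determinant built from those coordinates. The helper names `det` and `wedge_components` are hypothetical, picked for this example.

```python
from itertools import combinations
from math import sqrt

def det(m):
    """Determinant by Laplace expansion along the first row (fine for small matrices)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for col, entry in enumerate(m[0]):
        minor = [row[:col] + row[col + 1:] for row in m[1:]]
        total += (-1) ** col * entry * det(minor)
    return total

def wedge_components(vectors):
    """Coefficients of v1 ^ ... ^ vk, one per increasing index tuple (0-based)."""
    k, n = len(vectors), len(vectors[0])
    return {
        idx: det([[v[i] for i in idx] for v in vectors])
        for idx in combinations(range(n), k)
    }

# Three vectors in R^4 spanning a 1 x 2 x 3 box inside the e1, e2, e3 directions.
a = (1, 0, 0, 0)
b = (0, 2, 0, 0)
c = (0, 0, 3, 0)

three = wedge_components([a, b, c])
volume = sqrt(sum(coef ** 2 for coef in three.values()))
two = wedge_components([a, b])

print(three, volume, len(two))
```

Here the three-vector wedge in ℝ⁴ has 4 coefficients, while the two-vector wedge in ℝ⁴ has 6. In general there are C(n, k) coefficients, which equals n for k = 1 and for k = n - 1, so that is worth keeping in mind when reading the result as an orthogonal vector.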

I really despise taking things like e₁⋀e₂ = - e₂⋀e₁ as definitions unless I can justify them. I can algebraically justify why e₁⋀e₂ = - e₂⋀e₁ by thinking in terms of the cross product, which itself is nothing more than clever use of the inner product of two orthogonal vectors. Therefore I think that e₁⋀e₂ literally represents the unit vector that is orthogonal to the vectors v & w involved in my calculation. So if there are n - 1 vectors in ℝⁿ, then e₁⋀e₂⋀...⋀eₙ₋₁ is the unit vector in some new vector βe₁⋀e₂⋀...⋀eₙ₋₁ that is orthogonal to all (n - 1) vectors.

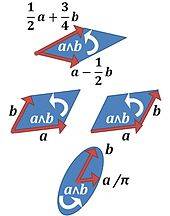

I read a comment that the wedge product is in an "exterior square" so I

guess this generalizes to products of all arity (exterior volumes et al) &

from browsing I've seen that a "bivector" is a way to interpret this, like

this:

it's a 2 dimensional vector here for example. My second question is -

if I were to just think in terms of orthogonality as I have explained

in this thread is there any deficiency? As far as I can tell this 2-D

vector in the picture is just a visual representation of the area & as it is

explained via a scaled up orthogonal vector I think there is virtually no difference.

A lot of the wiki topics on "bivectors", forms, etc. were previously unreadable to me & are only now slowly beginning to make sense (I hope!).

And finally, I'm hoping to use the above (assuming it's right) to try to understand terms like

Adx + Bdy + Cdz

&

Adydz + Bdzdx + Cdxdy

in this context. I've seen calculations that specifically require dxdx = dydy = dzdz = 0, & you're supposed to just remember this magic, but I don't buy it as just magic; I think there are very good reasons why this is the case. My third question arises from the fact that I think these algebraic rules, like dxdy = -dydx & dxdx = 0 etc., are just encoding rules that logically follow from everything I've explained above & would probably be more clearly delineated through vectors: are they encoding vector calculations dealing with orthogonality?
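For what it's worth, the two rules dxdx = 0 & dxdy = -dydx are enough to reduce any product of dx, dy, dz mechanically. Below is a minimal Python sketch of that bookkeeping. The function `normalize` and its `(sign, factors)` return convention are made up for this illustration & say nothing about what the symbols mean geometrically.

```python
def normalize(factors, order=("dx", "dy", "dz")):
    """Reduce a product such as ('dy', 'dx') to (sign, sorted factors).

    A sign of 0 means the whole term vanishes.
    """
    if len(set(factors)) < len(factors):
        return 0, ()  # repeated factor, e.g. dx dx = 0
    idx = [order.index(f) for f in factors]
    sign = 1
    # Bubble sort: every swap of adjacent factors flips the sign (dy dx = -dx dy).
    for end in range(len(idx) - 1, 0, -1):
        for i in range(end):
            if idx[i] > idx[i + 1]:
                idx[i], idx[i + 1] = idx[i + 1], idx[i]
                sign = -sign
    return sign, tuple(order[i] for i in idx)

print(normalize(("dx", "dx")))
print(normalize(("dy", "dx")))
print(normalize(("dz", "dx")))
print(normalize(("dy", "dz", "dx")))
```

Note that dzdx reduces to -dxdz, which is why the middle term of Adydz + Bdzdx + Cdxdy is usually written with dzdx rather than dxdz: it keeps all three signs positive in the cyclic order.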

Perhaps someone more knowledgeable could expand upon this, I'd greatly appreciate it.
