Discussion Overview

The discussion concerns statistical inference, specifically likelihood functions and maximum likelihood estimators. The original poster, TM, is seeking help with a presentation on these concepts, in the context of a specific statistical data analysis problem.

Discussion Character

- Homework-related

- Technical explanation

- Debate/contested

Main Points Raised

- TM expresses uncertainty about the topic due to missed lectures and requests help with the presentation.

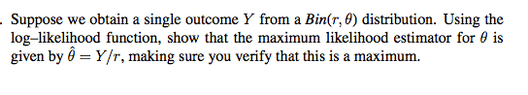

- One participant provides a likelihood function for a random variable and derives the maximum likelihood estimator, suggesting that the estimator can be expressed as the sample mean.

- Another participant presents a different likelihood function and its log-likelihood, leading to a similar conclusion about the maximum likelihood estimator.

- A later reply critiques the previous contributions, stating that they address the wrong problem despite arriving at a similar solution, emphasizing the importance of correctly interpreting the data as the number of successes from trials.
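
To illustrate why two different setups can lead to the same estimator, here is a hedged sketch. It assumes the data are binary trials with unknown success probability $p$. The thread's actual problem statement is not reproduced in this summary, so the model and the symbols $n$, $x_i$, $s$ and $x$ are assumptions, not the participants' own notation.

Treating the data as $n$ individual Bernoulli outcomes $x_1, \dots, x_n$ with $s = \sum_{i=1}^{n} x_i$:

$$
L(p) = \prod_{i=1}^{n} p^{x_i}(1-p)^{1-x_i} = p^{s}(1-p)^{n-s},
\qquad
\log L(p) = s \log p + (n-s)\log(1-p)
$$

$$
\frac{d}{dp}\log L(p) = \frac{s}{p} - \frac{n-s}{1-p} = 0
\quad\Longrightarrow\quad
\hat{p} = \frac{s}{n} = \bar{x}
$$

Treating the data instead as a single count $x$ of successes in $n$ trials (a binomial observation):

$$
L(p) = \binom{n}{x} p^{x}(1-p)^{n-x},
\qquad
\log L(p) = \log\binom{n}{x} + x \log p + (n-x)\log(1-p)
$$

$$
\frac{d}{dp}\log L(p) = \frac{x}{p} - \frac{n-x}{1-p} = 0
\quad\Longrightarrow\quad
\hat{p} = \frac{x}{n}
$$

The binomial coefficient does not depend on $p$, so it drops out when differentiating. Under these assumptions the two likelihoods differ only by that constant factor, which is why both give the same estimator even though they model the data differently.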

Areas of Agreement / Disagreement

Participants do not reach a consensus on how the problem should be set up. The estimators they derive are similar, but the later reply disputes the likelihood functions used in the earlier contributions, arguing that they model the wrong problem.

Contextual Notes

The specific problem TM is facing is not clearly stated, which may limit how well the proposed solutions apply. The assumptions underlying the likelihood functions and the definitions of the variables involved are also not fully explored.