monsmatglad

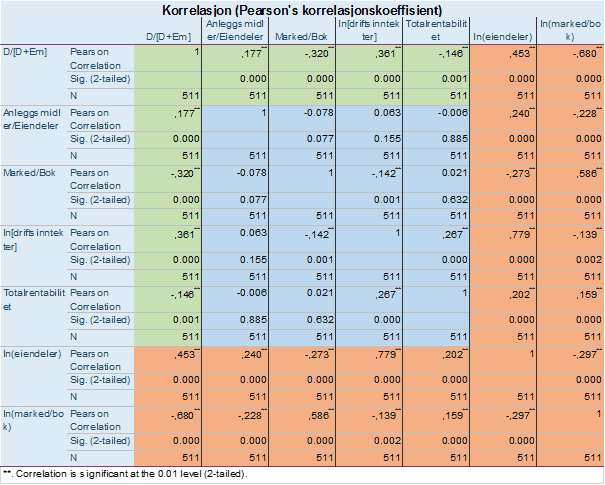

A couple of questions today. First, I am running a panel data regression. Before running it, I checked the correlations between the independent variables and the dependent variable. These are the results:

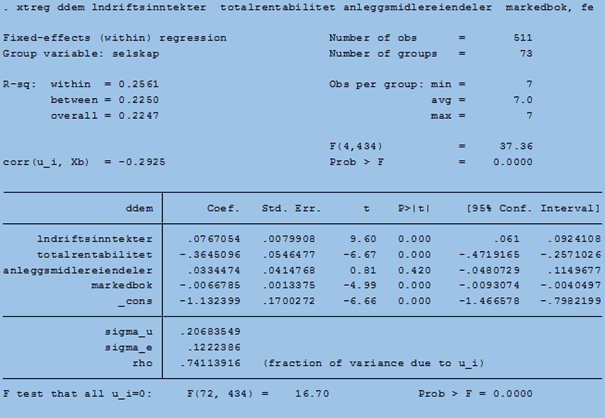

D/(D+Em) is the dependent variable, and the independent variables are the four adjacent to it. Disregard the two outer variables (the red area). The independent variable "Anleggsmidler/eiendeler" (fixed assets / total assets) has a Pearson correlation coefficient of 0.177 with the dependent variable, which is highly significant, as you can see. However, when I run the regression analysis (as shown below), the relationship between "Anleggsmidler/eiendeler" and the dependent variable is not significant at all. Why do the two results differ so much in terms of significance?

My second question: is there a command to include standardised coefficients in fixed effects regression results in Stata? The beta option does not work when I use fixed effects regression (I am pretty green when it comes to Stata). Any advice on these two questions? Thanks in advance!