Discussion Overview

The discussion revolves around determining the necessary sample size for estimating a population mean with a specified maximum error in the context of confidence intervals. Participants are exploring the mathematical relationship between sample size and error margin, particularly focusing on how to adjust sample size to achieve a desired level of precision.

Discussion Character

- Homework-related

- Mathematical reasoning

Main Points Raised

- One participant asks for urgent help determining the sample size required to achieve a given maximum error.

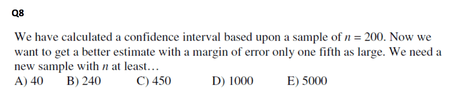

- Another participant presents the formula for the maximum error of estimate for a mean (of the form $E = z_{\alpha/2}\,\sigma/\sqrt{n}$) and asks by how much the sample size must be increased to reduce the error to one-fifth of its original value.

- A subsequent post repeats the question of the factor by which the sample size must increase and proposes 5000 as the answer.

- A later reply states that the sample size must increase by a factor of 25: because $n$ appears under a square root, reducing $E$ to one-fifth requires multiplying $\sqrt{n}$ by 5, and therefore $n$ by $5^2 = 25$.
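The factor of 25 can be derived directly, assuming the known-$\sigma$ form $E = z_{\alpha/2}\,\sigma/\sqrt{n}$ with the same confidence level (so the same $z_{\alpha/2}$) before and after. Let $n'$ be the new sample size and require $E' = E/5$:

$$
z_{\alpha/2}\frac{\sigma}{\sqrt{n'}} = \frac{1}{5}\, z_{\alpha/2}\frac{\sigma}{\sqrt{n}}
\;\Longrightarrow\; \sqrt{n'} = 5\sqrt{n}
\;\Longrightarrow\; n' = 25\,n .
$$

More generally, shrinking the maximum error by a factor $k$ multiplies the required sample size by $k^2$.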

Areas of Agreement / Disagreement

There is no consensus on the final sample size; participants discuss the calculations and the assumptions behind them without reaching a definitive conclusion.

Contextual Notes

The discussion does not state the initial sample size or the specific values of $\sigma$ and $E$, which affect both the calculations and the assumptions participants make.
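Once $\sigma$, $E$, and a confidence level are known, the required sample size follows from solving $E = z_{\alpha/2}\,\sigma/\sqrt{n}$ for $n$ and rounding up. The sketch below assumes a known population $\sigma$ and a normal (z) interval; the function names and the illustrative values $\sigma = 15$, $E = 5$ are hypothetical and do not come from the discussion.

```python
import math
from statistics import NormalDist


def required_sample_size(sigma: float, max_error: float, confidence: float = 0.95) -> int:
    """Smallest n with z * sigma / sqrt(n) <= max_error (known-sigma z-interval assumed)."""
    if sigma <= 0 or max_error <= 0:
        raise ValueError("sigma and max_error must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    # Two-sided critical value z_{alpha/2}, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Solve E = z * sigma / sqrt(n) for n, then round up to a whole observation.
    return math.ceil((z * sigma / max_error) ** 2)


def sample_size_factor(shrink: float) -> float:
    """Factor by which n must grow when the maximum error is divided by `shrink`."""
    return shrink ** 2


print(sample_size_factor(5))            # 25
print(required_sample_size(15, 5))      # hypothetical sigma = 15, E = 5
print(required_sample_size(15, 5 / 5))  # same sigma, error cut to one-fifth
```

Because of the ceiling, the two rounded sample sizes need not differ by exactly 25; the unrounded values of $(z\sigma/E)^2$ always do.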