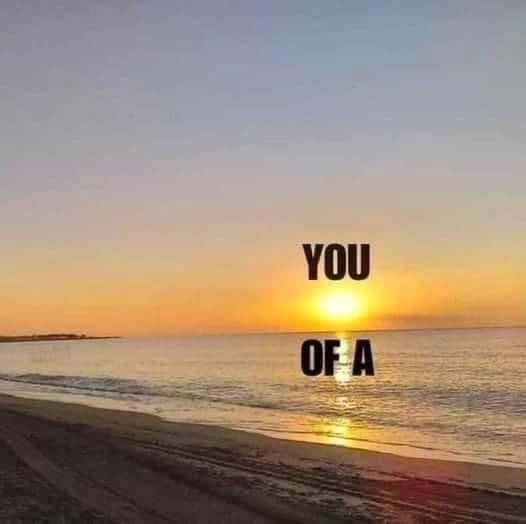

I found this image on social media today:

This image is so simple, yet out of the ordinary. You really need to stop and think about what you are looking at. Some people might never get it.

The question that quickly popped into my mind was: Will AI ever be able to explain what this image represents, without being fed anything else? What amazes me is that I can even do it! I fail to see how a bunch of statistical analysis could do the trick. And if it is possible, the database would have to be really huge and diverse.

Have you seen other examples of tasks that are seemingly simple for humans but seem impossible for AI? Something that doesn't obey any known rules per se, but that humans can figure out rather easily nonetheless.
