Discussion Overview

The discussion revolves around the interpretation of elementary row and column operations on matrices as a change of basis in the context of linear transformations between modules. Participants explore the implications of these operations on the representation of linear maps and the associated bases for the domain and range.

Discussion Character

- Exploratory

- Technical explanation

- Debate/contested

- Mathematical reasoning

Main Points Raised

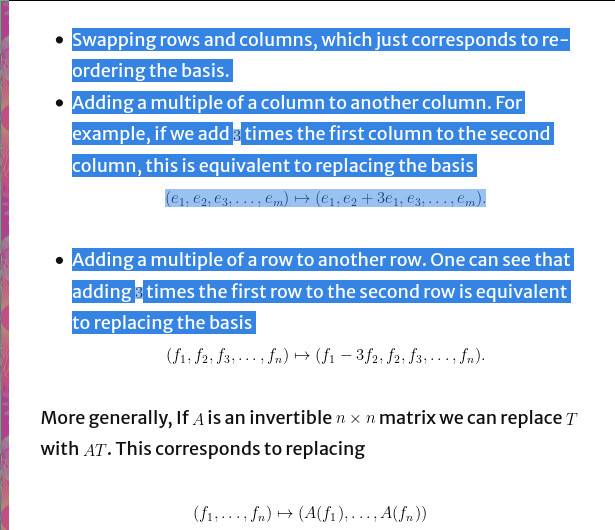

- Some participants propose that elementary row and column operations can be viewed as a change of basis, specifically in the context of linear transformations represented by matrices.

- Others argue that the term "change of basis" implies that the bases for the domain and range are already different, and that the operations change one or both of those bases.

- One participant notes that the determinant of a linear transformation is invariant under a change of basis, and takes this to suggest that a matrix representing a linear transformation must use the same basis for both the domain and the range.

- Another participant clarifies that a single row or column operation cannot be treated as a complete change of basis, since it involves either pre-multiplication or post-multiplication by an elementary matrix, not both.

- Some participants express uncertainty about the order of operations when performing corresponding row and column operations, questioning whether it matters which operation is performed first.

- One participant provides a specific example of changing bases and asks whether it is clear how the inverse matrix enters the change of basis for the range of the transformation.

- Another participant emphasizes the importance of focusing on how matrices affect vector components rather than just their rows and columns.
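
The points about pre- and post-multiplication and about the order of operations can be checked on a small case. The sketch below is only an illustration and does not come from the thread: the matrix `A`, the helper names `matmul`, `identity`, and `add_multiple`, and the particular operations are all assumptions. It treats a row operation as left multiplication by an elementary matrix `E` and a column operation as right multiplication by an elementary matrix `F`, then compares the two possible orders.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def identity(n):
    """n x n identity matrix."""
    return [[int(i == j) for j in range(n)] for i in range(n)]

def add_multiple(n, target, source, c):
    """Elementary matrix: the identity with c placed at (target, source)."""
    E = identity(n)
    E[target][source] = c
    return E

# Hypothetical 2x3 integer matrix: a map from a rank-3 free module
# to a rank-2 free module, written in some chosen pair of bases.
A = [[1, 2, 3],
     [4, 5, 6]]

E = add_multiple(2, 1, 0, -4)  # row op R2 <- R2 - 4*R1, acts on the left
F = add_multiple(3, 0, 1, -2)  # column op C2 <- C2 - 2*C1, acts on the right

rows_first = matmul(matmul(E, A), F)  # row operation, then column operation
cols_first = matmul(E, matmul(A, F))  # column operation, then row operation

# Matrix multiplication is associative, so both orders give the same matrix.
print(rows_first == cols_first)
```

Because `E` only ever multiplies on the left and `F` only on the right, each one on its own adjusts at most one of the two bases, which is the distinction drawn in the bullets above. The example does not settle the terminology question of whether a single operation should be called a change of basis.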

Areas of Agreement / Disagreement

Participants express differing views on the interpretation of elementary operations as changes of basis, with no consensus reached on whether these operations can be fully equated to a change of basis. The discussion remains unresolved regarding the implications of these operations on the representation of linear transformations.

Contextual Notes

Participants note that the invariance of the determinant under a change of basis applies only to square matrices, which may limit its relevance to the general case discussed in the blog post. Additionally, there are unresolved questions about the assumptions made regarding the bases and the specific operations performed.
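
A short calculation shows why the determinant remark depends on using one basis for both sides. The symbols here are assumptions, not notation from the thread: $A$ is a square matrix, $P$ is the invertible change-of-basis matrix for the range, and $Q$ is the one for the domain.

```latex
\begin{aligned}
B &= P^{-1} A Q \\
\det B &= \det(P)^{-1}\,\det(A)\,\det(Q) \\
Q = P \ \Longrightarrow\ \det B &= \det A
\end{aligned}
```

When $P$ and $Q$ are chosen independently, the determinant of the new matrix can differ from $\det A$ by the factor $\det(Q)/\det(P)$, so invariance holds only when the same change of basis is applied to both the domain and the range.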