hodor

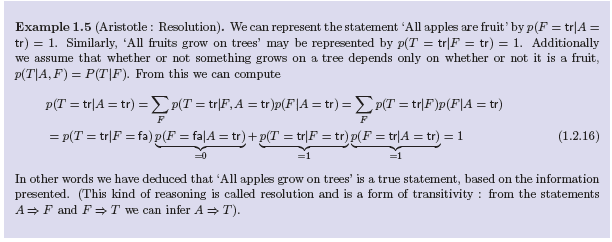

Above is a screenshot of the example from a free online textbook. I'm having a tough time making the leap to the first sum: what allows me to rewrite P(T|A) as the sum of the products of those two conditional probabilities?
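The screenshot isn't reproduced here, so this sketch assumes the first sum runs over the values b of some intermediate variable, called B here as a hypothetical name. In that case the step is the law of total probability applied inside the conditional distribution given A: P(T|A) = Σ_b P(T|A, B=b) · P(B=b|A). This holds because the events {B=b} partition the sample space, so P(T ∩ A) = Σ_b P(T ∩ A ∩ {B=b}). Dividing both sides by P(A) and writing each term as P(T ∩ A ∩ {B=b}) / P(A) = [P(T ∩ A ∩ {B=b}) / P(A ∩ {B=b})] · [P(A ∩ {B=b}) / P(A)] gives the product of the two conditional probabilities. The Python below checks the identity numerically on a made-up joint distribution over binary A, B, T. The numbers are arbitrary and not taken from the textbook.

```python
import math
from itertools import product

# Hypothetical joint distribution P(A, B, T) over binary variables.
# Values are arbitrary (not from the textbook) and sum to 1.
joint = dict(zip(
    product([0, 1], repeat=3),  # outcomes (a, b, t)
    [0.10, 0.05, 0.15, 0.10, 0.20, 0.05, 0.05, 0.30],
))

def prob(event):
    """Total probability of all outcomes (a, b, t) where event is true."""
    return sum(p for outcome, p in joint.items() if event(*outcome))

def both(e1, e2):
    """Intersection of two events."""
    return lambda a, b, t: e1(a, b, t) and e2(a, b, t)

def cond(event, given):
    """P(event | given) = P(event and given) / P(given)."""
    return prob(both(event, given)) / prob(given)

def B_is(value):
    """The event {B = value}."""
    return lambda a, b, t: b == value

A = lambda a, b, t: a == 1
T = lambda a, b, t: t == 1

# Left-hand side: P(T | A) computed directly from the joint.
lhs = cond(T, A)

# Right-hand side: sum over b of P(T | A, B=b) * P(B=b | A).
rhs = sum(cond(T, both(A, B_is(v))) * cond(B_is(v), A) for v in (0, 1))

print(f"{lhs:.4f} {rhs:.4f}")  # prints 0.5833 0.5833
assert math.isclose(lhs, rhs)
```

Both sides agree for any valid joint distribution, not just this one. The sum is simply P(T|A) broken down by which value B takes.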

Thanks