WMDhamnekar

MHB


Hi,

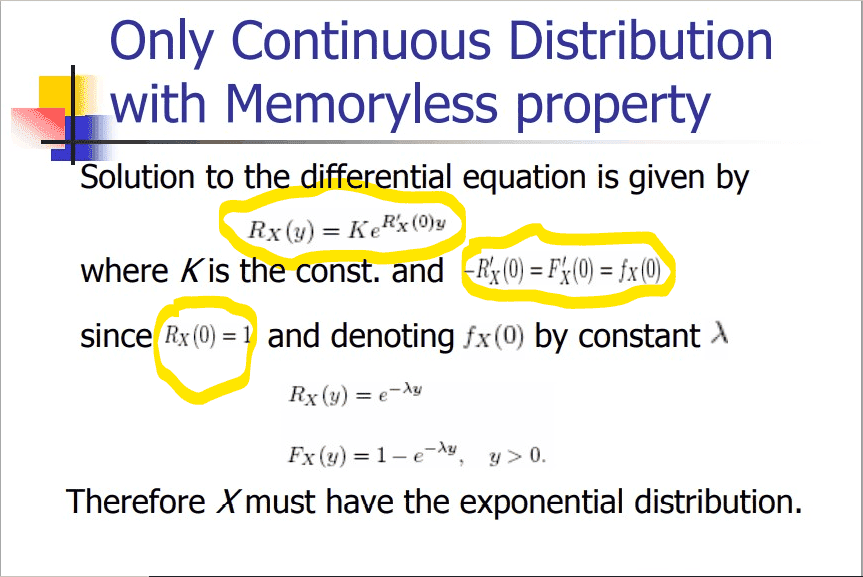

I want to know how the highlighted steps on the first page are derived. What are \(R_X(y)\), \(R'_X(y)\) and \(F'_X(0)\)? Why is \(R_X(0) = 1\)? I think the solution to the differential equation should be \(R_X(y) = K e^{\int R'_X(0)\,dy}\), but the result shown is different. Why is that?
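The image is not reproduced here, so the following is only a sketch under an assumption: that the highlighted step is the differential equation $R'_X(y) = R'_X(0)\,R_X(y)$, which is the equation whose solution is proposed above. Because $R'_X(0)$ is a constant, $\int_0^y R'_X(0)\,dt = R'_X(0)\,y$, so $K e^{\int R'_X(0)\,dy}$ and $K e^{R'_X(0)\,y}$ are the same function. Separating variables gives:

```latex
\begin{aligned}
R'_X(y) &= R'_X(0)\,R_X(y) \\
\frac{R'_X(y)}{R_X(y)} &= R'_X(0) \qquad (R_X(y) > 0) \\
\ln R_X(y) &= R'_X(0)\,y + C \\
R_X(y) &= K\,e^{R'_X(0)\,y}, \qquad K = e^{C} \\
R_X(0) &= K\,e^{0} = K
\end{aligned}
```

If, as is usual, $R_X(y) = P(X > y) = 1 - F_X(y)$ for a nonnegative continuous $X$ (again an assumption about the notation in the image), then $R_X(0) = P(X > 0) = 1$, so $K = 1$ and, writing $\lambda = -R'_X(0)$, $R_X(y) = e^{-\lambda y}$.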

Also, what does $-R'_X(0) = F'_X(0) = f_X(0)$ mean? I know that the derivative of the CDF is the PDF, but in this case I find it difficult to understand.
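A minimal numerical sketch, assuming $R_X(y) = 1 - F_X(y)$ (so $R'_X(y) = -F'_X(y) = -f_X(y)$, and at $y = 0$ this reads $-R'_X(0) = F'_X(0) = f_X(0)$). The exponential distribution and the rate `lam = 2.0` are hypothetical choices for illustration only, and the helper names (`reliability`, `forward_derivative`) are made up for this sketch:

```python
import math

# Hypothetical example: X ~ Exponential(rate=lam), chosen only for illustration.
lam = 2.0

def cdf(y: float) -> float:
    """F_X(y) = P(X <= y) for y >= 0."""
    return 1.0 - math.exp(-lam * y)

def pdf(y: float) -> float:
    """f_X(y) = F'_X(y) for y >= 0."""
    return lam * math.exp(-lam * y)

def reliability(y: float) -> float:
    """Assumed definition: R_X(y) = P(X > y) = 1 - F_X(y)."""
    return 1.0 - cdf(y)

def forward_derivative(g, y: float, h: float = 1e-6) -> float:
    """One-sided difference quotient; one-sided because X >= 0, so y = 0 is an endpoint."""
    return (g(y + h) - g(y)) / h

r_prime_0 = forward_derivative(reliability, 0.0)  # approximates R'_X(0)
f_prime_0 = forward_derivative(cdf, 0.0)          # approximates F'_X(0)

print(f"R_X(0)   = {reliability(0.0)}")
print(f"-R'_X(0) ~ {-r_prime_0:.6f}")
print(f"F'_X(0)  ~ {f_prime_0:.6f}")
print(f"f_X(0)   = {pdf(0.0)}")
```

The three printed quantities agree up to the $O(h)$ error of the difference quotient, and $R_X(0)$ comes out as exactly 1, which is the value used to fix the constant $K$.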

If any MHB member can answer these questions correctly, please reply.

