evinda

Hello! (Wave)

The following algorithm is given:

View attachment 7522

And it says the following:

View attachment 7523

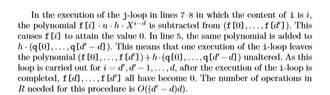

First of all, in the first line, do they mean that the value of $j$ is $i$?

About the second line, why don't we subtract the polynomial $f \cdot u \cdot h \cdot X^i$ from $(f[0], \dots, f[d'])$?

Is there maybe an error in line 8 of the algorithm? In the first iteration, with $i = d'$ and $j = i = d'$, we would get $f[d'] \leftarrow f[d'] - u \, f[d'] \, h[d']$, but $h[d']$ is $0$ if we suppose that $d < d'$. So should it maybe be $f[j] \leftarrow f[j] - a \, h[j-d]$?

Also, what do they mean in the part <<In line 5... unaltered.>>? In line 5 of the algorithm we have $a \leftarrow u \cdot f$. Why is it said that the polynomial $f \cdot u \cdot h \cdot X^{i-d}$ is added to $h \cdot (q[0], \dots, q[d'-d])$? (Thinking)
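To make the indexing question concrete, here is a minimal sketch of ordinary schoolbook polynomial division with remainder. It is only an assumption that the attached algorithm follows this scheme; the function name `poly_divmod`, the use of `Fraction` coefficients, and the loop bounds are illustrative choices and are not taken from the attachment. Coefficient lists are stored lowest degree first, so `f[k]` is the coefficient of $X^k$.

```python
from fractions import Fraction


def poly_divmod(f, h):
    """Divide f by h (coefficient lists, lowest degree first).

    Hypothetical helper for illustration; returns (q, r) with f = q*h + r.
    Assumes the leading coefficient h[d] is nonzero (hence invertible over Q).
    """
    f = [Fraction(c) for c in f]  # working copy; ends up holding the remainder
    h = [Fraction(c) for c in h]
    d_prime, d = len(f) - 1, len(h) - 1
    if d_prime < d:
        return [Fraction(0)], f
    u = 1 / h[d]                  # inverse of the leading coefficient of h
    q = [Fraction(0)] * (d_prime - d + 1)
    for i in range(d_prime, d - 1, -1):
        a = u * f[i]              # next quotient coefficient
        q[i - d] = a
        # Subtract a * h * X^(i-d); only f[i-d], ..., f[i] are touched.
        for j in range(i, i - d - 1, -1):
            f[j] -= a * h[j - (i - d)]
    return q, f[:d]


# Example: X^3 + 2X + 1 = (X + 1)(X^2 - X + 3) - 2
q, r = poly_divmod([1, 2, 0, 1], [1, 1])
print(q, r)
```

In this sketch the polynomial subtracted in iteration $i$ is $a \cdot h \cdot X^{i-d}$, so the coefficient of $h$ that lines up with $f[j]$ is `h[j - (i - d)]`. Whether the attachment uses the same index convention is exactly what the question above is asking.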

