Homework Help Overview

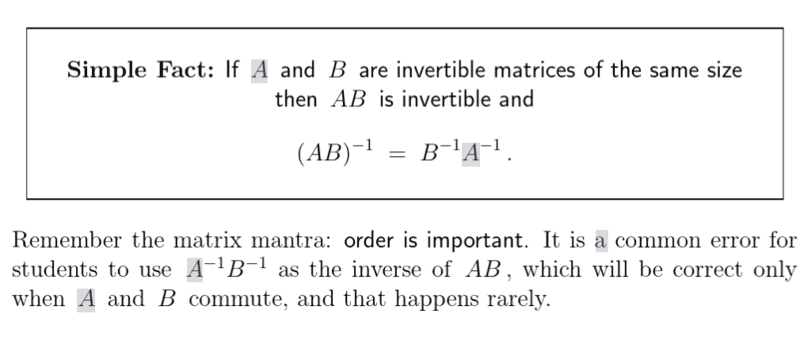

The discussion concerns commuting matrices: two square matrices A and B commute when A·B = B·A. Participants explore what this property implies and how it relates to matrix inverses and the identity matrix.
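As a concrete illustration of the definition, here is a minimal sketch in plain Python. The helper names `matmul` and `commute` and the example matrices are assumptions chosen for illustration, not taken from the thread. It checks that a matrix commutes with the identity and with its own inverse, and that two arbitrary matrices need not commute.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows (hypothetical helper)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def commute(a, b):
    """Return True when a*b equals b*a (hypothetical helper)."""
    return matmul(a, b) == matmul(b, a)


# Example matrices (illustrative assumptions, integer entries for exact comparison).
I = [[1, 0], [0, 1]]        # 2x2 identity
A = [[2, 1], [1, 1]]        # determinant 1, so its inverse has integer entries
A_inv = [[1, -1], [-1, 2]]  # inverse of A
B = [[0, 1], [1, 0]]        # swaps rows or columns, depending on the side

print(commute(A, I))      # every square matrix commutes with the identity
print(commute(A, A_inv))  # a matrix commutes with its own inverse
print(commute(A, B))      # these two do not commute
```

Integer entries are used so that equality can be tested exactly; with floating-point entries a tolerance-based comparison would be the safer choice.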

Discussion Character

- Conceptual clarification, Mathematical reasoning

Approaches and Questions Raised

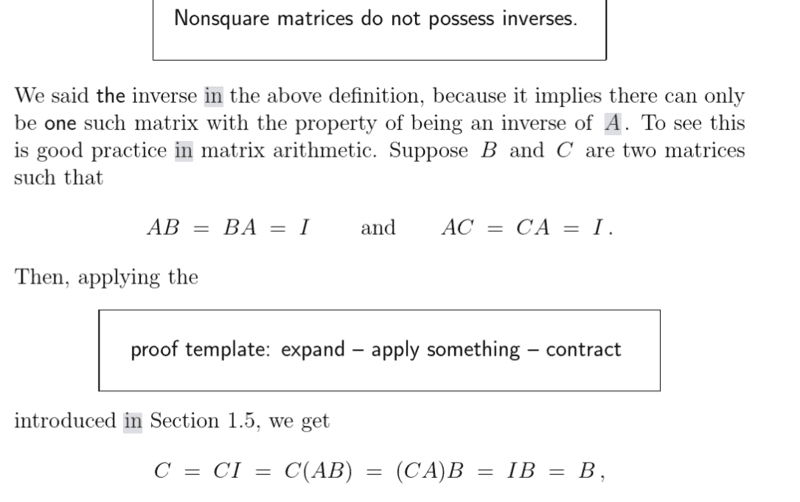

- Participants ask where the equation C = CI comes from and what it means for two matrices to commute. Some attempt to verify mathematical statements about matrix inverses and identity matrices.
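The summary does not say where C = CI appeared, so the following is only one plausible context, offered as an assumption: the standard argument that a matrix inverse is unique. Suppose, hypothetically, that B and C are both inverses of A, so that AB = BA = I and CA = AC = I. Starting from C = CI, which holds because multiplying by the identity changes nothing, the chain below shows that C must equal B.

```latex
\begin{align*}
C &= CI      && \text{(the identity leaves any matrix unchanged)} \\
  &= C(AB)   && \text{(assumption: } AB = I\text{)} \\
  &= (CA)B   && \text{(matrix multiplication is associative)} \\
  &= IB      && \text{(assumption: } CA = I\text{)} \\
  &= B.
\end{align*}
```

Under these assumptions the inverse is unique, and because it satisfies both AB = I and BA = I, it commutes with A.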

Discussion Status

Some participants have supplied definitions and properties of commuting matrices, while others are checking their understanding against specific examples. The discussion appears productive, with several interpretations and lines of mathematical reasoning being explored.

Contextual Notes

The discussion focuses on the properties of matrix multiplication and on the uniqueness of inverses and of the identity matrix. Participants are encouraged to explore these concepts further without reaching definitive conclusions.