Discussion Overview

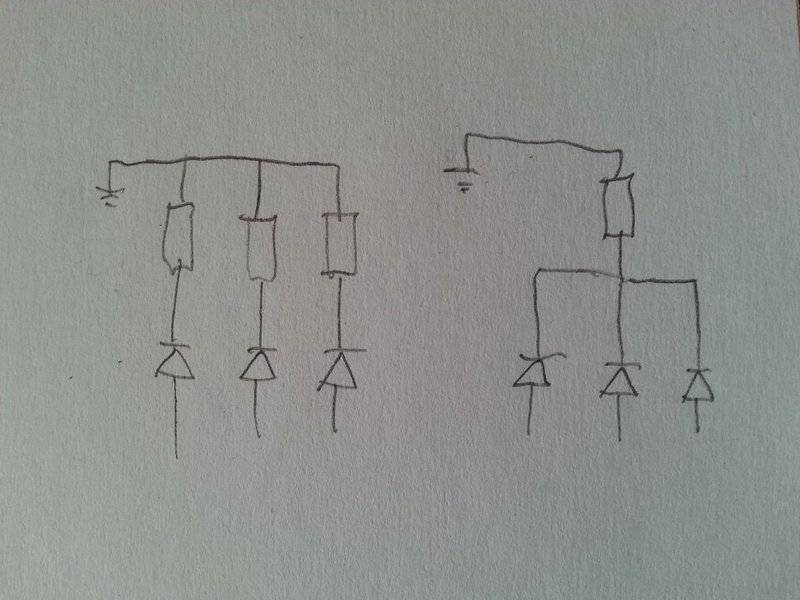

The discussion centers on why resistors are placed in series with LEDs, particularly in Arduino circuits. Participants explore whether a single resistor can serve multiple LEDs or whether each LED needs its own resistor to keep brightness consistent and to avoid problems caused by differing forward voltages.
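As a point of reference for the question, the usual sizing rule for one LED with its own resistor is R = (V_supply - V_forward) / I_led. The sketch below applies it with assumed values that are not taken from the thread: a 5 V supply, a 2.0 V forward voltage, and a 20 mA target current. The function name `series_resistor` is illustrative.

```python
def series_resistor(v_supply, v_forward, i_led):
    """Return the series resistance in ohms that sets the LED current.

    Uses the simple constant-forward-voltage model:
    R = (v_supply - v_forward) / i_led
    """
    if v_supply <= v_forward:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    return (v_supply - v_forward) / i_led


# Assumed example values: 5 V supply, 2.0 V forward voltage, 20 mA target.
r = series_resistor(5.0, 2.0, 0.020)
print(f"series resistor: {r:.0f} ohms")
```

With these assumed numbers the resistor drops 3 V at 20 mA, which works out to 150 ohms. Real parts should be checked against their datasheets.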

Discussion Character

- Exploratory

- Technical explanation

- Debate/contested

Main Points Raised

- One participant notes that while a resistor is needed to limit current, it is unclear why separate resistors are commonly used for each LED, suggesting that a single resistor might suffice.

- Another participant explains that when multiple diodes are connected in parallel behind a single resistor, they are all forced to the same voltage, so differing forward voltages lead to uneven brightness and potential runaway current in the diodes with the lower forward voltage.

- A different viewpoint suggests that well-matched LEDs can be connected with a single resistor or current driver, but general batches of LEDs may not be well-matched, leading to only a few being at full brightness.

- One participant emphasizes that, with a shared resistor, the voltage seen by the LEDs would vary with how many are lit, whereas each LED should receive the voltage it needs regardless of the state of the others.

- Another participant concurs that using well-matched LEDs in series may be practical, but parallel connections could lead to issues.

- A later reply expresses a desire to understand the reasoning behind the use of resistors rather than simply accepting it as a solution.
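The parallel-connection points above can be illustrated with a rough numerical sketch. It models each LED with the Shockley diode equation and solves for the shared node voltage by bisection. Everything numeric here is an assumption chosen for illustration, not data from the thread: the saturation currents, the ideality factor `n = 2.0`, the 5 V supply, and the 50 ohm shared resistor. Mismatch is represented by giving each LED a different saturation current, and the model ignores heating, so it shows the uneven sharing but not the thermal runaway itself.

```python
import math


def led_current(v, i_sat, n=2.0, v_t=0.02585):
    """Shockley diode current in amps at forward voltage v."""
    return i_sat * (math.exp(v / (n * v_t)) - 1.0)


def shared_resistor_currents(v_supply, r, i_sats, n=2.0):
    """Currents through parallel LEDs fed by one shared resistor.

    Solves (v_supply - v) / r = sum of LED currents for the common
    node voltage v by bisection. Returns (v, [per-LED currents]).
    """
    lo, hi = 0.0, v_supply
    for _ in range(200):
        mid = (lo + hi) / 2.0
        supplied = (v_supply - mid) / r
        drawn = sum(led_current(mid, s, n) for s in i_sats)
        if drawn > supplied:
            hi = mid  # node voltage too high, LEDs draw more than supplied
        else:
            lo = mid
    v = (lo + hi) / 2.0
    return v, [led_current(v, s, n) for s in i_sats]


# Hypothetical mismatched batch: saturation currents differ by up to 4x.
v, currents = shared_resistor_currents(5.0, 50.0, [1e-18, 2e-18, 4e-18])
for k, i in enumerate(currents):
    print(f"LED {k}: {i * 1000:.1f} mA at {v:.3f} V")

# Same resistor, but only one LED lit: it takes the whole current.
_, alone = shared_resistor_currents(5.0, 50.0, [1e-18])
print(f"single lit LED: {alone[0] * 1000:.1f} mA")
```

Because all the LEDs sit at the same voltage, the current divides in proportion to the saturation currents in this model, so the LED with the lowest forward voltage takes the largest share. The last call shows the other effect raised in the thread: with one shared resistor, the current through a lit LED depends on how many others are lit.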

Areas of Agreement / Disagreement

Participants generally agree that separate resistors are preferable in most cases, particularly with mismatched LEDs, though there is some discussion about the conditions under which a single resistor might be acceptable. Best practices for different types of LED circuits remain unresolved.
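A quick way to see why separate resistors tolerate mismatch is to apply the constant-forward-voltage approximation to each branch on its own. The sketch below assumes a 5 V supply, a 150 ohm resistor per LED, and forward voltages of 1.9 V, 2.0 V, and 2.1 V. These values and the function name `per_led_currents` are hypothetical.

```python
def per_led_currents(v_supply, r, forward_voltages):
    """Current in each LED when every LED has its own resistor r.

    Each branch is independent: I = (v_supply - v_forward) / r.
    """
    return [(v_supply - vf) / r for vf in forward_voltages]


# Assumed mismatched forward voltages, one 150 ohm resistor per LED.
currents = per_led_currents(5.0, 150.0, [1.9, 2.0, 2.1])
spread = (max(currents) - min(currents)) / currents[1]
for vf, i in zip([1.9, 2.0, 2.1], currents):
    print(f"Vf = {vf:.1f} V: {i * 1000:.1f} mA")
print(f"relative spread: {spread:.1%}")
```

Under these assumptions, a 0.2 V difference in forward voltage changes the branch current by only a few percent, because most of the supply voltage is dropped across the resistor rather than the LED.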

Contextual Notes

Participants mention that the performance of LEDs can depend on their matching and the specific circuit configuration, indicating that assumptions about uniformity and voltage distribution may not hold in all scenarios.