Math Amateur

I am reading Bruce N. Cooperstein's book Advanced Linear Algebra (Second Edition).

I am focused on Section 10.1, Introduction to Tensor Products.

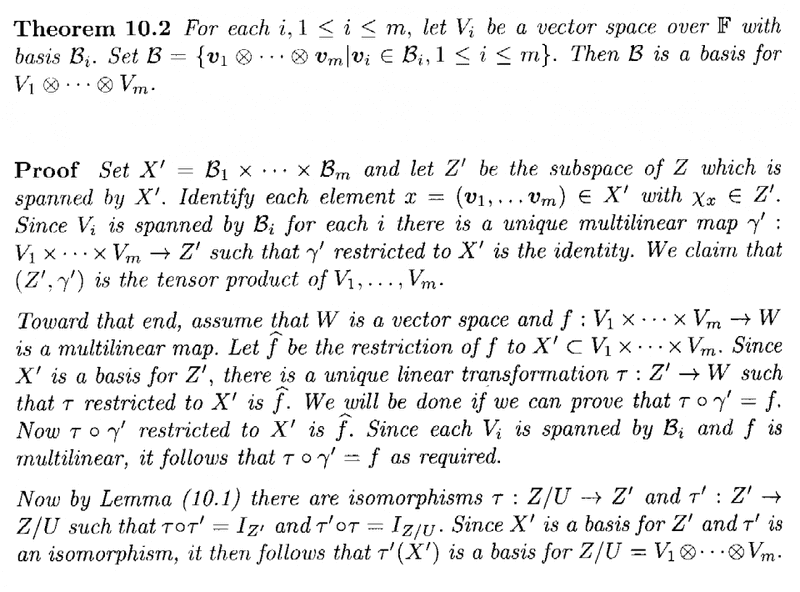

I need help with an aspect of Theorem 10.2, which concerns the basis of a tensor product. Theorem 10.2 reads as follows:

I do not follow the proof of this Theorem, because it appears to me that [itex]Z'[/itex], the space spanned by [itex]X'[/itex], is actually equal to [itex]Z[/itex]. But the Theorem implies that it is a proper subspace; otherwise, why mention [itex]Z'[/itex] at all?

I will explain my confusion with a simple example involving two vector spaces [itex]V[/itex] and [itex]W[/itex], where [itex]V[/itex] has basis [itex]B_1 = \{ v_1, v_2, v_3 \}[/itex] and [itex]W[/itex] has basis [itex]B_2 = \{ w_1, w_2 \}[/itex].

Following the proof, we set [itex]B = \{ v \otimes w \ | \ v \in B_1, w \in B_2 \}[/itex]. The elements of [itex]B[/itex] are then

[itex]v_1 \otimes w_1, \ v_1 \otimes w_2, \ v_2 \otimes w_1, \ v_2 \otimes w_2, \ v_3 \otimes w_1, \ v_3 \otimes w_2[/itex]

and we have

[itex]X' = B_1 \times B_2 = \{ (v_1, w_1) , \ (v_1, w_2) , \ (v_2, w_1) , \ (v_2, w_2) , \ (v_3, w_1) , \ (v_3, w_2) \}[/itex]

Now [itex]Z'[/itex] is the subspace of [itex]Z[/itex] spanned by [itex]X'[/itex]. But [itex]B_1[/itex] spans [itex]V[/itex] and [itex]B_2[/itex] spans [itex]W[/itex], so surely [itex]B_1 \times B_2[/itex] spans [itex]V \times W[/itex].

But this does not seem to be what the Theorem implies (although the proof does not seem to rule it out).

Can someone please clarify the above for me and critique my example? How is [itex]Z'[/itex] a proper subspace of [itex]Z[/itex]?

Hope someone can help.

Peter