Discussion Overview

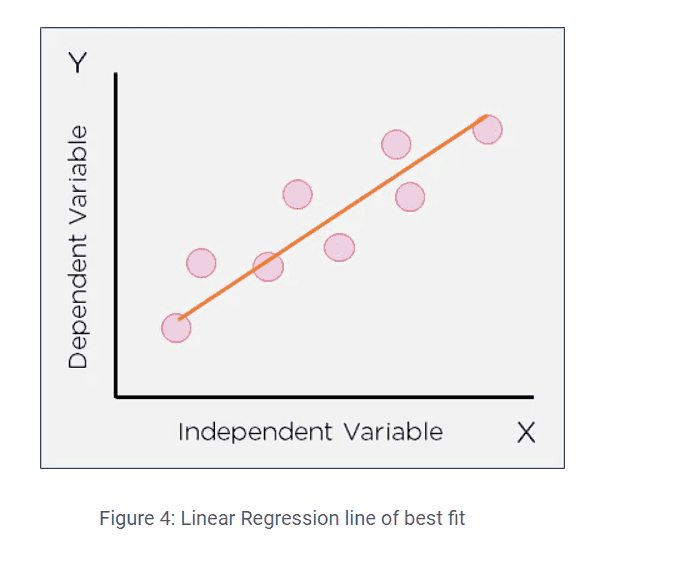

The discussion centers on whether linear regression can be used for classification tasks, in particular whether it can effectively predict categorical outcomes such as yes/no decisions. Participants compare linear regression with logistic regression and question the limitations and implications of applying linear regression in this context.

Discussion Character

- Debate/contested

- Technical explanation

Main Points Raised

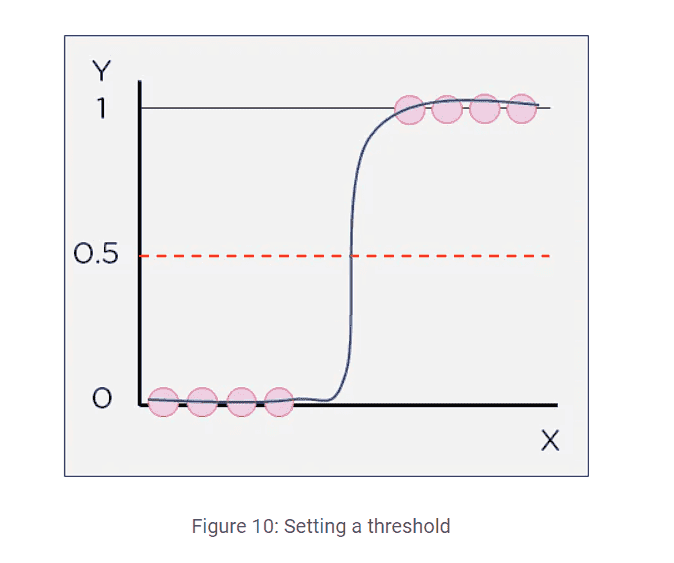

- Some participants argue that linear regression can be used to predict binary outcomes by applying a threshold to the model's output (e.g., if the predicted value is greater than 0.5, then y = 1; otherwise, y = 0).

- Others point out that linear regression is traditionally used for continuous values, while logistic regression is designed for categorical outcomes, suggesting a fundamental difference in application.

- A participant mentions that linear regression can fit nonlinear functions of the inputs, provided the model remains linear in its parameters.

- Concerns are raised about the validity of using linear regression for classification, particularly regarding the nature of the relationship between variables and the definition of categories.

- One participant critiques a visual example provided in the discussion, stating that it does not effectively illustrate a threshold curve due to the nature of the input data points.
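
To make the thresholding idea from the points above concrete, here is a minimal sketch in plain Python. It is not taken from the thread: the toy data, the helper names `fit_line` and `classify`, and the 0.5 cutoff are all assumptions chosen for illustration. It fits an ordinary least-squares line to 0/1 labels, thresholds the output, and then shows how a single distant point can shift the decision boundary, which is one commonly cited reason for the concerns about using linear regression as a classifier.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ~ a + b*x with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def classify(x, a, b, threshold=0.5):
    """Turn the continuous prediction into a 0/1 label (assumed cutoff 0.5)."""
    return 1 if a + b * x > threshold else 0


# Hypothetical toy data: label is 0 for small x and 1 for large x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [0, 0, 0, 1, 1, 1]

a, b = fit_line(xs, ys)
predictions = [classify(x, a, b) for x in xs]
print(predictions)  # [0, 0, 0, 1, 1, 1]

# The raw outputs are not probabilities: they fall outside [0, 1] at the ends.
raw_outputs = [a + b * x for x in xs]

# Add one correctly labelled but distant point and refit.
xs_outlier = xs + [30.0]
ys_outlier = ys + [1]
a2, b2 = fit_line(xs_outlier, ys_outlier)
predictions_outlier = [classify(x, a2, b2) for x in xs_outlier]
print(predictions_outlier)  # [0, 0, 0, 0, 1, 1, 1]  (x = 4 now flips to 0)
```

On the first data set the thresholded line reproduces the labels exactly, which is consistent with the view that the approach can work. After the distant point is added, the fitted slope flattens and x = 4 is misclassified even though the new point was labelled correctly. Logistic regression is usually less sensitive to this, because points far from the boundary contribute little to its loss.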

Areas of Agreement / Disagreement

Participants express differing views on the appropriateness of using linear regression for classification. There is no consensus on whether it is a valid approach, with some supporting its use under certain conditions and others arguing against it based on the nature of linear relationships.

Contextual Notes

Participants highlight limitations related to the definition of categories and the nature of relationships in the context of using linear regression for classification. There are unresolved questions about the implications of branching in programming and its relevance to the discussion.