roam

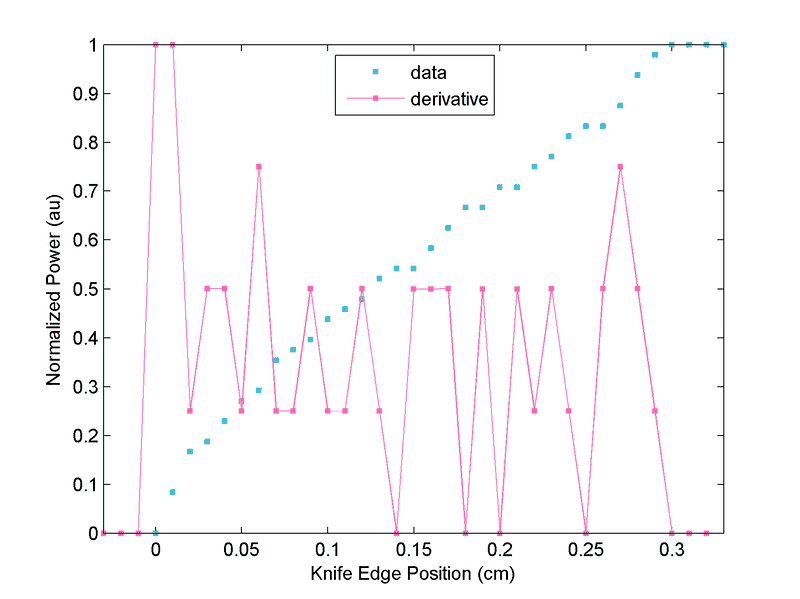

I am using the "knife-edge" technique to find the intensity profile of a rectangular laser beam. This method measures power, which is the integral of the intensity over the unblocked part of the beam. Therefore, to get the intensity profile we must differentiate the data.
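To make the relation between power and intensity concrete, here is a minimal sketch on idealized, noise-free synthetic data (not the measured data). The beam edges, scan range, and number of points are assumed values chosen only for illustration, and `knife_edge_power` is a hypothetical helper.

```python
import numpy as np

def knife_edge_power(x, left=-1.0, right=1.0):
    """Power passing an edge at position x for an ideal top-hat beam
    of unit intensity between `left` and `right` (assumed values)."""
    return np.clip(x, left, right) - left

# Knife-edge positions (arbitrary units, assumed scan range).
x = np.linspace(-2.0, 2.0, 401)

# Measured quantity: a ramp, i.e. the integral of a rectangular function.
power = knife_edge_power(x)

# Differentiating the ramp recovers the rectangular intensity profile.
intensity = np.gradient(power, x)
```

In this ideal case `intensity` is flat on the ramp and zero outside it, which is the top-hat shape the differentiated measurement should resemble.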

So, as expected, my data looks like a ramp (integral of a rectangular function). But when I performed the numerical differentiation on the data the result was too noisy:

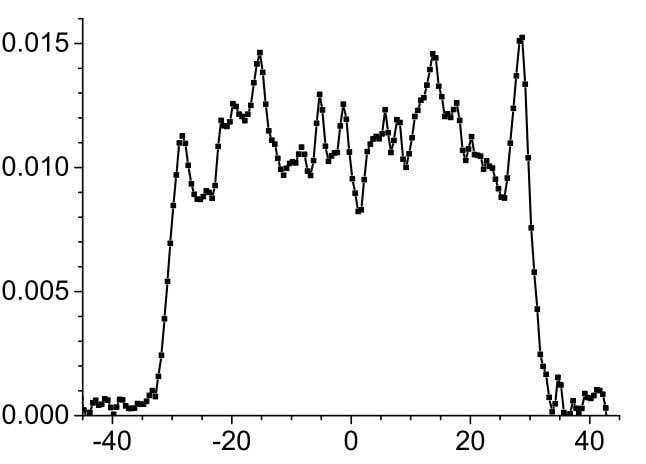

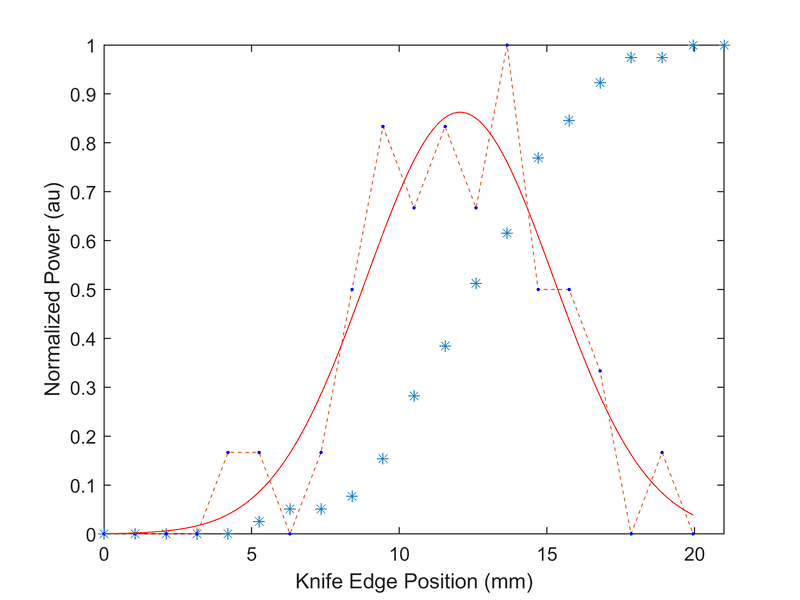

This doesn't really resemble the actual beam that we have. This is more like what is expected from a rectangular/tophat beam:

So, what kind of smoothing algorithm can I use on the differentiated data? How do we decide what smoothing would be the most appropriate and accurate in this situation?
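One candidate, sketched here on synthetic data rather than the real measurement, is a Savitzky-Golay filter, which fits a low-order polynomial in a sliding window and can return the derivative of that fit directly. This is only one option among several; the noise level, window length, and polynomial order below are assumptions that would need tuning against the real data.

```python
import numpy as np
from scipy.signal import savgol_filter

rng = np.random.default_rng(1)

# Synthetic knife-edge scan: ideal ramp plus assumed Gaussian noise.
x = np.linspace(-2.0, 2.0, 401)
dx = x[1] - x[0]
power = np.clip(x, -1.0, 1.0) + 1.0
noisy_power = power + rng.normal(0.0, 0.005, x.size)

# Plain finite-difference derivative: amplifies the noise.
raw_derivative = np.gradient(noisy_power, x)

# Savitzky-Golay derivative: local quadratic fit over 31 points,
# differentiated analytically (deriv=1), scaled by the step size.
smooth_derivative = savgol_filter(
    noisy_power, window_length=31, polyorder=2, deriv=1, delta=dx
)

# Compare the scatter on the flat top of the beam, away from the edges.
plateau = slice(150, 251)
raw_std = np.std(raw_derivative[plateau])
smooth_std = np.std(smooth_derivative[plateau])
print(raw_std, smooth_std)
```

The trade-off is that a wider window suppresses more noise but also blurs the edges of the top-hat over roughly the window width, so the window should stay short compared with the edge features that need to be resolved.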

Any help is greatly appreciated.

P.S. I could try to obtain more data points, but I am not sure that would help. I have used this technique before on Gaussian beams (in that case the raw data was an error function, erf). I had far fewer data points, yet I didn't get this much noise. Why?
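One possible contributing factor, offered as a sketch and not as a diagnosis of the actual data: with independent noise of fixed size on each power reading, a finite-difference derivative divides that noise by the step size, so sampling more densely over the same range makes the raw derivative noisier, not cleaner. The noise level and scan range below are assumed values, and `derivative_noise_std` is a hypothetical helper.

```python
import numpy as np

def derivative_noise_std(n_points, sigma=0.005, span=4.0, seed=0):
    """Standard deviation of the central-difference derivative of pure
    noise sampled at n_points over a fixed scan range (assumed values)."""
    rng = np.random.default_rng(seed)
    x = np.linspace(-span / 2, span / 2, n_points)
    noise = rng.normal(0.0, sigma, n_points)
    derivative = np.gradient(noise, x)
    # Drop the end points, which use one-sided differences.
    return np.std(derivative[1:-1])

coarse = derivative_noise_std(401)    # step size 0.01
fine = derivative_noise_std(4001)     # step size 0.001
print(coarse, fine)
```

For central differences the expected scatter is about `sigma / (sqrt(2) * dx)`, so ten times as many points gives roughly ten times the derivative noise unless the data are smoothed or binned first.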
