user_01

- 5

- 0

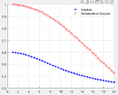

Let $X$ and $Y$ be i.i.d. random variables with $X\sim \exp(1)$ and $Y \sim \exp(1)$. I am looking for the probability $\Phi$ defined below. However, the analytical solution I obtained does not match my simulation. I am presenting it here in the hope that someone can rectify my mistake.


$$\Phi =\mathbb{P}\left[P_v \geq A + \frac{B}{Y}\right] $$

$$
P_v=
\left\{
\begin{array}{ll}
a\left(\frac{b}{1+\exp\left(-\bar \mu\frac{P_s X}{r^\alpha}+\varphi\right)}-1\right), & \text{if}\ \frac{P_s X}{r^\alpha}\geq P_a,\\
0, & \text{otherwise}.
\end{array}
\right.
$$
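Since the analytical result is being checked against a simulation, a direct Monte Carlo estimate of $\Phi$ is a useful reference point. Below is a minimal sketch in Python using only the standard library. The function name `simulate_phi`, its argument names, the default sample size and the parameter values are hypothetical choices, and `s` stands for $\frac{P_s X}{r^\alpha}$. It samples $X$ and $Y$ and applies the piecewise definition of $P_v$ directly, so it does not rely on any step of the derivation.

```python
import math
import random


def simulate_phi(a, b, A, B, mu_bar, P_s, r, alpha, phi, P_a,
                 n_samples=200_000, seed=0):
    """Monte Carlo estimate of Phi = P[P_v >= A + B/Y], with X, Y i.i.d. Exp(1).

    Argument names are illustrative only; they mirror the symbols in the question.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        x = rng.expovariate(1.0)  # X ~ Exp(1)
        y = rng.expovariate(1.0)  # Y ~ Exp(1), independent of X
        s = P_s * x / r**alpha    # the quantity P_s X / r^alpha
        if s >= P_a:
            p_v = a * (b / (1.0 + math.exp(-mu_bar * s + phi)) - 1.0)
        else:
            p_v = 0.0
        # Event P_v >= A + B/Y, multiplied through by Y > 0 to avoid dividing by zero
        if p_v * y >= A * y + B:
            hits += 1
    return hits / n_samples


if __name__ == "__main__":
    # Hypothetical parameter values, for illustration only
    print(simulate_phi(a=1.0, b=2.0, A=0.5, B=0.5, mu_bar=1.0, P_s=1.0,
                       r=1.0, alpha=2.0, phi=0.0, P_a=1.0))
```

Multiplying the inequality by $Y$ instead of computing $B/Y$ keeps the event unchanged for $Y > 0$ and avoids a division by zero in the (probability-zero) case where the sampler returns exactly `0.0`.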

---

**My solution**

\begin{multline}
\Phi = \mathbb{P}\left[ a\left(\frac{b}{1+\exp\left(-\bar \mu\frac{P_s X}{r^\alpha}+\varphi\right)}-1\right) \geq A + \frac{B}{Y}\right]\mathbb{P}\left[X \geq \frac{P_ar^\alpha}{P_s}\right] \\ + \mathbb{P}\left[0 \geq A + \frac{B}{Y}\right] \mathbb{P}\left[X < \frac{P_ar^\alpha}{P_s}\right]
\end{multline}

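Given that $\mathbb{P}\left[0 \geq A+\frac{B}{Y}\right] = 0$ (which holds, for example, when $A \geq 0$ and $B > 0$, since $Y > 0$ almost surely), the second term vanishes: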

$$ \Phi = \mathbb{P}\left[a\left(\frac{b}{1+\exp\left(-\bar \mu\frac{P_s X}{r^\alpha}+\varphi\right)}-1\right) \geq A + \frac{B}{Y}\right] \exp\left(-\frac{P_ar^\alpha}{P_s}\right) $$

Let $D = A + a$ and $c = \frac{\bar{\mu}P_s}{r^{\alpha}}$.
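For reference, with these definitions the event inside the probability can be rearranged exactly, before any expectation is taken. This sketch assumes $ab > 0$, and uses that $Y > 0$ almost surely and that $-\bar\mu\frac{P_s X}{r^\alpha} + \varphi = \varphi - cX$:

$$
\begin{aligned}
a\left(\frac{b}{1+e^{\varphi}e^{-cX}}-1\right) \geq A + \frac{B}{Y}
&\iff \frac{ab}{1+e^{\varphi}e^{-cX}} \geq D + \frac{B}{Y} = \frac{DY+B}{Y}\\
&\iff Y \geq \frac{1}{ab}\,(DY+B)\left(1+e^{\varphi}e^{-cX}\right).
\end{aligned}
$$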

$$ \Phi = \mathbb{P} \left[Y \geq \frac{1}{ab}\mathbb{E}_Y[DY + B] \cdot \mathbb{E}_X [1+e^\varphi e^{-c X}] \right] \exp\left(-\frac{P_a r^\alpha}{P_s}\right) $$
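The step from the line above to the closed form below uses standard facts about the $\exp(1)$ distribution. For $Z \sim \exp(1)$, $t \geq 0$ and $c > -1$:

$$
\mathbb{P}[Z \geq t] = e^{-t}, \qquad \mathbb{E}[Z] = 1, \qquad \mathbb{E}\left[e^{-cZ}\right] = \int_0^\infty e^{-cz}e^{-z}\,dz = \frac{1}{1+c}.
$$

Hence $\mathbb{E}_Y[DY+B] = D+B$ and $\mathbb{E}_X[1+e^{\varphi}e^{-cX}] = 1 + \frac{e^{\varphi}}{1+c}$, so the probability above reduces to $\mathbb{P}[Y \geq k] = e^{-k}$ with $k = \frac{D+B}{ab}\left(1+\frac{e^{\varphi}}{1+c}\right)$, provided $k \geq 0$. The same survival function gives $\mathbb{P}\left[X \geq \frac{P_a r^\alpha}{P_s}\right] = \exp\left(-\frac{P_a r^\alpha}{P_s}\right)$.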

$$\Phi = \exp\left(-\frac{D+B}{ab}\left(1 + \frac{e^\varphi}{1+c}\right)\right)\exp\left(-\frac{P_a r^\alpha}{P_s}\right) $$
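To put numbers side by side with a simulation, the closed-form expression above can be evaluated directly. A minimal Python sketch follows; the function name `phi_closed_form` and the parameter values are hypothetical. It evaluates the formula exactly as written and does not, by itself, check whether the derivation is correct.

```python
import math


def phi_closed_form(a, b, A, B, mu_bar, P_s, r, alpha, phi, P_a):
    """Evaluate the closed-form expression for Phi exactly as derived above.

    Argument names are illustrative only; they mirror the symbols in the question.
    """
    D = A + a                       # D = A + a
    c = mu_bar * P_s / r**alpha     # c = mu_bar P_s / r^alpha
    k = (D + B) / (a * b) * (1.0 + math.exp(phi) / (1.0 + c))
    return math.exp(-k) * math.exp(-P_a * r**alpha / P_s)
```

For example, with $a=1$, $b=2$, $A=B=0.5$, $\bar\mu = P_s = r = 1$, $\varphi = 0$ and $P_a = 1$ (any $\alpha$, since $r = 1$), we get $k = 1.5$ and the function returns $e^{-2.5} \approx 0.0821$.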
