- #1

fog37

- 1,568

- 108

- TL;DR Summary

- Understand why L0 and L1 lead to the sparsest solution of a linear system of equations

Hello,

I think I understand the different types of norms that we can calculate for a vector living in a finite dimensional space. Each norm has its own benefits.

In 2D, for simplicity, all vectors with the same ##L2## norm are points on a circle (the isoline). All vectors with the same ##L1## are points on the diamond with vertices on the axes. and all vectors with the same ##L0## are points on

Question: what is the concept of regularization penalty that I have read about in regards to norms?

Question: in solving an underdetermined system of linear equations (more unknown that equations), why does looking looking for the ##l-1## norm promotes finding the sparsest solution?

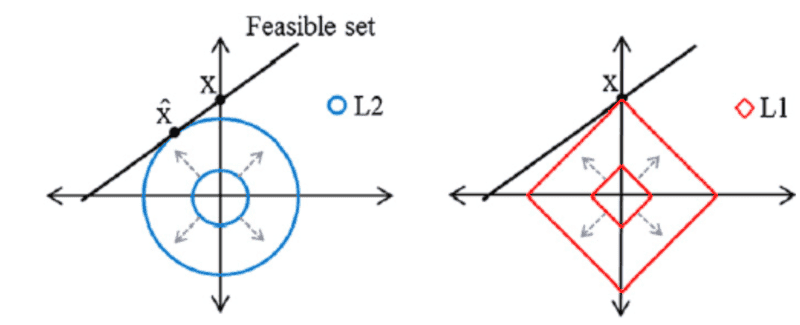

For example, in the figure below, the black straight line represents all possible and infinite solutions of the underdetermined system. We need to find a solution (which is where it the ball touches the black line) which is required to be sparse...The ##L1## solution is the sparsest since it has only one nonzero coordinate... But doesn't it all depend on the position of the black line of solutions? For example, the solution black line could be positioned in such a way that it perfectly overlaps one of the sides of the diamond. Or the black line could touch the circle (L2) where the solution is sparse if the black line was vertical or horizontal...I am confused...

The best norm, when looking for the sparsest solution of a system, is the L0 norm but it does not actually satisfy the property of being convex...

I think I understand the different types of norms that we can calculate for a vector living in a finite dimensional space. Each norm has its own benefits.

In 2D, for simplicity, all vectors with the same ##L2## norm are points on a circle (the isoline). All vectors with the same ##L1## are points on the diamond with vertices on the axes. and all vectors with the same ##L0## are points on

Question: what is the concept of regularization penalty that I have read about in regards to norms?

Question: in solving an underdetermined system of linear equations (more unknown that equations), why does looking looking for the ##l-1## norm promotes finding the sparsest solution?

For example, in the figure below, the black straight line represents all possible and infinite solutions of the underdetermined system. We need to find a solution (which is where it the ball touches the black line) which is required to be sparse...The ##L1## solution is the sparsest since it has only one nonzero coordinate... But doesn't it all depend on the position of the black line of solutions? For example, the solution black line could be positioned in such a way that it perfectly overlaps one of the sides of the diamond. Or the black line could touch the circle (L2) where the solution is sparse if the black line was vertical or horizontal...I am confused...

The best norm, when looking for the sparsest solution of a system, is the L0 norm but it does not actually satisfy the property of being convex...