Math Amateur

I am reading N. L. Carothers' book "Real Analysis".

I am focused on Chapter 3: Metrics and Norms.

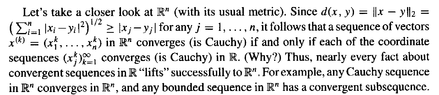

I need help with a remark by Carothers concerning convergent sequences in \(\displaystyle \mathbb{R}^n\). On page 47 Carothers writes the following:

[Attachment 9216: the relevant text from page 47]

In the above text from Carothers we read the following:

" ... it follows that a sequence of vectors \(\displaystyle x^{(k)} = (x_1^k, \dots, x_n^k)\) in \(\displaystyle \mathbb{R}^n\) converges (is Cauchy) if and only if each of the coordinate sequences \(\displaystyle (x_j^k)\) converges in \(\displaystyle \mathbb{R}\) ... "

My question is as follows:

Why exactly does it follow that a sequence of vectors \(\displaystyle x^{(k)} = (x_1^k, \dots, x_n^k)\) in \(\displaystyle \mathbb{R}^n\) converges (is Cauchy) if and only if each of the coordinate sequences \(\displaystyle (x_j^k)\) converges in \(\displaystyle \mathbb{R}\)?
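A sketch of the usual argument, assuming the Euclidean norm \(\|x\|_2 = \big(\sum_{i=1}^n x_i^2\big)^{1/2}\) on \(\mathbb{R}^n\) (the attached text may work with a different but equivalent norm), rests on two elementary inequalities that hold for every \(x = (x_1, \dots, x_n)\) and every \(j = 1, \dots, n\):

\[
|x_j| \le \|x\|_2 \le \sum_{i=1}^n |x_i| .
\]

The left inequality holds because \(x_j^2\) is one of the nonnegative terms in \(\sum_i x_i^2\); the right one holds because squaring \(\sum_i |x_i|\) gives \(\sum_i x_i^2\) plus nonnegative cross terms. Applying both to \(x^{(k)} - x\), where \(x = (x_1, \dots, x_n)\) is the candidate limit, gives

\[
|x_j^k - x_j| \le \|x^{(k)} - x\|_2 \le \sum_{i=1}^n |x_i^k - x_i| .
\]

If \(x^{(k)} \to x\), the left inequality forces \(x_j^k \to x_j\) for each \(j\). Conversely, if each coordinate sequence converges, say \(x_j^k \to x_j\), then with \(x = (x_1, \dots, x_n)\) the right-hand side is a sum of a fixed number \(n\) of terms, each tending to \(0\), so \(\|x^{(k)} - x\|_2 \to 0\). Replacing \(x^{(k)} - x\) by \(x^{(k)} - x^{(m)}\) gives the Cauchy version in exactly the same way.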

Help will be appreciated.

Peter
