
mathmari


MHB


Hey!

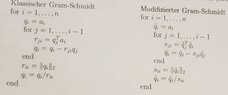

We have the following two versions of the Gram-Schmidt orthogonalization method:

View attachment 8762

Show that both algorithms are mathematically equivalent, i.e. $s_{ji}=r_{ji}$ for all $j\leq i$, and $\tilde{q}_i=q_i$ for $i=1, \ldots , n$ for the output vectors.
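Since the attachment is not reproduced here, the following is only a sketch under an assumption: that the first version is classical Gram-Schmidt, where $r_{ji}=q_j^T a_i$ is computed from the original column $a_i$, and the second is modified Gram-Schmidt, where $s_{ji}$ is computed from the partially orthogonalized vector. The function names `classical_gs` and `modified_gs` are hypothetical. The code compares both on one small full-rank matrix. This is a numerical sanity check, not a proof.

```python
import numpy as np

def classical_gs(A):
    """Classical Gram-Schmidt (assumed form): r_ji = q_j^T a_i uses the ORIGINAL column a_i."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    R = np.zeros((n, n))
    for i in range(n):
        v = A[:, i].copy()
        for j in range(i):
            R[j, i] = Q[:, j] @ A[:, i]   # projection coefficient of the original a_i
            v -= R[j, i] * Q[:, j]
        R[i, i] = np.linalg.norm(v)
        Q[:, i] = v / R[i, i]
    return Q, R

def modified_gs(A):
    """Modified Gram-Schmidt (assumed form): s_ji = q_j^T v uses the PARTIALLY reduced vector v."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    S = np.zeros((n, n))
    for i in range(n):
        v = A[:, i].copy()
        for j in range(i):
            S[j, i] = Q[:, j] @ v         # projection coefficient of the current v
            v -= S[j, i] * Q[:, j]
        S[i, i] = np.linalg.norm(v)
        Q[:, i] = v / S[i, i]
    return Q, S

# Small full-rank example (det = -2).
A = np.array([[1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0]])
Q1, R = classical_gs(A)
Q2, S = modified_gs(A)
print(np.allclose(R, S), np.allclose(Q1, Q2))  # True True
```

In exact arithmetic the two coincide. In floating point they differ by rounding, which is why `np.allclose` is used rather than exact equality.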

I have done the following:

The difference between the two algorithms lies in the inner for loop, i.e. in the definitions of $r_{ji}$ and $s_{ji}$ respectively, right?

If we show that these two are the same, then it follows that $\tilde{q}_i=q_i$, doesn't it?

But how can we show that $r_{ji}=s_{ji}$? Could you give me a hint?
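One possible line of attack, sketched under the same assumption as before (the attachment is not shown): classical Gram-Schmidt uses $r_{ji}=q_j^T a_i$, while modified Gram-Schmidt sets $v_i^{(1)}=a_i$, $s_{ji}=\tilde q_j^T v_i^{(j)}$ and $v_i^{(j+1)}=v_i^{(j)}-s_{ji}\tilde q_j$. The notation $v_i^{(j)}$ is introduced here for illustration. Use induction on $i$. For $i=1$ both algorithms give $a_1/\|a_1\|$. Now assume $\tilde q_k=q_k$ for all $k<i$, so these vectors are orthonormal. Unrolling the update gives

$$v_i^{(j)} = a_i - \sum_{k<j} s_{ki}\,\tilde q_k ,$$

hence, for $j<i$,

$$s_{ji} = \tilde q_j^T a_i - \sum_{k<j} s_{ki}\,\tilde q_j^T \tilde q_k = \tilde q_j^T a_i = q_j^T a_i = r_{ji},$$

because $\tilde q_j^T\tilde q_k=0$ for $k<j$. Consequently

$$v_i^{(i)} = a_i - \sum_{j<i} r_{ji}\, q_j ,$$

which is exactly the unnormalized vector of the classical algorithm, so $s_{ii}=\|v_i^{(i)}\|=r_{ii}$ and $\tilde q_i=q_i$.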

(Wondering)