BWV

Interesting paper here:

https://colala.bcs.rochester.edu/papers/piantadosi2018one.pdf

which using a closed-form solution to the logistic map m(z) = 4z(1−z) of

mk (θ) = sin2 [ 2k arcsin √ θ]

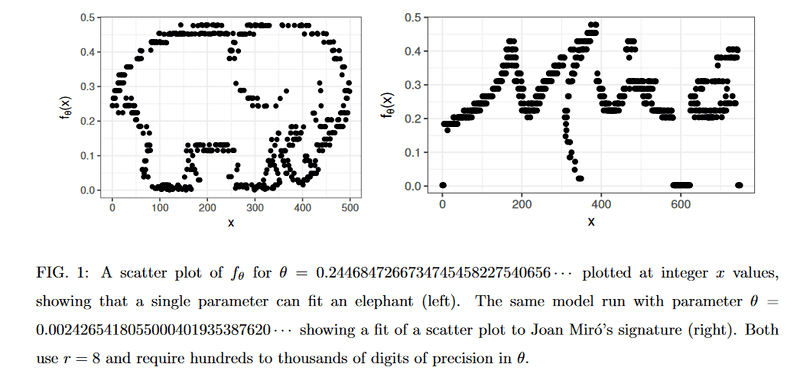

with a finely tuned parameter θ (extending to hundreds of decimal places) can fit just about any 2 dimensional shape such as:
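For anyone who wants to check the closed form numerically, here is a minimal sketch of my own (not code from the paper) that compares iterating the logistic map k times against the sin² formula. The function names and the test value θ = 0.3 are arbitrary choices for illustration.

```python
import math

def logistic_iterate(theta, k):
    """Apply m(z) = 4z(1 - z) to theta k times."""
    z = theta
    for _ in range(k):
        z = 4.0 * z * (1.0 - z)
    return z

def logistic_closed_form(theta, k):
    """Closed form for the k-th iterate: sin^2(2^k * arcsin(sqrt(theta)))."""
    return math.sin(2**k * math.asin(math.sqrt(theta))) ** 2

theta = 0.3  # arbitrary starting value in [0, 1]
for k in range(8):
    # The two columns should agree up to floating-point rounding.
    print(k, logistic_iterate(theta, k), logistic_closed_form(theta, k))
```

In double precision the two agree only for small k, since the map is chaotic and rounding error roughly doubles with each iteration. That is presumably why the fit needs θ to hundreds of decimal places: every extra data point consumes more digits of θ.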
