Calculuser

- 49

- 3

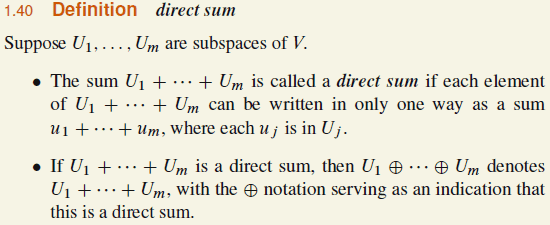

In Sheldon Axler's "Linear Algebra Done Right" (3rd edition), on page 21, the "internal direct sum" (which the author simply calls a "direct sum") is defined as follows:

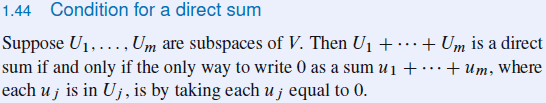

Following that, on page 23, there is a statement titled "Condition for a direct sum" that gives a condition for a sum of subspaces to be an internal direct sum. As far as I can tell, the proof only establishes the uniqueness part, which I understand, but I do not think it proves the statement itself:

Question 1: How can we prove that the following condition is sufficient for a sum of subspaces to be an internal direct sum: the only way to write the ##0## vector as a sum ##u_1+u_2+\dots+u_m##, with each ##u_i\in U_i##, is to take every ##u_i## equal to ##0##?
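
For subspaces of ##\mathbb{R}^n## the condition can be checked mechanically. A minimal sketch, assuming each ##U_i## is given by a list of linearly independent vectors (a basis); the helpers `rank` and `is_direct_sum` are hypothetical names, not from the book. Writing each ##u_i## as a combination of the basis of ##U_i##, the equation ##0 = u_1+\dots+u_m## becomes one linear equation in all the coefficients, and "only ##u_i = 0##" holds exactly when the pooled basis vectors are linearly independent:

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors, via exact Gaussian elimination."""
    if not vectors:
        return 0
    # Treat each vector as a row; the row rank equals the column rank.
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Clear column c in every other row.
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

def is_direct_sum(bases):
    """bases[i] is a basis (independent vectors) of the subspace U_i.

    0 = u_1 + ... + u_m, with u_i = sum_j c_ij * bases[i][j], has only the
    solution c_ij = 0 (hence every u_i = 0) iff the pooled vectors are
    linearly independent, i.e. their rank equals their count.
    """
    pooled = [v for basis in bases for v in basis]
    return rank(pooled) == len(pooled)

x_axis = [(1, 0, 0)]
y_axis = [(0, 1, 0)]
diagonal = [(1, 1, 0)]

print(is_direct_sum([x_axis, y_axis]))            # True
print(is_direct_sum([x_axis, y_axis, diagonal]))  # False: (1,0,0)+(0,1,0)-(1,1,0) = 0
```

Exact `Fraction` arithmetic is used so the rank test needs no floating-point tolerance; with NumPy one could use `numpy.linalg.matrix_rank` instead.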

Question 2: Is it possible for some of the subspaces ##U_1, U_2, \dots, U_m## to have elements in common other than the ##0## vector while their sum is still an internal direct sum? If not, why not?
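
A quick illustration, using hypothetical example subspaces chosen only for this sketch: if a nonzero vector ##w## lies in both ##U_i## and ##U_j## (##i \ne j##), then taking ##u_i = w##, ##u_j = -w## (a subspace is closed under scalar multiplication) and every other ##u_k = 0## writes ##0## as a sum with nonzero summands. Below, ##U_1## is the ##xy##-plane and ##U_2## the ##yz##-plane in ##\mathbb{R}^3##, which share ##w = (0,1,0)##:

```python
# Hypothetical example: U1 = xy-plane, U2 = yz-plane in R^3,
# which share the nonzero vector w = (0, 1, 0).
U1_basis = [(1, 0, 0), (0, 1, 0)]
U2_basis = [(0, 1, 0), (0, 0, 1)]

def combo(coeffs, basis):
    """Linear combination sum_j coeffs[j] * basis[j] of vectors in R^3."""
    return tuple(sum(c * v[k] for c, v in zip(coeffs, basis)) for k in range(3))

u1 = combo((0, 1), U1_basis)   # w = (0, 1, 0), an element of U1
u2 = combo((-1, 0), U2_basis)  # -w = (0, -1, 0), an element of U2
total = tuple(a + b for a, b in zip(u1, u2))

print(u1, u2)  # (0, 1, 0) (0, -1, 0)
print(total)   # (0, 0, 0): the zero vector written with nonzero summands
```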