- #1

kostoglotov

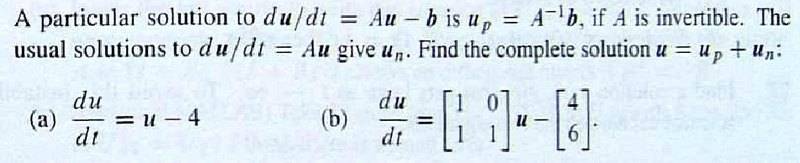

The problem is here, I'm trying to solve (b):

imgur link: http://i.imgur.com/ifVm57o.jpg

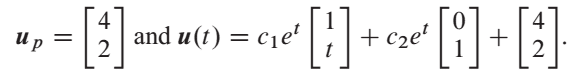

and the text solution is here:

imgur link: http://i.imgur.com/qxPuMpu.png

I understand why there is a term with [itex]cte^t[/itex] in there: the matrix A has a repeated (double-root) eigenvalue. What I don't understand is where the (apparent) second eigenvector, [itex]\begin{bmatrix}1\\ t\end{bmatrix}[/itex], is coming from.

I gave my answer as [itex]\vec{u} = \begin{bmatrix}4\\ 2\end{bmatrix} + c_1e^t\begin{bmatrix}0\\ 1\end{bmatrix}+c_2te^t\begin{bmatrix}0\\ 1\end{bmatrix}[/itex].

My answer works, but so does the text's answer, and the text's is more complete. But where did that second, distinct eigenvector come from?
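One possible way to see where [itex]\begin{bmatrix}1\\ t\end{bmatrix}[/itex] could come from is sketched below. The matrix itself is only in the linked image, so this takes [itex]A = \begin{bmatrix}1 & 0\\ 1 & 1\end{bmatrix}[/itex] as an assumption; it is a guess that fits the repeated eigenvalue 1 (the [itex]e^t[/itex] factor) and the eigenvector [itex]v = \begin{bmatrix}0\\ 1\end{bmatrix}[/itex] used above. The symbol [itex]w[/itex] is introduced here purely for illustration. The idea is to try a homogeneous solution of the form [itex]e^t(w + tv)[/itex] and see what [itex]w[/itex] has to satisfy.

```latex
% Assumption (not stated in the post): A = [1 0; 1 1], repeated eigenvalue 1,
% single eigenvector v = (0, 1)^T. Substitute u = e^{t}(w + t v) into u' = A u.
\begin{align*}
\frac{d}{dt}\left[e^{t}(w + t v)\right] &= e^{t}(w + t v) + e^{t} v, \\
A\,e^{t}(w + t v) &= e^{t}(A w + t v) \qquad \text{since } A v = v, \\
\Rightarrow\quad (A - I)\,w &= v .
\end{align*}
% Solve (A - I) w = v with the assumed A, then assemble the solution.
\begin{gather*}
\begin{bmatrix}0 & 0\\ 1 & 0\end{bmatrix}
\begin{bmatrix}w_1\\ w_2\end{bmatrix}
= \begin{bmatrix}0\\ 1\end{bmatrix}
\;\Rightarrow\; w_1 = 1,\ w_2 \text{ free; take } w = \begin{bmatrix}1\\ 0\end{bmatrix}, \\
e^{t}(w + t v)
= e^{t}\left(\begin{bmatrix}1\\ 0\end{bmatrix} + t\begin{bmatrix}0\\ 1\end{bmatrix}\right)
= e^{t}\begin{bmatrix}1\\ t\end{bmatrix}.
\end{gather*}
```

Under that assumption, [itex]\begin{bmatrix}1\\ t\end{bmatrix}[/itex] would not be a second eigenvector at all. It would be a generalized eigenvector [itex]w[/itex] plus [itex]t[/itex] times the one true eigenvector, with [itex]w[/itex] there to absorb the extra [itex]e^t v[/itex] that the product rule produces.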
