Devin-M

In this MIT course video, the lecturer demonstrates on an optical table that, in certain cases, when destructive interference occurs the light goes back to the laser (skip to 5:22 in the video below).

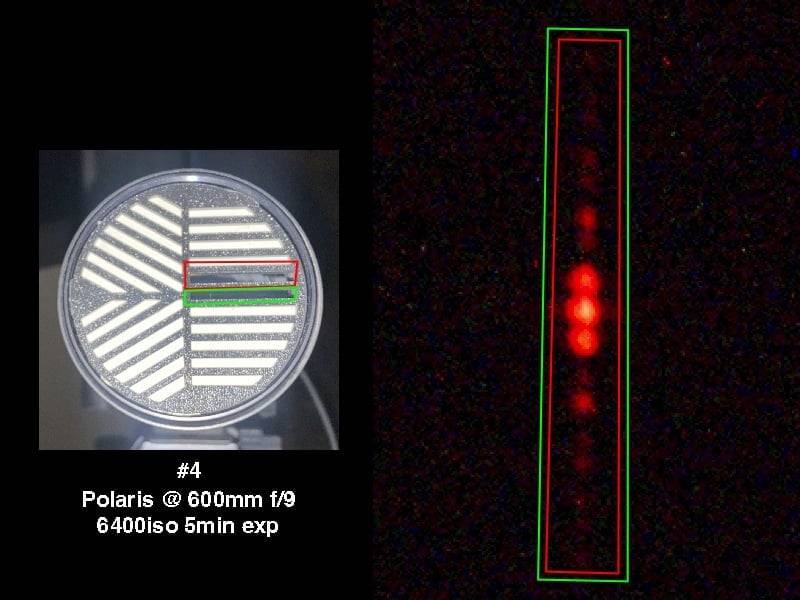

My question: when I do a double-slit experiment with starlight by placing two slits in front of my objective lens, as shown below, is the light in the regions of destructive interference being reflected back to the star to conserve energy?

*An H-alpha narrowband filter (656 nm ± 3 nm) was used in front of the sensor.
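One way to frame the energy-conservation part of the question is to integrate the far-field two-slit pattern over the focal plane and compare it with two slits whose light does not interfere. The sketch below is a minimal numerical check under idealized assumptions: Fraunhofer (far-field) diffraction, small angles, two equal rectangular slits, and monochromatic 656 nm light. `SLIT_WIDTH` and `SLIT_SEPARATION` are hypothetical values, not the dimensions of the mask in the post. In this model the dark fringes are offset by bright fringes reaching four times the single-slit peak, so the total power on the focal plane matches the no-interference case. The model does not account for the light stopped by the opaque part of the mask.

```python
import math

WAVELENGTH = 656e-9       # H-alpha wavelength from the post (m)
SLIT_WIDTH = 1.0e-3       # hypothetical slit width a (m), assumption
SLIT_SEPARATION = 5.0e-3  # hypothetical centre-to-centre separation d (m), assumption


def sinc_sq(x: float) -> float:
    """(sin x / x)^2, handling the removable singularity at x = 0."""
    if abs(x) < 1e-12:
        return 1.0
    s = math.sin(x) / x
    return s * s


def single_slit(u: float) -> float:
    """Far-field intensity of ONE slit vs. u = sin(theta), peak normalised to 1."""
    beta = math.pi * SLIT_WIDTH * u / WAVELENGTH
    return sinc_sq(beta)


def double_slit(u: float) -> float:
    """Coherent two-slit intensity |E1 + E2|^2 = 4 cos^2(delta) times the slit envelope."""
    delta = math.pi * SLIT_SEPARATION * u / WAVELENGTH
    return 4.0 * math.cos(delta) ** 2 * single_slit(u)


def integrate(f, lo: float, hi: float, n: int) -> float:
    """Composite trapezoidal rule with n intervals."""
    h = (hi - lo) / n
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, n):
        total += f(lo + i * h)
    return total * h


if __name__ == "__main__":
    u_max = 0.04   # integrate over |sin(theta)| <= 0.04 (small-angle region)
    n = 200_000    # a few hundred samples per fringe for these parameters
    p_fringes = integrate(double_slit, -u_max, u_max, n)
    p_no_interference = 2.0 * integrate(single_slit, -u_max, u_max, n)
    print(f"power with interference : {p_fringes:.6e}")
    print(f"two slits, no cross term: {p_no_interference:.6e}")
    print(f"ratio                   : {p_fringes / p_no_interference:.4f}")
```

With these assumed dimensions the printed ratio should be very close to 1: the interference term only moves energy from the dark fringes into the bright ones within the focal plane.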
