tworitdash

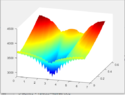

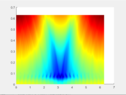

I have a likelihood function with one global minimum but many local minima. I have attached figures of the function in 2D (it has two parameters): a 3D view and a surface view. The local minima are clearly visible in the 3D view. I know that many global optimizers can locate the global minimum of such a function. However, I would like to know which basic optimization principles I could use for a problem like this, ideally ones that I can derive and implement myself. I am also open to Bayesian approaches that give a posterior rather than just a point estimate. I have tried MCMC-type sampling, but it is computationally expensive. The number of parameters may increase later.
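To make the setting concrete, here is a minimal sketch of one basic, self-implementable principle of this kind: multi-start local search, where plain gradient descent is run from many random starting points and the lowest result is kept. Everything in it is an assumption for illustration. The 2D Rastrigin function (`toy_nll`) only stands in for the actual likelihood, and the search box, step size, iteration count, and number of starts are hypothetical values tuned to this toy function.

```python
import numpy as np

def toy_nll(theta):
    """Rastrigin function, a stand-in objective with many local minima.
    Its global minimum is 0 at the origin. Not the actual likelihood."""
    theta = np.asarray(theta, dtype=float)
    return 10.0 * theta.size + np.sum(theta**2 - 10.0 * np.cos(2.0 * np.pi * theta))

def numerical_grad(f, theta, h=1e-5):
    """Central finite-difference gradient, so no analytic derivative is needed."""
    grad = np.zeros_like(theta)
    for i in range(theta.size):
        step = np.zeros_like(theta)
        step[i] = h
        grad[i] = (f(theta + step) - f(theta - step)) / (2.0 * h)
    return grad

def gradient_descent(f, theta0, lr=0.002, n_iter=200):
    """Fixed-step gradient descent; it converges to the local minimum
    of whichever basin the starting point lies in."""
    theta = np.array(theta0, dtype=float)
    for _ in range(n_iter):
        theta = theta - lr * numerical_grad(f, theta)
    return theta

def multi_start(f, lower, upper, n_starts=200, seed=0):
    """Run local descent from uniformly random starts inside the box
    [lower, upper] and return the best end point plus all end points."""
    rng = np.random.default_rng(seed)
    starts = rng.uniform(lower, upper, size=(n_starts, len(lower)))
    finals = np.array([gradient_descent(f, s) for s in starts])
    values = np.array([f(t) for t in finals])
    best = np.argmin(values)
    return finals[best], values[best], finals, values

best_theta, best_value, finals, values = multi_start(
    toy_nll, lower=[-2.0, -2.0], upper=[2.0, 2.0]
)
print("best parameters:", best_theta)
print("best objective value:", best_value)
```

The step size has to be small compared with the inverse of the largest curvature of the objective, otherwise the descent overshoots. The number of starts needed to hit the global basin grows quickly with the number of parameters, so this is only a baseline. The collection of end points in `finals` shows where the local minima are, but it is not a posterior.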