mitchell porter said:

Something that troubles me, is that every explanation we have for the Koide formula seems to be at odds with Higgs criticality, in that the latter suggests that physics is just standard model up to high scales, whereas the explanations for Koide involve new physics at low scales. See Koide's remarks from January. He says one may think of the formula as holding approximately among running masses, or exactly among pole masses. If we focus just on well-defined field theories that have been written out, they all involve new physics (e.g. Koide's yukawaon fields, the vevs of which contribute to the SM yukawas). In the case of the Sumino mechanism for the pole masses, there are family gauge bosons which are supposed to show up by 10^4 TeV, i.e. 10^7 GeV. If we focus just on the yukawaons... Koide seems to have argued that new physics should show around 10^12 GeV. I would be a little happier with that, it's in the vicinity of the lowest-scale explanations of Higgs criticality.

But for this reason, I also wonder if we could do with a new, infrared perspective on the Higgs mechanism.

The most recent paper by Arkani-Hamed et al actually provides such a perspective, but only for gauge boson mass, not for fermion mass.
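For readers who want to see what "holding among pole masses" means numerically, here is a minimal sketch that evaluates the Koide ratio Q = (m_e + m_mu + m_tau) / (sqrt(m_e) + sqrt(m_mu) + sqrt(m_tau))^2 for the charged leptons. The mass values are approximate PDG pole masses that I am supplying as an assumption (they are not quoted in the post above), and the function name `koide_ratio` is just an illustrative choice.

```python
from math import sqrt

# Charged-lepton pole masses in MeV. These are approximate PDG values,
# supplied here as an assumption; they are not taken from the thread.
M_E, M_MU, M_TAU = 0.51099895, 105.6583755, 1776.86


def koide_ratio(m1, m2, m3):
    """Return Q = (m1 + m2 + m3) / (sqrt(m1) + sqrt(m2) + sqrt(m3))**2."""
    return (m1 + m2 + m3) / (sqrt(m1) + sqrt(m2) + sqrt(m3)) ** 2


q = koide_ratio(M_E, M_MU, M_TAU)
# Koide's rule says Q should equal 2/3; print the value and the deviation.
print(f"Q = {q:.6f}, Q - 2/3 = {q - 2 / 3:+.2e}")
```

With these inputs the ratio comes out very close to 2/3, which is the whole puzzle: running masses at a high scale would shift the inputs and spoil the agreement somewhat.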

Simply relying on new physics, in and of itself, isn't very troubling, because this is an area where new physics wouldn't contradict the Standard Model. It would merely fill in a gap where the Standard Model provides no explanation and instead resorts to determining the values of constants experimentally, without a theory.

Furthermore, I would say that, of the people who are familiar with the Standard Model, almost nobody thinks that the values of its experimentally measured constants are really arbitrary. Feynman said so in QED and a couple of his other books. I've seen at least a couple of other big name physicists reiterate that hypothesis, although I don't have references readily at hand. A few folks think that there is no deeper theory, and many don't think about the issue at all, but the vast majority of people who understand it believe in their heart of hearts that there is a deeper structure with some mechanism out there to find that we just haven't yet grasped.

But, the trick is how to come up with BSM physics that doesn't contradict the SM and reasonable inferences from it to explain these constants. How can we construct new physics to explain the Standard Model constant values in some sector that doesn't screw up anything else?

The go-to explanation the last two times we had a jumble of constants that needed to be explained - the Periodic Table and the Particle Zoo - ended up being preon-like theories that cut through a mass of fundamental constants by showing that they were derived from a smaller number of more fundamental components. And, one could conceive of a theory that could do that - I've seen just one reasonably successful effort at doing so, by a Russian theoretical physicist, V. N. Yershov - but the LHC bounds on compositeness (which admittedly have some model dependence) are very, very stiff. Preons wouldn't screw anything else up, although they might require a new boson to carry an "ultra-strong force" that binds the preons.

I am not very impressed with the yukawaon approach, or Sumino's mechanism. They are baroque and not very well motivated and, as you note, involve low scale new physics where it is hard to believe that we could have missed anything so profound.

As you know, I am on record as thinking that Koide's rule and the quark mass hierarchy emerge dynamically through a mechanism mediated by the W boson, which is very clean in the case of the charged leptons with only three masses to balance and a situation where a W boson can turn any one of the three into any one of the remaining two (conservation of mass-energy permitting). The situation is messier with the quarks, where any given quark can be transformed via the W into one of three other kinds of quarks (but not five other kinds of quarks in one hop), and where there is not a quark equivalent to lepton universality due to the structure of the CKM matrix.

In this analysis, the Higgs vev is out there setting the overall scale of the fundamental fermion and boson masses. The Higgs boson mass is perhaps most easily understood as a gap-filling, process-of-elimination result after all other fundamental boson masses have been set. The W boson plays a key role in divvying up the overall mass allowed to the fermion sector among the respective quarks, and separately among the respective charged leptons (and perhaps among the neutrinos as well - hard to know). Maybe it even plays a role in divvying up the overall mass allowed to the fermion sector between quarks and leptons (as suggested in some extended Koide rule analysis).

That description, of course, is in some ways heuristic. It still needs to produce a model in which the Higgs boson couples to each fundamental particle of the Standard Model (except photons, gluons and possibly also neutrinos) in proportion to the rest mass of each. So the focus on the Higgs yukawas and the focus on the W boson interactions both have to be true to some extent in any theory. It is just a matter of which perspective provides "the most information for free", which is what good theories do.

Humans like to impute motives to processes even when they are in equilibrium and interdependent. We like to say either that the Higgs boson causes fundamental particle masses, or that the W boson does, or that fundamental particle masses are tied to their self-interaction plus an excitation factor for higher generations, or what have you.

But, these anthropomorphic imputations of cause and effect and motive may be basically category errors, in the same way that it really isn't accurate to say that the length of the hypotenuse of a right triangle is caused by the length of its other two sides. Yes, there is an equation that relates the lengths of the three sides of a right triangle to each other, and yes, knowing any two, you can determine the third, but it isn't really correct to say that there are lines of causation that run in any particular direction (or alternatively, you could say that the lines of causation run both ways and are mutual). I suspect that the relationships between the Standard Model constants are going to be something like that, which is just the kind of equation that Koide's rule involves.

Of course, this dynamic balancing hypothesis I've suggested is hardly the only possible way to skin the cat. (Is it not PC to say that anymore?).

Indeed, from the point of view of natural philosophy and just good hypothesis generation, one way to identify a really good comprehensive and unified theory is that its predictions are overdetermined such that there are multiple independent ways to accomplish the same result that must necessarily all be true for the theory to hold together.

In other words, for example, there really ought to be more than one more or less independent way to determine the Higgs boson mass from first principles in a really good theory. So: (1) maybe one way to determine the Higgs boson mass is to start at a GUT scale where it has a boundary mass value of zero in a metastable universe and track its beta function back to its pole mass (also here), and (2) another way ought to be to start with half of the square of the Higgs vev and then subtract out the squares of the W and Z boson masses and take the square root, and (3) another way ought to be with the fine tuned kind of calculations that give rise to the "hierarchy problem", and (4) maybe another looks at the relationship between the top quark mass, the W boson mass and the Higgs boson mass in electroweak theory, and (5) another might look to self-interactions via fundamental forces (also here) as establishing the first generation and fundamental boson masses and come up with a way of seeing the second and third generations as the only possible mathematically consistent excitations of first generation masses derived from self-interactions (somewhat along the same lines is this global mass trend line), and (6) another might start with half of the Higgs vev as a "tree level" value of the "bare" Higgs boson mass and make high loop corrections (something similar is found here), and (7) maybe there is a deeper theory that gives significance to the fact that the measured Higgs boson mass is very nearly the mass that minimizes the second loop corrections necessary to convert the mass of a gauge boson from an MS scheme to a pole mass scheme, (8) maybe there is something related to the fact that the Higgs boson mass appears to maximize its decay rate into photons, and (9) maybe there ought to be some other way as well that starts with constraints particular to massive spin-0 even parity objects in general, using the kind of methodology in the paper below, then limits that parameter space using measured values of the Standard Model coupling constants and maybe a gravitational coupling constant, such that any quark mass (since quarks interact with all three Standard Model forces plus gravity) could be used to fix its value subject to those constraints.

"Magically," maybe all nine of those methods might produce the same Higgs boson mass prediction despite not having obvious derivations from each other. The idea is not that any of (1) to (9) are actually correct descriptions of the real world source of the Higgs boson mass, but to illustrate what a correct overdetermined theory might "feel" like.
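To make the flavor of this concrete, here is a rough numerical sketch of the two simplest recipes, (2) and (6). The input values for the Higgs vev and the W, Z and Higgs masses are approximate measured values that I am plugging in as assumptions, and the function names are purely illustrative. This is numerology for illustration, not a derivation.

```python
from math import sqrt

# Approximate measured inputs in GeV (assumptions of this sketch).
HIGGS_VEV = 246.22
M_W = 80.38
M_Z = 91.19
M_H_MEASURED = 125.1


def higgs_from_vev_minus_gauge(vev, m_w, m_z):
    """Recipe (2): sqrt(vev**2 / 2 - m_w**2 - m_z**2)."""
    return sqrt(vev**2 / 2 - m_w**2 - m_z**2)


def higgs_tree_level(vev):
    """Recipe (6): half of the Higgs vev, before any loop corrections."""
    return vev / 2


estimate_2 = higgs_from_vev_minus_gauge(HIGGS_VEV, M_W, M_Z)
estimate_6 = higgs_tree_level(HIGGS_VEV)

# Compare each estimate with the measured Higgs boson mass.
for label, value in (("(2)", estimate_2), ("(6)", estimate_6)):
    print(f"{label}: {value:.2f} GeV, off by {value - M_H_MEASURED:+.2f} GeV")
```

Both land within a couple of GeV of the measured mass without any loop corrections, which is the kind of coincidence that makes the overdetermined picture tempting, although on its own it proves nothing.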

There might be nine independent correct ways to come up with a particular fundamental mass that all have to be true for the theory to hold together, making these values the only possible ones that a consistent TOE adhering to a handful of elementary axioms could have. That would be sort of the polar opposite of a many universes scenario where every physical constant is basically random input into some Creator God's computer simulation and we just ended up living in one of them.

In particular, I do think that at least some of the approaches to an overdetermined Higgs mechanism may indeed involve something that makes sense on an infrared scale, rather than relying on new particles or forces at a UV scale as so much of the published work tends to do.

Relations like L & CP and Koide's rule and the fact that the Higgs mass is such that it doesn't require UV completion to be unitary and analytic up to the GUT scale and the fact that the top quark width fits the SM prediction as do the Higgs boson branching fractions and the electron g-2 all point to a conclusion that the SM is or very nearly is a complete set of fundamental particles.

Even the muon g-2 discrepancy is pretty small - the measured value and the computed one (0.0011659209 versus 0.0011659180) agree to within a few parts per 1,000,000, so there can't be that many missing particles contributing loops that are missing from the Standard Model computation. We are talking about a discrepancy of 29 * 10^-10 in the value. Maybe that difference really is three sigma (and not just a case where somebody has underestimated one of the systematic errors in the measurement by a factor O(1) or O(10) or so) and something that points at BSM physics, but it sure doesn't feel like we are on the brink of discovering myriad new BSM particles in the UV, as null search after null search at the LHC seems to confirm.
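The arithmetic behind that comparison is easy to check. The sketch below uses only the two values quoted above; the function name `discrepancy` is an illustrative choice.

```python
# Muon anomalous magnetic moment values as quoted in the post.
A_MU_MEASURED = 0.0011659209
A_MU_PREDICTED = 0.0011659180


def discrepancy(measured, predicted):
    """Return (absolute difference, difference relative to the prediction)."""
    diff = measured - predicted
    return diff, diff / predicted


diff, rel = discrepancy(A_MU_MEASURED, A_MU_PREDICTED)
# Absolute gap in units of 10^-10, and relative gap in parts per million.
print(f"absolute: {diff / 1e-10:.1f} x 10^-10")
print(f"relative: {rel * 1e6:.2f} parts per million")
```

So the absolute gap is the 29 * 10^-10 mentioned above, and as a fraction of the value itself it is a couple of parts per million.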

Too many of the processes we measure in HEP are sensitive to the global content of the model (including the UV part to very high scales, given the precision of our measurements), because so many of the observables are functions of all possible ways that something could happen, for us to be missing something really big while we fail to see BSM effects almost anywhere despite doing lots and lots and lots of experimental confirmations of every conceivable kind.

Also FWIW, the latter paper that you reference (79 pages long) has the following abstract:

Scattering Amplitudes For All Masses and Spins

Nima Arkani-Hamed, Tzu-Chen Huang, Yu-tin Huang

(Submitted on 14 Sep 2017)

We introduce a formalism for describing four-dimensional scattering amplitudes for particles of any mass and spin. This naturally extends the familiar spinor-helicity formalism for massless particles to one where these variables carry an extra SU(2) little group index for massive particles, with the amplitudes for spin S particles transforming as symmetric rank 2S tensors. We systematically characterise all possible three particle amplitudes compatible with Poincare symmetry. Unitarity, in the form of consistent factorization, imposes algebraic conditions that can be used to construct all possible four-particle tree amplitudes. This also gives us a convenient basis in which to expand all possible four-particle amplitudes in terms of what can be called "spinning polynomials". Many general results of quantum field theory follow the analysis of four-particle scattering, ranging from the set of all possible consistent theories for massless particles, to spin-statistics, and the Weinberg-Witten theorem. We also find a transparent understanding for why massive particles of sufficiently high spin can not be "elementary". The Higgs and Super-Higgs mechanisms are naturally discovered as an infrared unification of many disparate helicity amplitudes into a smaller number of massive amplitudes, with a simple understanding for why this can't be extended to Higgsing for gravitons. We illustrate a number of applications of the formalism at one-loop, giving few-line computations of the electron (g-2) as well as the beta function and rational terms in QCD. "Off-shell" observables like correlation functions and form-factors can be thought of as scattering amplitudes with external "probe" particles of general mass and spin, so all these objects--amplitudes, form factors and correlators, can be studied from a common on-shell perspective.

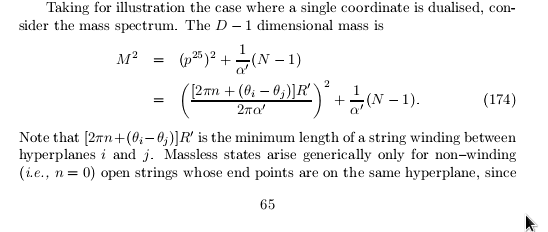

but let's say that it is just to test how compatible Koide formula is. Remember that the standard formula is m_0(1+\mu_k)^2, with conditions \sum \mu_k = 0, \sum (\mu_k^2 - 1) =0. Here, for the special case of three parallel branes, we have from the construction that \lambda_3 = \lambda_2+\lambda_1. I am not sure of how freely we can change the sign of a distance between branes; the formula apparently allows for it but it would need more discussion, so let's simply investigate the case for wrapping n_3=0, so that we can freely flip \lambda_3 \to - \lambda_3 and grant \lambda_3 + \lambda_2+\lambda_1 =0, and let's put the other two wrappings at the same level n=1. With this, Koide formula should be
