See the passage attached below.

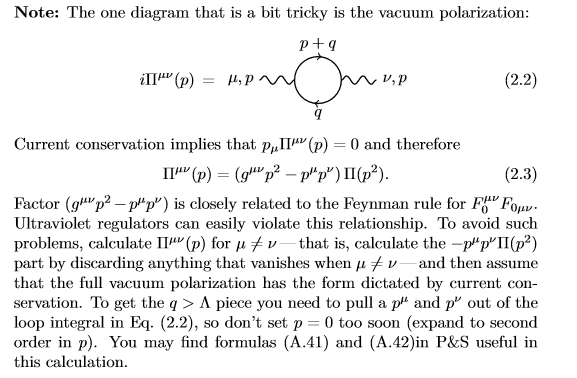

Consider the 1-loop vertex correction (cf. p. 2 of http://bolvan.ph.utexas.edu/~vadim/classes/2012f/vertex.pdf) and vacuum polarization diagrams in QED. A simple UV regulator that makes the integrals for the amplitude easy to evaluate is the following prescription: take an arbitrary high-energy cutoff ##\Lambda_0## for a theory ##\mathcal{L}_0##, introduce a second cutoff ##\Lambda < \Lambda_0##, compute the corrections to the amplitude from loop momenta ##q > \Lambda##, and absorb those corrections into the effective Lagrangian ##\mathcal{L}_{\Lambda}## as running couplings of the local field operators corresponding to the corrected diagrams.

In the case of the vertex correction diagram, the ##q > \Lambda## regulator clearly breaks Lorentz invariance. In the case of the vacuum polarization diagram, it breaks gauge invariance, because there we integrate over charged fermion momenta, whereas in the vertex correction we integrate only over photon momenta.

What I don't understand is why, in the first case, the lack of Lorentz invariance in the regulator poses no problem for the calculation of the amplitude, and everything goes through with the simple Taylor expansion method of evaluating the loop, whereas in the second case the lack of gauge invariance does pose a problem if one uses this regulator.

As I understand it, this regulator generates a renormalized mass term for the photon. However, I understand neither where that term comes from nor why it poses a problem for the amplitude itself, since one can go ahead and calculate it using the same Taylor expansion method as for the other diagram.
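
To make the statement concrete, here is a schematic version of what I believe is the standard form of the claim. The constant ##c## and the exact cutoff dependence are placeholders I am assuming for illustration, not something I have computed. Gauge invariance (the Ward identity) requires the vacuum polarization to be transverse:

$$
q_\mu \Pi^{\mu\nu}(q) = 0
\quad\Longrightarrow\quad
\Pi^{\mu\nu}(q) = \left(q^2 g^{\mu\nu} - q^\mu q^\nu\right)\Pi(q^2),
$$

whereas a hard momentum cutoff generically leaves an extra non-transverse piece,

$$
\Pi^{\mu\nu}_{\text{cutoff}}(q) = \left(q^2 g^{\mu\nu} - q^\mu q^\nu\right)\Pi(q^2) + c\,e^2\Lambda^2\, g^{\mu\nu} + \dots,
$$

which does not vanish at ##q = 0## and so would correspond to a term of the form ##\tfrac{1}{2} m_\gamma^2 A_\mu A^\mu## with ##m_\gamma^2 \propto e^2 \Lambda^2## in ##\mathcal{L}_{\Lambda}##.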

Could someone explain this? Thanks in advance!
