- #1

Math Amateur

I am reading D. J. H. Garling's book: "A Course in Mathematical Analysis: Volume II: Metric and Topological Spaces, Functions of a Vector Variable" ... ...

I am focused on Chapter 11: Metric Spaces and Normed Spaces ... ...

I need some help with an aspect of the proof of Theorem 11.4.1 ...

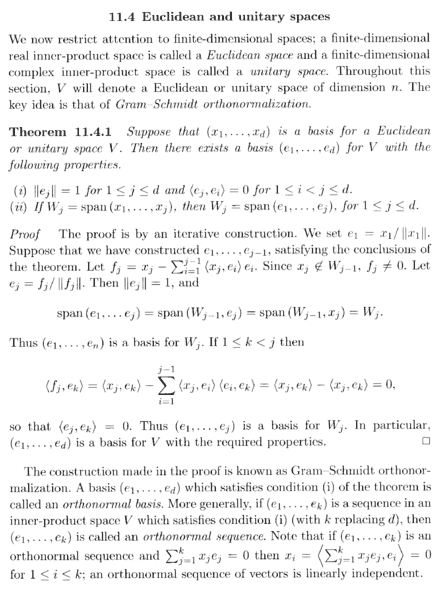

Garling's statement and proof of Theorem 11.4.1 reads as follows:

In the above proof by Garling we read the following:

" ... ... Let ##f_j = x_j - \sum_{ i = 1 }^{ j-1 } \langle x_j , e_i \rangle e_i##. Since ##x_j \notin W_{ j-1 }, f_j \neq 0##.

Let ##e_j = \frac{ f_j }{ \| f_j \| }## . Then ##\| e_j \| = 1## and

##\text{ span } ( e_1, \ ... \ ... \ e_j ) = \text{ span } ( W_{ j - 1 } , e_j ) = \text{ span }( W_{ j - 1 } , x_j ) = W_j##

... ... "
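To convince myself that the construction at least behaves as claimed, here is a small numerical sanity check in Python. It is only a sketch under my own assumptions and not a proof: the helper names `dot`, `gram_schmidt` and `rebuild`, the restriction to real vectors with the standard dot product, and the three sample vectors are all mine, not Garling's. The check confirms that ##x_j## can be rebuilt from ##e_1, \ldots, e_j## (so ##x_j \in \text{span}(W_{j-1}, e_j)##), while ##e_j## is by construction a combination of ##x_j## and ##e_1, \ldots, e_{j-1}##.

```python
import math

def dot(u, v):
    # Standard real inner product <u, v>.
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(xs):
    # For each x_j: f_j = x_j - sum_{i<j} <x_j, e_i> e_i, then e_j = f_j / ||f_j||.
    es, fs = [], []
    for x in xs:
        coeffs = [dot(x, e) for e in es]  # <x_j, e_i> for i < j
        f = list(x)
        for c, e in zip(coeffs, es):
            f = [fi - c * ei for fi, ei in zip(f, e)]
        norm = math.sqrt(dot(f, f))
        if norm < 1e-12:
            # f_j = 0 would mean x_j already lies in W_{j-1}.
            raise ValueError("vectors are not linearly independent")
        fs.append(f)
        es.append([fi / norm for fi in f])
    return es, fs

# Hypothetical sample data: three linearly independent vectors in R^3.
xs = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
es, fs = gram_schmidt(xs)

def rebuild(j):
    # x_j = ||f_j|| e_j + sum_{i<j} <x_j, e_i> e_i, a combination of e_1..e_j.
    norm = math.sqrt(dot(fs[j], fs[j]))
    out = [norm * c for c in es[j]]
    for e in es[:j]:
        c = dot(xs[j], e)
        out = [o + c * ei for o, ei in zip(out, e)]
    return out

for j in range(len(xs)):
    # Each x_j is recovered from e_1..e_j up to rounding error.
    assert all(abs(a - b) < 1e-9 for a, b in zip(rebuild(j), xs[j]))
```

This only tests one example, of course, so it does not settle the general claim.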

Can someone please demonstrate rigorously how/why ##f_j = x_j - \sum_{ i = 1 }^{ j-1 } \langle x_j , e_i \rangle e_i##

and

##e_j = \frac{ f_j }{ \| f_j \| }## imply that ##\text{ span } ( e_1, \ ... \ ... \ e_j ) = \text{ span } ( W_{ j - 1 } , e_j ) = \text{ span }( W_{ j - 1 } , x_j ) = W_j##

Help will be much appreciated ...

Peter
