Summary: How does a cell of SRAM work?

I have a computer science minor, and we have been given the task of finding out the differences between SRAM and DRAM. Simple enough? I thought so, until I got into the circuitry of an SRAM cell and became interested in how it stores, reads and writes data. Unfortunately, I could not find a good website that explains this properly.

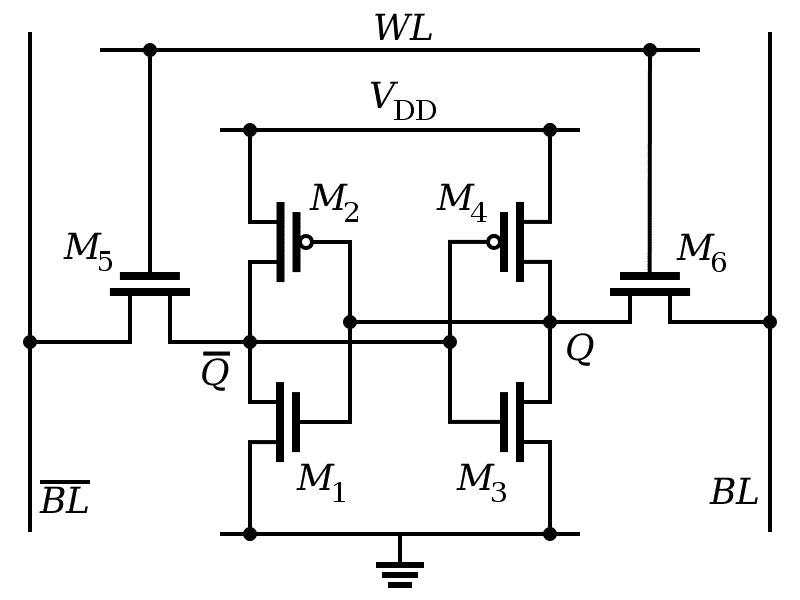

The diagram, taken from Wikipedia, is this one:

According to Quora, M5 and M6 are the access transistors, M2 and M4 are the pull-up transistors, and M1 and M3 are the pull-down transistors. WL is probably the "word line" (I read this somewhere else), which has to be high to allow a read or write operation.
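To make those roles concrete, here is a minimal logic-level sketch in Python. It assumes idealized digital behavior: each pull-up/pull-down pair acts as an inverter, the two inverters are cross-coupled so each holds the other's input, and WL high connects the two storage nodes to the bit lines. The names `SramCell`, `settle` and `write` are hypothetical, and this is not a transistor-level simulation.

```python
# Idealized logic-level model of a 6T SRAM cell (hypothetical names, not a circuit simulation).
# Two cross-coupled inverters (one pull-up + one pull-down each) hold Q and Q-bar;
# the access transistors, gated by WL, connect those nodes to BL and BL-bar.


class SramCell:
    def __init__(self, q: int = 0) -> None:
        self.q = q          # storage node Q
        self.q_bar = 1 - q  # complementary node, driven by the other inverter

    def settle(self) -> None:
        # Each inverter drives its output to the complement of its input.
        # With the loop closed, only (Q, Q-bar) = (0, 1) or (1, 0) is stable,
        # so the cell keeps its value as long as power is applied.
        self.q_bar = 1 - self.q
        self.q = 1 - self.q_bar

    def write(self, wl: int, value: int) -> None:
        # Assumption: when WL is high, the write drivers put `value` on BL and its
        # complement on BL-bar, strongly enough to overpower the inverters.
        if wl:
            self.q = value
            self.q_bar = 1 - value
        # When WL is low the access transistors are off and the cell is isolated.
        self.settle()


cell = SramCell()
cell.write(wl=1, value=1)
print(cell.q, cell.q_bar)  # 1 0
cell.write(wl=0, value=0)  # WL low: the write is ignored
print(cell.q, cell.q_bar)  # 1 0
```

The point of the model is that the cross-coupled pair, not any charge on a capacitor, holds the bit, which is the key structural difference from a DRAM cell.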

On reading the explanation at Wikipedia, I have the following questions:

1. The M# elements are transistors, but why are they drawn like that? We learned to draw transistors in a different way, didn't we?

2. Wikipedia states in its section on reading:

> In theory, reading only requires asserting the word line WL and reading the SRAM cell state by a single access transistor and bit line, e.g. M6, BL. However, bit lines are relatively long and have large parasitic capacitance. To speed up reading, a more complex process is used in practice: The read cycle is started by precharging both bit lines BL and $\overline{BL}$ to high (logic 1) voltage. Then asserting the word line WL enables both the access transistors M5 and M6, which causes one bit line BL voltage to slightly drop. Then the BL and $\overline{BL}$ lines will have a small voltage difference between them. A sense amplifier will sense which line has the higher voltage and thus determine whether there was 1 or 0 stored.

Why does this voltage difference arise?
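As a rough picture: after precharge, the storage node holding 0 is tied to ground through its pull-down transistor, so once WL opens the access transistors it slowly discharges the large bit-line capacitance on its side, while the bit line on the side holding 1 stays near VDD. The Python sketch below walks through the quoted read sequence under a constant-current approximation. The supply voltage, bit-line capacitance, cell current and sense-amplifier threshold are made-up illustrative values, and `read_cell` and its parameters are hypothetical.

```python
from typing import Optional

# Assumed, illustrative values (not taken from any specific process or datasheet).
VDD = 1.0        # precharge voltage of both bit lines (V)
C_BL = 100e-15   # parasitic capacitance of one long bit line (F)
I_CELL = 20e-6   # read current through one access transistor + pull-down (A)


def read_cell(q: int, t_sense: float, threshold: float = 0.05) -> Optional[int]:
    """Differential read of a cell storing `q`, sensed `t_sense` seconds after WL rises."""
    # 1. Precharge: both BL and BL-bar start at VDD.
    v_bl, v_bl_bar = VDD, VDD
    # 2. Assert WL: the node holding 0 pulls its bit line down through the access
    #    transistor and the pull-down NMOS; the node holding 1 leaves its line near VDD.
    #    Constant-current approximation: delta_V = I * t / C (clamped at VDD).
    dv = min(I_CELL * t_sense / C_BL, VDD)
    if q == 1:
        v_bl_bar -= dv  # Q-bar = 0, so BL-bar discharges
    else:
        v_bl -= dv      # Q = 0, so BL discharges
    # 3. Sense amplifier: it can only resolve once the difference exceeds its threshold.
    diff = v_bl - v_bl_bar
    if abs(diff) < threshold:
        return None      # difference still too small to sense reliably
    return 1 if diff > 0 else 0


print(read_cell(1, 0.5e-9))  # 1 (about 100 mV difference)
print(read_cell(0, 0.5e-9))  # 0
print(read_cell(1, 0.1e-9))  # None (only about 20 mV so far)
```

With these assumed numbers, developing the 50 mV the sense amplifier needs takes $C \cdot \Delta V / I$ = 100 fF × 50 mV / 20 µA = 0.25 ns, whereas letting one bit line swing halfway (500 mV) takes 2.5 ns. That factor of ten is why both lines are precharged and a small difference is sensed instead of waiting for a full logic swing on a single line.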