Ackbach


1. Prerequisites

In order to study integral calculus, you must have a firm grasp of differential calculus. You can go to

http://mathhelpboards.com/calculus-10/differential-calculus-tutorial-1393.html

for my differential calculus tutorial, although, of course, there are many books and other tutorials available as well. Note that the prerequisites I listed in my tutorial for differential calculus still apply. That is, integral calculus builds on and assumes differential calculus and all its prerequisites. So you still need your algebra (http://www.mathhelpboards.com/f2/algebra-dos-donts-1385/), geometry, and trigonometry (http://www.mathhelpboards.com/f12/trigonometry-memorize-trigonometry-derive-35/).

I already did an overview of integral calculus in the differential calculus tutorial, so I won't repeat it here. We'll just dive right in.

2. Area Under a Curve

From geometry, you should know how to compute areas of circles, rectangles, trapezoids, and other simple figures. But what is the area under the sine curve from $0$ to $\pi$? You won't learn that in geometry. The answer happens to be $2$. How did I get that?

2.1 Area Approximations

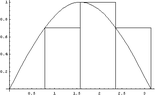

You can approximate the area under a curve by drawing a whole bunch of rectangles and adding up their areas. Let me illustrate: take our function $y=\sin(x)$ on the interval $[0,\pi]$, and divide the interval into four subintervals of equal width: $[0,\pi/4), [\pi/4,\pi/2), [\pi/2,3\pi/4),$ and $[3\pi/4,\pi]$. On each subinterval, we draw a rectangle whose width is the width of the subinterval ($\pi/4$ in every case) and whose height is the value of our function at the left-hand endpoint. Here's a picture to illustrate:

[Figure: four left-endpoint rectangles of width $\pi/4$ under $y=\sin(x)$ on $[0,\pi]$]

The left-most rectangle has no height because $\sin(0)=0$. So, what is the area of all those boxes? Well, we have a rather simple computation to make:

$$A\approx \sin(0)\cdot( \pi/4)+ \sin( \pi/4)\cdot( \pi/4)+ \sin( \pi/2)\cdot( \pi/4)+ \sin(3 \pi/4)\cdot( \pi/4)$$

$$=( \pi/4) \left(0+ \frac{\sqrt{2}}{2}+1+ \frac{\sqrt{2}}{2} \right)$$

$$= \frac{ \pi}{4} (1+\sqrt{2})$$

$$ \approx 1.896.$$

Not too bad (the percent error is $100 \% \cdot(2-1.896)/2 \approx 5.2\%$.)
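If you'd like to check this arithmetic by computer, here is a minimal Python sketch of the four-rectangle computation. The function name `left_riemann_sum` is just an illustrative choice, not a library function; it takes each rectangle's height at the left-hand endpoint of its subinterval, exactly as in the picture.

```python
import math

def left_riemann_sum(f, a, b, n):
    """Approximate the area under f on [a, b] with n equal-width
    rectangles whose heights are taken at left-hand endpoints."""
    width = (b - a) / n
    # Heights at x = a, a + width, ..., a + (n - 1) * width
    return width * sum(f(a + j * width) for j in range(n))

approx = left_riemann_sum(math.sin, 0.0, math.pi, 4)
exact_form = (math.pi / 4) * (1 + math.sqrt(2))
print(round(approx, 3))      # 1.896
print(round(exact_form, 3))  # 1.896
```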

We're studying math. Surely we can get a better answer than this! The answer I just gave is good enough for many purposes, especially in engineering, but we'd like to know the exact answer if we can get it. Patience! The answer is coming.

Let's divvy up the interval into more than 4 subintervals; for grins, let's use 10. So the sum we need to compute now is

$$A \approx \frac{\pi}{10} \left( \sin(0)+ \sin(\pi/10)+ \sin(2\pi/10)+ \sin(3\pi/10)+ \dots + \sin(8\pi/10)+\sin(9\pi/10) \right).$$

Here I've factored out the interval width, $\pi/10$, which you can always do when all the intervals have the same width. So, what do we get for this expression? If you laboriously plug it into your calculator, you get the decimal approximation $1.984$. That's closer: the percent error is about $0.8\%$. Much better. But still not exact.
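Plugging sums like this into a calculator gets tedious fast, so here is a small Python sketch that does it for 10, 100, and 1000 rectangles, with the width $\pi/n$ factored out just as in the text. The function name `sine_area_estimate` is hypothetical, and the exact value $2$ is the answer quoted at the start of this section.

```python
import math

def sine_area_estimate(n):
    """(pi/n) * [sin(0) + sin(pi/n) + ... + sin((n-1)pi/n)],
    with the common interval width pi/n factored out."""
    return (math.pi / n) * sum(math.sin(j * math.pi / n) for j in range(n))

for n in (10, 100, 1000):
    estimate = sine_area_estimate(n)
    pct_error = 100 * (2 - estimate) / 2
    print(f"n={n}: estimate {estimate:.4f}, percent error {pct_error:.4f}%")
```

You should see the percent error shrink quickly as $n$ grows, but it never reaches exactly zero for any finite $n$.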

How do we get the exact number? Answer: by taking the limit as the number of rectangles goes to infinity. To do this conveniently, we need to introduce summation notation.

2.2 Summation Notation

No one wants to write out every term in a summation of the kind we've been talking about. It's cumbersome enough with only 10 terms. What if we had 100? Surely there's a way to write this summation in a compact form? Yes, there is. You use the capital Greek letter "sigma", written $\sum$, to express a summation. A summation typically has what's called a "dummy variable" whose value changes from one term in the summation to the next. Here's the way you write a summation:

$$\sum_{ \text{dummy variable}= \text{start}}^{ \text{finish}} \text{summand}.$$

As usual, the best way to illustrate is with examples. I'll start with an easy one:

$$\sum_{j=1}^{10}1=\underbrace{ \overbrace{1}^{j=1}+ \overbrace{1}^{j=2}+ \overbrace{1}^{j=3}+ \dots+ \overbrace{1}^{j=10}}_{10\; \text{times}}=10.$$

Here's a slightly harder one:

$$\sum_{j=1}^{10}j=\overbrace{1}^{j=1}+ \overbrace{2}^{j=2}+ \overbrace{3}^{j=3}+ \dots+ \overbrace{10}^{j=10}=55.$$
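If you program, sigma notation maps almost directly onto a loop. In this Python sketch, `sigma` is a hypothetical helper (not a built-in) whose arguments are the start, the finish, and the summand; it reproduces both sums above. The one trap is that Python's `range()` excludes its upper limit, while sigma notation includes "finish".

```python
def sigma(start, finish, summand):
    """Hypothetical helper mirroring sum_{j=start}^{finish} summand(j)."""
    # range() stops *before* its upper limit, so add 1 to include "finish"
    return sum(summand(j) for j in range(start, finish + 1))

print(sigma(1, 10, lambda j: 1))  # 10
print(sigma(1, 10, lambda j: j))  # 55
```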

Interesting anecdote about sums of this kind: Gauss supposedly figured out how to do such a sum as a youngster, when he and his class were punished by having to add up all the numbers from 1 to 1000. He reasoned that $1+1000=1001$, $2+999=1001$, $3+998=1001$, and so on: pairing the lowest remaining number with the highest always gives $1001$. So how many pairs are there? $1000/2=500$. So the answer to the sum is $500\cdot 1001=500500$. In general, the same pairing idea gives

$$\sum_{j=1}^{n}j=\frac{n(n+1)}{2}.$$
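The pairing argument translates directly into code. Below is a minimal Python sketch (the function name `gauss_pair_sum` is a hypothetical choice); it deliberately handles only even $n$, where the numbers split evenly into $n/2$ pairs, and compares the result with brute-force addition and with the closed form.

```python
def gauss_pair_sum(n):
    """Add 1 + 2 + ... + n by pairing j with n + 1 - j, as in the anecdote.
    This sketch only handles even n, where the numbers split into n/2 pairs."""
    if n % 2 != 0:
        raise ValueError("this sketch only handles even n")
    pairs = [(j, n + 1 - j) for j in range(1, n // 2 + 1)]
    # Every pair has the same total, n + 1
    assert all(low + high == n + 1 for low, high in pairs)
    return len(pairs) * (n + 1)

n = 1000
print(gauss_pair_sum(n))     # 500500
print(sum(range(1, n + 1)))  # 500500, by brute force
print(n * (n + 1) // 2)      # 500500, from the closed form
```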

Homework: does this formula work when $n$ is odd?

Even harder:

$$\sum_{j=1}^{10}j^{2}=\overbrace{1}^{j=1}+ \overbrace{4}^{j=2}+ \overbrace{9}^{j=3}+ \dots+ \overbrace{100}^{j=10}=385.$$

There is a formula for this sum as well:

$$\sum_{j=1}^{n}j^{2}=\frac{n(n+1)(2n+1)}{6}.$$
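A computer can spot-check this formula against brute-force addition. This is evidence, not a proof, and the Python function names below are illustrative only.

```python
def sum_of_squares_brute(n):
    # 1^2 + 2^2 + ... + n^2, term by term
    return sum(j * j for j in range(1, n + 1))

def sum_of_squares_formula(n):
    # n(n+1)(2n+1)/6; the product is always divisible by 6, so // is exact
    return n * (n + 1) * (2 * n + 1) // 6

print(sum_of_squares_brute(10), sum_of_squares_formula(10))  # 385 385
assert all(sum_of_squares_brute(n) == sum_of_squares_formula(n)
           for n in range(1, 201))
```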

You can prove this using mathematical induction.
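Here is a sketch of how that induction goes. The base case $n=1$ reads $1=\frac{1\cdot 2\cdot 3}{6}$, which is true. For the inductive step, assume the formula holds for $n$ and add the next term, $(n+1)^{2}$:

$$\sum_{j=1}^{n+1}j^{2}=\frac{n(n+1)(2n+1)}{6}+(n+1)^{2}=\frac{(n+1)\left[n(2n+1)+6(n+1)\right]}{6}=\frac{(n+1)(2n^{2}+7n+6)}{6}=\frac{(n+1)(n+2)(2n+3)}{6},$$

which is exactly the formula with $n$ replaced by $n+1$, since $2(n+1)+1=2n+3$.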

Important facts about summation notation:

1. The dummy variable has no visibility outside the summation. Suppose I have an expression like this:

$$\sum_{j=1}^{10} \sin(j \pi)+\frac{e^{x}}{x}.$$

The $e^{x}/x$ term doesn't know that $j$ exists: the scope of $j$ is limited to the summation.

2. The exact identity of the dummy variable is unimportant. I could just as easily use $k$ as $j$:

$$\sum_{j=1}^{10}j=\sum_{k=1}^{10}k.$$

Remember: once I get outside the summation, no one knows about the dummy variable, so it doesn't matter what I use for dummy variables. This should make sense, since once I'm outside the summation, all I see are numbers, no dummy variable.

3. Summation is linear. This means two things:

$$\sum_{j=a}^{b}c\,f(j)=c\sum_{j=a}^{b}f(j),$$

and

$$\sum_{j=a}^{b}[f(j)+g(j)]=\sum_{j=a}^{b}f(j)+\sum_{j=a}^{b}g(j).$$

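Both facts follow from the ordinary rules of arithmetic: the first from the distributive law, the second from the commutative and associative laws of addition. To prove them rigorously for any number of terms, you again use mathematical induction.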
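The three facts above have direct analogues in Python, which makes them easy to experiment with. A minimal sketch follows; `f`, `g`, and the constant `c` are arbitrary sample choices, and a finite check like this illustrates linearity rather than proving it. It relies on the fact that, in Python 3, the loop variable of a generator expression is local to that expression, much like a dummy variable.

```python
# Fact 1: the dummy variable is local to the summation. The loop variable
# of a Python 3 generator expression does not leak out either.
total = sum(j for j in range(1, 11))
print(total)          # 55
print("j" in dir())   # False: no j is visible out here

# Fact 2: renaming the dummy variable changes nothing.
assert sum(j for j in range(1, 11)) == sum(k for k in range(1, 11))

# Fact 3: linearity, checked on sample f, g and a constant c.
def f(j):
    return j * j

def g(j):
    return 3 * j + 1

a, b, c = 1, 10, 7
assert sum(c * f(j) for j in range(a, b + 1)) == c * sum(f(j) for j in range(a, b + 1))
assert sum(f(j) + g(j) for j in range(a, b + 1)) == (
    sum(f(j) for j in range(a, b + 1)) + sum(g(j) for j in range(a, b + 1))
)
```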

2.3 Exact Value of Previous Area

Now that we are armed with summation notation, we can at least write our former sum compactly:

$$A\approx \frac{\pi}{10} \sum_{j=0}^{9} \sin(j\pi/10).$$

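But we want more and more rectangles! What would this sum look like if we let the number of rectangles be an arbitrary $n$? Each rectangle would then have width $\pi/n$, and the left-hand endpoints would be $j\pi/n$ for $j=0,1,\dots,n-1$, so we'd get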

$$A \approx \frac{\pi}{n} \sum_{j=0}^{n-1} \sin(j\pi/n).$$

How do you evaluate this sum? If you look at the Wikipedia page on trigonometric identities, you'll see that there's a formula for exactly this sort of sum:

$$ \sum_{j=0}^{n} \sin( \varphi+j \alpha)= \frac{ \sin \left( \frac{(n+1) \alpha}{2} \right) \cdot \sin \left( \varphi+ \frac{n \alpha}{2} \right)}{ \sin( \alpha/2)}.$$

Whew! In our case, we can simplify this a bit. Comparing these two expressions, we see right away that $\varphi=0$. That leaves us with

$$ \sum_{j=0}^{n} \sin(j \alpha)= \frac{ \sin \left( \frac{(n+1) \alpha}{2} \right) \cdot \sin \left( \frac{n \alpha}{2} \right)}{ \sin( \alpha/2)}.$$

We don't want this sum to go all the way to $n$, though, but only to $n-1$. So replace $n$ by $n-1$ throughout:

$$ \sum_{j=0}^{n-1} \sin(j \alpha)= \frac{ \sin \left( \frac{n \alpha}{2} \right) \cdot \sin \left( \frac{(n-1) \alpha}{2} \right)}{ \sin( \alpha/2)}.$$
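Formulas copied from a reference page are worth a sanity check. This Python sketch (the function names are hypothetical) compares the $n-1$ version of the identity with direct summation for a handful of $n$ and $\alpha$ values. It assumes $\sin(\alpha/2)\neq 0$, since the formula divides by it.

```python
import math

def sine_sum_direct(n, alpha):
    # sin(0) + sin(alpha) + ... + sin((n - 1) * alpha)
    return sum(math.sin(j * alpha) for j in range(n))

def sine_sum_closed_form(n, alpha):
    # sin(n*alpha/2) * sin((n-1)*alpha/2) / sin(alpha/2); needs sin(alpha/2) != 0
    return (math.sin(n * alpha / 2) * math.sin((n - 1) * alpha / 2)
            / math.sin(alpha / 2))

for n in (1, 4, 10, 37):
    for alpha in (0.1, 0.7, math.pi / n, 2.5):
        assert math.isclose(sine_sum_direct(n, alpha),
                            sine_sum_closed_form(n, alpha),
                            rel_tol=1e-9, abs_tol=1e-12)
print("closed form agrees with direct summation on all tested cases")
```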

Finally, we see that we need $\alpha=\pi/n$. So, putting that in our expression yields

$$ \sum_{j=0}^{n-1} \sin(j \pi/n)= \frac{ \sin \left( \frac{n \pi}{2n} \right) \cdot \sin \left( \frac{(n-1) \pi}{2n} \right) }{ \sin( \pi/(2n) ) } = \frac{ \sin \left( \frac{\pi}{2} \right) \cdot \sin \left( \frac{(n-1) \pi}{2n} \right) }{ \sin( \pi/(2n) ) } = \frac{ \sin \left( \pi/2 - \pi/(2n) \right) }{ \sin( \pi/(2n) ) }.$$

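The last equality uses $\sin(\pi/2)=1$ and $(n-1)\pi/(2n)=\pi/2-\pi/(2n)$. Applying the angle-subtraction formula $\sin(a-b)=\sin a\cos b-\cos a\sin b$ to the numerator, we obtain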

$$ \sum_{j=0}^{n-1} \sin(j \pi/n) = \frac{ \sin ( \pi/2 ) \cos( \pi/(2n)) - \sin(\pi/(2n)) \cos( \pi/2) } { \sin( \pi / ( 2n ) ) } = \frac{ \cos( \pi/(2n)) } { \sin( \pi / ( 2n ) ) }.$$

Yes, I could write the cotangent here, but I'm going to leave it where it is. So, to recap:

$$ \frac{ \pi}{n} \sum_{j=0}^{n-1} \sin(j\pi/n) = \frac{ \pi}{n} \frac{ \cos( \pi/(2n)) } { \sin( \pi / ( 2n ) ) }.$$
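As a consistency check, this closed form should reproduce the $1.896$ and $1.984$ we found earlier by brute force. The Python sketch below (function names are hypothetical) prints both versions side by side for $n=4$ and $n=10$; the two columns should agree.

```python
import math

def area_estimate_direct(n):
    # (pi/n) * sum_{j=0}^{n-1} sin(j*pi/n): n left-endpoint rectangles
    return (math.pi / n) * sum(math.sin(j * math.pi / n) for j in range(n))

def area_estimate_closed(n):
    # (pi/n) * cos(pi/(2n)) / sin(pi/(2n)), the closed form derived above
    x = math.pi / (2 * n)
    return (math.pi / n) * math.cos(x) / math.sin(x)

for n in (4, 10):
    print(n, round(area_estimate_direct(n), 3), round(area_estimate_closed(n), 3))
```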

What we really want to do is compute the limit:

$$\lim_{n\to \infty} \frac{ \pi}{n} \sum_{j=0}^{n-1} \sin(j\pi/n) = \lim_{n\to \infty}\frac{ \pi}{n} \frac{ \cos( \pi/(2n)) } { \sin( \pi / ( 2n ) ) }.$$

So, what's going on in this limit is that the number of rectangles is going to infinity, and they are getting really small in width. Really small! We say they are getting infinitesimally small. Can we compute this limit? I think we can. Recall that

$$\lim_{x\to 0}\frac{\sin(x)}{x}=1.$$

Here's the dirty trick: I say that taking a limit as $n\to\infty$ is the same as saying that $(1/n)\to 0$. So, let's make the substitution $x=1/n$, and re-evaluate:

$$\lim_{n\to \infty}\frac{ \pi}{n} \frac{ \cos( \pi/(2n)) } { \sin( \pi / ( 2n ) ) }= \lim_{x\to 0}(x\pi) \frac{ \cos( x \pi/2) } { \sin( x \pi / 2 ) }.$$

Quick, while no one's looking, I'm going to multiply and divide by $2$, thus:

$$=2\lim_{x\to 0}\frac{x\pi}{2} \frac{ \cos( x \pi/2) } { \sin( x \pi / 2 ) }.$$

Now I'm going to break this limit into two pieces using the product rule for limits (legitimate here, because both of the resulting limits exist):

$$=2\lim_{x\to 0}\frac{x\pi/2}{\sin(x\pi/2)}\cdot\lim_{x\to 0}\cos(x\pi/2).$$

If $x\to 0$, then surely $x\pi/2 \to 0$. Using the quotient rule for limits on the first factor, and $\cos(0)=1$ on the second, we get

$$=2\frac{1}{\lim_{x\pi/2\to 0}\frac{\sin(x\pi/2)}{x\pi/2}}\cdot 1=2\cdot 1=2.$$

And there it is! The exact answer. No sweat, right? (I hope you were sweating through all that, actually, because it helps you to realize just how difficult a problem it is to find exact areas.)
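If you want to watch the limit happen numerically, this Python sketch evaluates the closed form $\frac{\pi}{n}\frac{\cos(\pi/(2n))}{\sin(\pi/(2n))}$ for increasingly large $n$. The function name `area_estimate` is hypothetical, and a table of numbers like this is only a sanity check; the limit argument above is what actually establishes the exact value $2$.

```python
import math

def area_estimate(n):
    # (pi/n) * cos(pi/(2n)) / sin(pi/(2n)) for n left-endpoint rectangles
    x = math.pi / (2 * n)
    return (math.pi / n) * math.cos(x) / math.sin(x)

for n in (10, 100, 1_000, 10_000, 100_000):
    estimate = area_estimate(n)
    print(f"n = {n:>7,}: estimate = {estimate:.10f}, gap to 2 = {2 - estimate:.2e}")
```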
