Tone L

- TL;DR Summary

- Why doesn't normalized data represent percent change? How can I quantify normalized data?

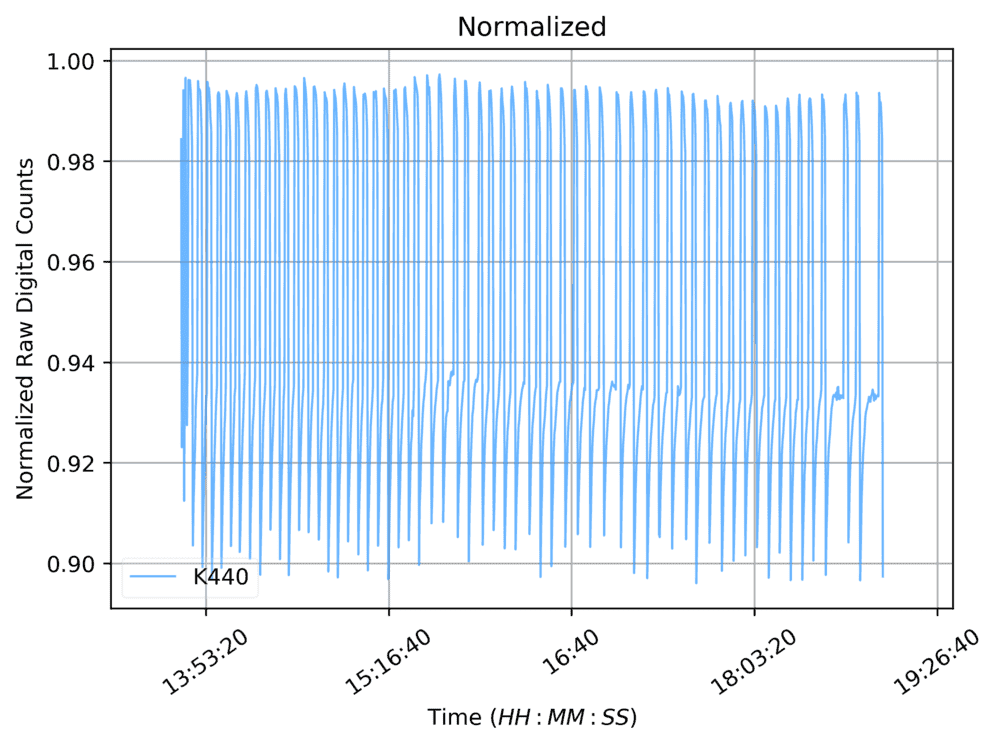

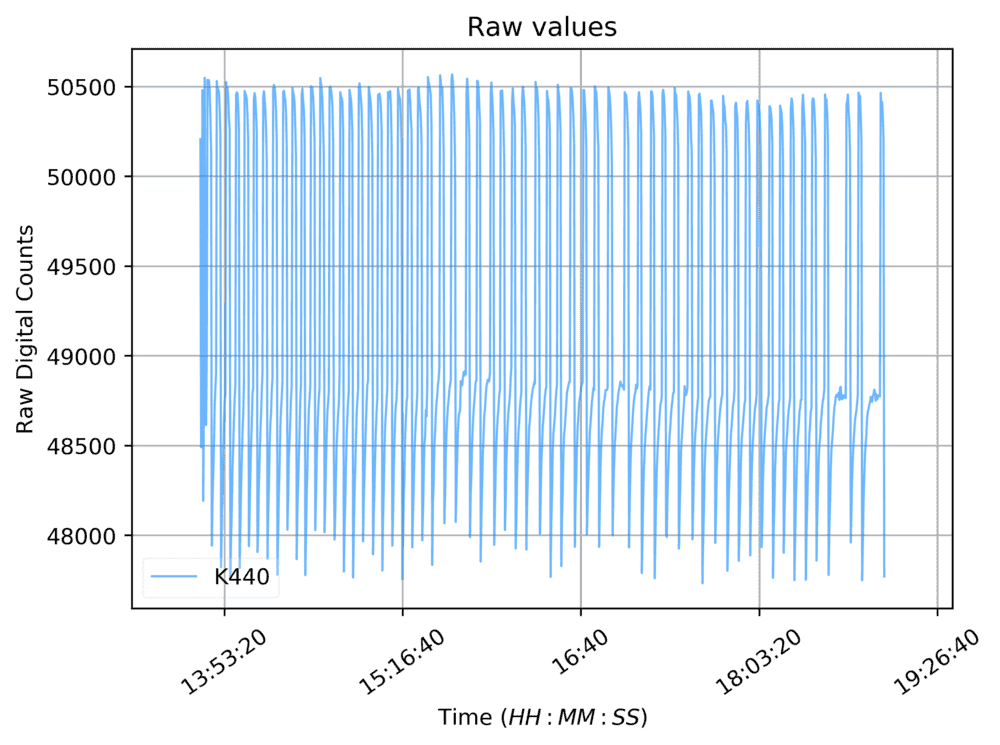

I have been working with time series data of spectral signals. Each wavelength has a different signal level, so I normalize the data in order to plot it effectively. However, I am struggling to quantify the new normalized data. I will give an example below.

Normalizing data often means re-scaling each variable by its minimum and range so that all the elements lie between 0 and 1, thus bringing all the numeric columns in the data set to a common scale.

The equation is defined as $$x=\frac{x_i-x_{min}}{x_{max}-x_{min}}$$ which produces a new list of values ranging from 0 to 1.
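To make the normalization step concrete, here is a minimal Python sketch of min-max scaling. The function name `min_max_normalize` and the short `signal` list are made up for illustration: the two middle values are the ones from my example, but the minimum and maximum are invented and are not my real data.

```python
def min_max_normalize(values):
    """Rescale values to [0, 1] using (x_i - x_min) / (x_max - x_min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # The range is zero, so the formula would divide by zero.
        raise ValueError("all values are equal; cannot normalize")
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical signal for one wavelength (illustrative only).
signal = [50400.0, 50415.0, 50482.0, 50500.0]
normalized = min_max_normalize(signal)
print(normalized)
```

The smallest value always maps to 0 and the largest to 1, whatever the original magnitudes were.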

Now, to illustrate my confusion, I will present an example for just one wavelength. At ##t_0## the corresponding normalized value is 0.987 and at ##t_{10}## the value is 0.927.

At ##t_0## the spectral value is 50482 and at ##t_{10}## it is 50415. Using the spectral values, the percent difference between ##t_0## and ##t_{10}## is 0.13%, while for the normalized data it is 6.07%.
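The arithmetic can be reproduced with a short Python sketch. It assumes "percent difference" means the absolute change divided by the starting value; `percent_change` is a helper name introduced here for illustration. The normalized inputs are the rounded values quoted above, so the last digit may differ slightly from the 6.07% I got.

```python
def percent_change(start, end):
    """Absolute change relative to the starting value, in percent."""
    return abs(end - start) / abs(start) * 100.0


# Values quoted in the post for a single wavelength.
raw_t0, raw_t10 = 50482.0, 50415.0
norm_t0, norm_t10 = 0.987, 0.927

raw_pct = percent_change(raw_t0, raw_t10)
norm_pct = percent_change(norm_t0, norm_t10)

print(f"raw:        {raw_pct:.2f}%")
print(f"normalized: {norm_pct:.2f}%")
```

Writing the normalized ratio out with the formula above gives ##(x_0 - x_{10})/(x_0 - x_{min})##: the ##x_{min}## offset cancels in the numerator but stays in the denominator, whereas the raw ratio divides by ##x_0## itself.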

When I plot the results, they are visually identical. Normalizing doesn't seem to simply re-scale the values; it changes them completely, yet visually they look the same. Hence my confusion: why are the two percent changes not equal? Thanks a lot, guys!
