- #1

Felipe Lincoln

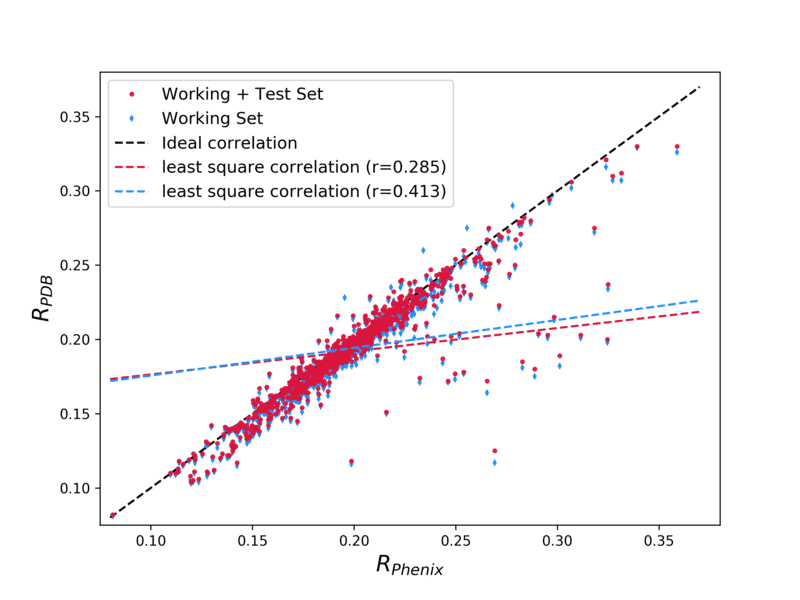

I'm analysing some data, and my task is to find the line that best fits it. Using least squares, I get these dashed curves (red and blue), both with low correlation coefficients. Is there another method that takes into account how much of the data lies along the direction of a line?
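
For reference, here is a minimal sketch of the kind of fit I mean: an ordinary least-squares line plus the Pearson correlation coefficient. It assumes NumPy; the helper name `fit_line` and the sample points are made up for illustration and are not the data in the plot.

```python
import numpy as np

def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept.

    Also returns the Pearson correlation coefficient r between x and y.
    (Hypothetical helper, for illustration only.)
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Degree-1 polynomial fit minimises the sum of squared vertical residuals.
    slope, intercept = np.polyfit(x, y, 1)
    # Off-diagonal entry of the 2x2 correlation matrix is Pearson's r.
    r = np.corrcoef(x, y)[0, 1]
    return slope, intercept, r

# Made-up points lying exactly on y = 2x + 1 (not the data from the plot).
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept, r = fit_line(x, y)
print(f"slope={slope:.3f}, intercept={intercept:.3f}, r={r:.3f}")
```

Note that this only minimises the vertical distances to the line, so points that are spread widely can pull r down even when many of them line up along one direction.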