lyd123

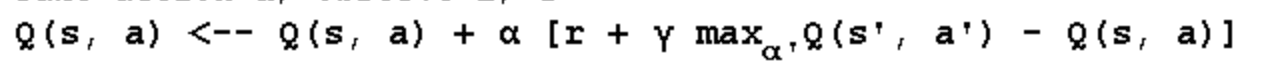

Hi, I'm learning reinforcement learning, and I'm finding it hard to compute Q-values.

I'm not sure whether the question wants me to use this formula, since I'm not given the learning rate \( \alpha \), and Q-learning doesn't need a transition matrix anyway. I'm really not sure where to begin. Thanks for any help.
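For context, here is a minimal sketch of the two computations being contrasted. It assumes a small MDP with a known transition model `P[s][a][s2]`, expected reward `R[s][a]`, and discount `gamma`. All of these names and the toy numbers are hypothetical, since the values in the image aren't reproduced here. When the transition model is given, Q-values can be computed directly from the Bellman optimality equation \( Q(s,a) = R(s,a) + \gamma \sum_{s'} P(s' \mid s,a) \max_{a'} Q(s',a') \), and no learning rate is involved. The learning rate \( \alpha \) only appears in the sample-based Q-learning update, which uses observed transitions instead of \( P \).

```python
def q_value_iteration(P, R, gamma, n_iters=1000, tol=1e-10):
    """Compute Q*(s, a) for a known MDP by iterating the Bellman optimality equation.

    P[s][a][s2]: probability of moving from s to s2 under action a (assumed given).
    R[s][a]: expected immediate reward for taking a in s (assumed given).
    No learning rate is needed: the expectation over next states is exact.
    """
    n_s, n_a = len(P), len(P[0])
    Q = [[0.0] * n_a for _ in range(n_s)]
    for _ in range(n_iters):
        V = [max(row) for row in Q]  # V(s') = max_a' Q(s', a')
        new_Q = [
            [R[s][a] + gamma * sum(P[s][a][s2] * V[s2] for s2 in range(n_s))
             for a in range(n_a)]
            for s in range(n_s)
        ]
        delta = max(abs(new_Q[s][a] - Q[s][a]) for s in range(n_s) for a in range(n_a))
        Q = new_Q
        if delta < tol:  # stop once the values have converged
            break
    return Q


def q_learning_update(Q, s, a, r, s_next, alpha, gamma):
    """One sample-based Q-learning update; here alpha IS required."""
    target = r + gamma * max(Q[s_next])  # one-sample estimate of the Bellman target
    Q[s][a] += alpha * (target - Q[s][a])
    return Q


# Hypothetical 2-state, 2-action example:
# in state 0, action 0 stays in 0 (reward 0) and action 1 moves to absorbing state 1 (reward 1).
P = [[[1.0, 0.0], [0.0, 1.0]],
     [[0.0, 1.0], [0.0, 1.0]]]
R = [[0.0, 1.0], [0.0, 0.0]]
print(q_value_iteration(P, R, gamma=0.9))  # [[0.9, 1.0], [0.0, 0.0]]
```

So if the exercise supplies a transition matrix and no \( \alpha \), it is likely asking for the model-based (Bellman/value-iteration) calculation rather than Q-learning updates. That is an interpretation, though, since the exact wording of the question isn't shown here.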

I'm not sure if the question wants me to follow this formula since I'm not given the learning rate \( \alpha \), and also because Q- Learning doesn't need a transition matrix. I'm really not sure where to begin. Thanks for any help.