- #1

jarekduda

- 82

- 5

Shor's algorithm is rather the most interesting quantum algorithm as it shifts a problem which is believed to need exponential classical time, to polynomial time for quantum computer, additionally endangering asymmetric encryption like RSA and ECC.

The real question is if there are other problems, beside factorization and similar discrete logarithm problem, which can be shifted from exponential to polynomial time thanks to quantum computers? Are there known such other examples?

It is generally clear that to design a quantum algorithm, we should start with Hadamar gates, perform some unitary evolution, then measurement. However, personally for me the unitary part is a bit too abstract for direct algorithmic thinking - Shor grasps what seems to be a general and powerful mechanism, which might be possible to exploit also for essentially different problems.

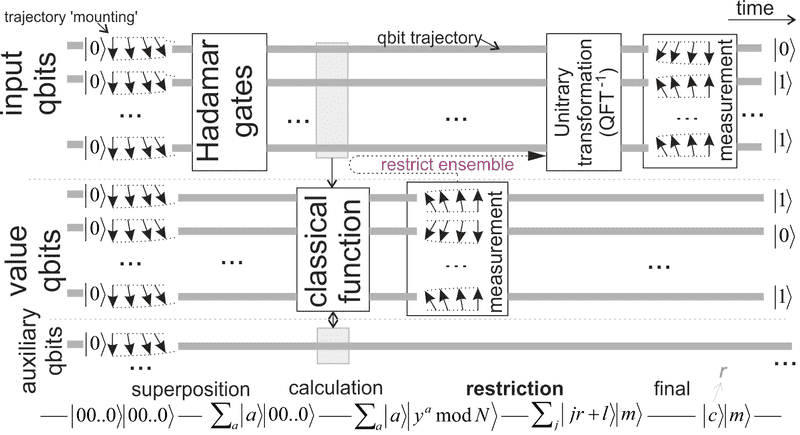

So I am looking for quantum algorithms exploiting QM in a similar way as Shor - here is a diagram for its quantum subroutine:

Beside periodicity with QFT, what other information could we extract from such restricted ensemble? Asking if its size is larger than one is simple - any other questions we can perform?

Any thoughts? Interesting quantum algorithms beside Deutsch, Grover and Shor?

The real question is if there are other problems, beside factorization and similar discrete logarithm problem, which can be shifted from exponential to polynomial time thanks to quantum computers? Are there known such other examples?

It is generally clear that to design a quantum algorithm, we should start with Hadamar gates, perform some unitary evolution, then measurement. However, personally for me the unitary part is a bit too abstract for direct algorithmic thinking - Shor grasps what seems to be a general and powerful mechanism, which might be possible to exploit also for essentially different problems.

So I am looking for quantum algorithms exploiting QM in a similar way as Shor - here is a diagram for its quantum subroutine:

- The Hadamar gates create superposition of all (exponential number) values for input qubits.

- Then we perform a classical function on them, which is here: "f(a) = y^a mod N", where N is the number we would like to factorize, y is some (usually random) number smaller than N.

- Then we perform measurement of value of this function f(a)=m, which is random, but this measurement restricts the original ensemble to only input values a, such that f(a)=m.

- Mathematics says that this restricted ensemble has to be periodic, this period can be concluded from value of (quantum) Fourier transform, and allows to conclude the factors.

- use Hadamar gates to get superposition of all inputs,

- perform some classical function f,

- measure value of this function m, restricting the original ensemble to f(a)=m,

- extract some information from the final restricted ensemble.

Beside periodicity with QFT, what other information could we extract from such restricted ensemble? Asking if its size is larger than one is simple - any other questions we can perform?

Any thoughts? Interesting quantum algorithms beside Deutsch, Grover and Shor?