thaiqi

TL;DR Summary: I am wondering about a proof that the variation sign and the integral sign can be interchanged.

Hello, everyone.

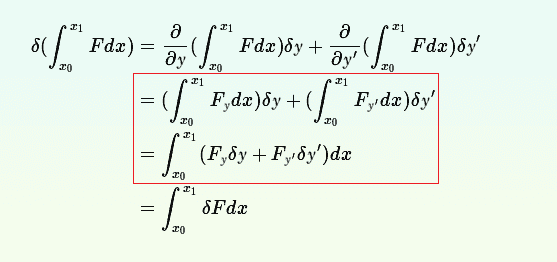

I know that the order of the variation sign and the integral sign can be exchanged, and one book gives a proof of this. I am puzzled by one step in it, marked with the red rectangle in the image above:

How can ##\delta y## and ##\delta y^\prime## be moved inside the integral? Aren't they functions of ##x##?
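For concreteness, here is a minimal numerical sketch of the identity in question, assuming the standard definition ##\delta J = \varepsilon \left.\frac{d}{d\varepsilon}J[y+\varepsilon\eta]\right|_{\varepsilon=0}## with ##J[y]=\int_a^b F(x,y,y')\,dx## and ##\delta y = \varepsilon\eta(x)##. The integrand ##F = y^2 + y'^2##, the curve ##y=\sin x##, the perturbation ##\eta = x(1-x)## on ##[0,1]##, and all function names are hypothetical choices for illustration, not taken from the book. Since both sides carry the same factor ##\varepsilon##, the code compares ##dJ/d\varepsilon## at ##\varepsilon=0## (variation taken outside the integral) with ##\int_0^1 (F_y\,\eta + F_{y'}\,\eta')\,dx## (variation taken inside), where ##\eta(x)## and ##\eta'(x)## are evaluated pointwise in the integrand.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule for f on [a, b] with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


# Hypothetical example data (not from the book): F(x, y, p) = y^2 + p^2, p = y'
def F(x, y, p):
    return y**2 + p**2


def F_y(x, y, p):
    return 2 * y


def F_p(x, y, p):
    return 2 * p


def y(x):
    return math.sin(x)


def dy(x):
    return math.cos(x)


def eta(x):
    # Perturbation vanishing at both endpoints: delta y = eps * eta(x)
    return x * (1 - x)


def deta(x):
    return 1 - 2 * x


A, B = 0.0, 1.0


def J(eps):
    """J[y + eps*eta] = integral of F(x, y + eps*eta, y' + eps*eta') over [A, B]."""
    return simpson(lambda x: F(x, y(x) + eps * eta(x), dy(x) + eps * deta(x)), A, B)


def variation_outside(h=1e-3):
    """dJ/d(eps) at eps = 0 via a central difference: vary the whole integral."""
    return (J(h) - J(-h)) / (2 * h)


def variation_inside():
    """Integral of F_y*eta + F_p*eta': vary the integrand at each point x."""
    return simpson(
        lambda x: F_y(x, y(x), dy(x)) * eta(x) + F_p(x, y(x), dy(x)) * deta(x),
        A,
        B,
    )


if __name__ == "__main__":
    print(variation_outside(), variation_inside())
```

Because this ##F## is quadratic in ##\varepsilon##, the central difference introduces no truncation error, so the two numbers should agree up to floating-point rounding.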