- #1

SlurrerOfSpeech

- 141

- 11

So I created my own implementation of a hash set of strings and am XORing the characters as a hashing function:

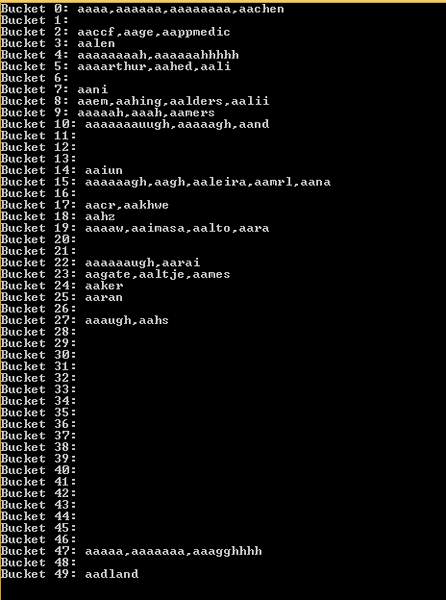

Problem is that it's not doing a good job of distributing N strings into N buckets. For instance, I initialize it with 50 buckets and add in the first 50 words of http://www.math.sjsu.edu/~foster/dictionary.txt and it ends up like

Now I know that's not the best example since the first 50 words (alphabetized) are very similar in characters, but I've tested it out with random strings and it does the same thing.

Code:

private int _GetBucketNumber ( string s, List<List<string>> Buckets )

{

// s: string whose index to look up

// Buckets: source buckets

// disallow empty or NULL strings

if ( String.IsNullOrEmpty(s) ) { throw new ArgumentException("Cannot add empty or NULL string to set"); }

if ( Buckets.Count == 0 ) { throw new ArgumentException("Tried to call _GetBucketNumber on empty bucket list"); }

// XOR characters together and mod by length of buckets

char c = s[0];

for ( int i = 1; i < s.Length; ++i ) { c ^= s[i]; }

return (int)c % Buckets.Count;

}Problem is that it's not doing a good job of distributing N strings into N buckets. For instance, I initialize it with 50 buckets and add in the first 50 words of http://www.math.sjsu.edu/~foster/dictionary.txt and it ends up like

Now I know that's not the best example since the first 50 words (alphabetized) are very similar in characters, but I've tested it out with random strings and it does the same thing.

Last edited by a moderator: