- #1

evinda

Gold Member

MHB

- 3,836

- 0

Hello! (Wave)

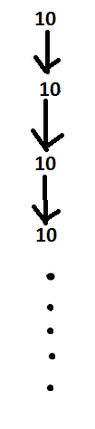

I want to show that the $T(n)$ of the following algorithm is equal to $O(\log n)$.

How can we do it, now that we have if, else conditions? (Thinking)

Also, how can we find how many memory is required, so that the algorithm is executed?

I want to show that the $T(n)$ of the following algorithm is equal to $O(\log n)$.

How can we do it, now that we have if, else conditions? (Thinking)

Code:

int BinarySearch(int A[1…n], int y, int low, int high) {

if (high < low) {

return -1; //not found

}

mid = low + (high - low) / 2;

if (A[mid] > y) {

return BinarySearch(A, y, low, mid-1);

}

else if (A[mid] < y) {

return BinarySearch(A, y, mid+1, high);

}

else {

return mid; //found

}

}Also, how can we find how many memory is required, so that the algorithm is executed?