- #1

Math Amateur

I am reading Paul E. Bland's book "Rings and Their Modules" ...

Currently I am focused on Section 2.2 Free Modules ... ...

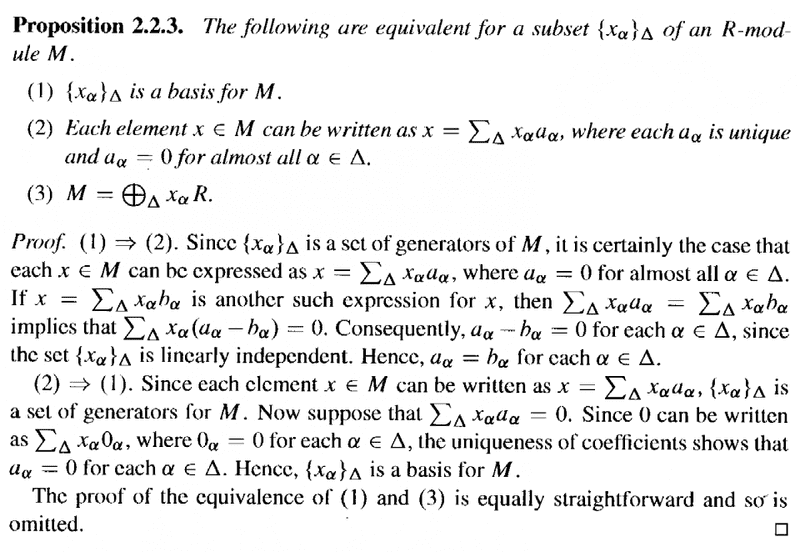

I need some help in order to fully understand the proof of the equivalence of (1) and (3) in Proposition 2.2.3 ...

Proposition 2.2.3 and its proof read as follows:

Bland omits the proof of the equivalence of (1) and (3) ...

Can someone please help me get started on a rigorous proof of the equivalence of (1) and (3) ... especially covering the case where [itex]\Delta[/itex] is an uncountably infinite set ...

Peter
