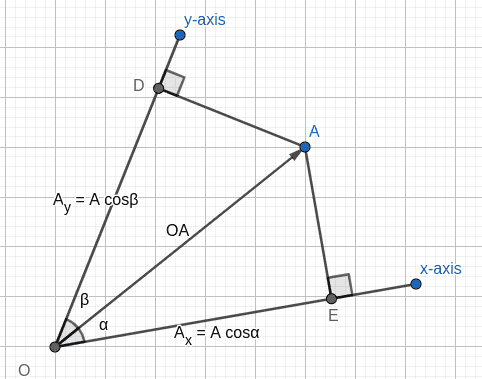

Yes, nice! Your diagram and parallelogram constructions are perfect, and your equations look correct. I'll explain the more general theory, which makes non-orthogonal bases much easier to deal with. There are going to be a few new concepts here, so keep your wits about you...

First let's just consider a 3-dimensional Euclidean vector space ##V##, where we have access to an inner product and a cross product, and let's choose a basis ##(\mathbf{e}_x, \mathbf{e}_y, \mathbf{e}_z)## which is not necessarily orthogonal or even normalised. An arbitrary vector ##\mathbf{A}## can always be written as a unique linear combination of the basis vectors$$\mathbf{A} = a_x \mathbf{e}_x + a_y \mathbf{e}_y + a_z \mathbf{e}_z$$Now, let's introduce the vectors ##{\mathbf{e}_x}^*, {\mathbf{e}_y}^*, {\mathbf{e}_z}^*##, defined by

$${\mathbf{e}_x}^* = \frac{\mathbf{e}_y \times \mathbf{e}_z}{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}, \quad {\mathbf{e}_y}^* = \frac{\mathbf{e}_z \times \mathbf{e}_x}{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}, \quad {\mathbf{e}_z}^* = \frac{\mathbf{e}_x \times \mathbf{e}_y}{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}$$where ##\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z## is the scalar triple product of the original basis vectors, which is nonzero because they form a basis. These strange new starred vectors make it very easy to determine the numbers ##a_x##, ##a_y## and ##a_z##: simply take the inner product of ##\mathbf{A}## with the corresponding starred vector, e.g.

$$\mathbf{A} \cdot {\mathbf{e}_x}^* = a_x \underbrace{\frac{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}}_{=1} + a_y \underbrace{\frac{\mathbf{e}_y \cdot \mathbf{e}_y \times \mathbf{e}_z}{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}}_{=0} + a_z \underbrace{\frac{\mathbf{e}_z \cdot \mathbf{e}_y \times \mathbf{e}_z}{\mathbf{e}_x \cdot \mathbf{e}_y \times \mathbf{e}_z}}_{=0} = a_x$$due to the property that the scalar triple product of three vectors vanishes if any two of them are the same. Now, it turns out that if the basis ##(\mathbf{e}_x, \mathbf{e}_y, \mathbf{e}_z)## is orthonormal, then ##\mathbf{e}_i = {\mathbf{e}_i}^*## and you can simply obtain the components ##a_i## by taking the scalar product with the original basis vectors ##\mathbf{e}_i## as you are likely used to. But in the general case ##\mathbf{e}_i \neq {\mathbf{e}_i}^*##, and you must be more careful.
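To make the recipe concrete, here is a minimal Python sketch (an illustration, not part of the argument itself) that builds the starred vectors from the cross-product formula and then reads off the components with inner products. It assumes vectors are stored as 3-tuples of coordinates in an ordinary orthonormal frame of ##\mathbb{R}^3## with the usual dot product, and it uses `fractions.Fraction` so the checks are exact. The helper names `dot`, `cross` and `reciprocal_basis`, and the basis values, are made up for illustration.

```python
from fractions import Fraction


def dot(u, v):
    """Euclidean inner product of two 3-vectors."""
    return sum(a * b for a, b in zip(u, v))


def cross(u, v):
    """Cross product u x v of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def reciprocal_basis(ex, ey, ez):
    """Starred vectors (ex*, ey*, ez*): cross products divided by the triple product."""
    triple = dot(ex, cross(ey, ez))  # e_x . (e_y x e_z), nonzero for a genuine basis
    if triple == 0:
        raise ValueError("the three vectors are linearly dependent, not a basis")

    def scale(w):
        # Exact rational division so results can be compared exactly.
        return tuple(Fraction(c) / triple for c in w)

    return scale(cross(ey, ez)), scale(cross(ez, ex)), scale(cross(ex, ey))


# A skewed, non-normalised basis (values chosen only for illustration).
ex, ey, ez = (1, 0, 0), (1, 2, 0), (0, 1, 3)
ex_s, ey_s, ez_s = reciprocal_basis(ex, ey, ez)

# Build A from known components, then recover them with the starred vectors.
a_x, a_y, a_z = 2, -1, 5
A = tuple(a_x * p + a_y * q + a_z * r for p, q, r in zip(ex, ey, ez))
print(dot(A, ex_s), dot(A, ey_s), dot(A, ez_s))  # prints: 2 -1 5

# For an orthonormal basis (here a left-handed one) the starred vectors
# coincide with the original basis vectors.
onb = ((1, 0, 0), (0, 0, 1), (0, 1, 0))
assert reciprocal_basis(*onb) == onb
```

Note that for this skewed basis ##\mathbf{A} \cdot \mathbf{e}_x## would not give ##a_x##; only the starred vectors do.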

-------------------

Maybe you are satisfied with that, but if you're not put off then there's some more hiding under the surface! Consider an ##n##-dimensional vector space ##V## and suppose we've chosen a general basis ##\beta = (\mathbf{e}_1, \dots, \mathbf{e}_n)##. Now, consider an arbitrary vector ##\mathbf{v} \in V##; again, by the theory of linear algebra it's possible to write ##\mathbf{v}## as a unique linear combination of the basis,

$$\mathbf{v} = v^1 \mathbf{e}_1 + \dots + v^n \mathbf{e}_n = \sum_{i=1}^n v^i \mathbf{e}_i$$It's very important to note that the superscripts, e.g. ##v^2##, do not represent exponentiation here; they are just labels, and why we have chosen superscripts will hopefully become clear soon. To the vector space ##V## you may associate the dual space ##V^*##, which is just the space of objects ##\boldsymbol{\omega} \in V^*## that act linearly on vectors to give real numbers, for example ##\boldsymbol{\omega}(\mathbf{v}) = c \in \mathbb{R}##. ##V^*## is itself a vector space, and we are free to choose a basis for it. It turns out that there is a natural basis ##\beta^* = (\mathbf{f}^1, \dots, \mathbf{f}^n)## of ##V^*## associated to our basis ##\beta = (\mathbf{e}_1, \dots, \mathbf{e}_n)## of ##V##, defined by the rule$$\mathbf{f}^i(\mathbf{e}_j) = \delta^i_j$$where ##\delta^i_j## is ##1## if ##i=j## and ##0## if ##i \neq j##; since a linear map is completely determined by its values on a basis, this rule fixes each ##\mathbf{f}^i##. And of course, we can then write any element ##\boldsymbol{\omega} \in V^*## as a linear combination of the ##\beta^*## basis,$$\boldsymbol{\omega} = \omega_1 \mathbf{f}^1 + \dots + \omega_n \mathbf{f}^n = \sum_{i=1}^n \omega_i \mathbf{f}^i$$

[exercise: prove that the ##\mathbf{f}^i## are linearly independent, and show that both sides of the expansion of ##\boldsymbol{\omega}## agree when acting on an arbitrary vector ##\mathbf{v}##!].
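For ##V = \mathbb{R}^n## the defining rule ##\mathbf{f}^i(\mathbf{e}_j) = \delta^i_j## can be turned into a computation. A minimal sketch, assuming vectors are given by their standard coordinates ##(x_1, \dots, x_n)## and each functional is stored as a row of numbers ##(c_1, \dots, c_n)## acting by ##\sum_k c_k x_k## (a representation chosen for this sketch, not something from the argument above): if ##E## is the matrix whose columns are the ##\mathbf{e}_j##, the rule says ##FE = I##, so the rows of ##F = E^{-1}## are the ##\mathbf{f}^i##. The helpers `inverse`, `dual_basis` and `act` are hypothetical names, and the basis is made up.

```python
from fractions import Fraction


def inverse(M):
    """Exact Gauss-Jordan inverse of a square matrix given as a list of rows."""
    n = len(M)
    # Augment [M | I] using exact rational arithmetic.
    A = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(M)]
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry to use as pivot.
        pivot = next((r for r in range(col, n) if A[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular matrix: the vectors are not a basis")
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [x / p for x in A[col]]  # scale the pivot row so the pivot is 1
        for r in range(n):
            if r != col:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return [row[n:] for row in A]  # the right half is now M^{-1}


def dual_basis(basis):
    """Return f^1..f^n for the basis e_1..e_n, each f^i as a row of coefficients.

    With E the matrix whose columns are the e_j, the rule f^i(e_j) = delta^i_j
    reads F E = I, so F = E^{-1}.
    """
    n = len(basis)
    E = [[basis[j][k] for j in range(n)] for k in range(n)]  # column j is e_j
    return inverse(E)


def act(f, v):
    """Apply the linear functional f (row of coefficients) to the vector v."""
    return sum(a * b for a, b in zip(f, v))


# A non-orthogonal, non-normalised basis of R^4 (illustrative values).
basis = [(1, 0, 0, 0), (1, 1, 0, 0), (0, 2, 1, 0), (1, 0, 0, 3)]
f = dual_basis(basis)
delta = [[act(f[i], basis[j]) for j in range(4)] for i in range(4)]
print(delta == [[int(i == j) for j in range(4)] for i in range(4)])  # True
```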

Anyway, we don't want to go too far into the properties of the dual space; instead we just want to know how to find the components ##v^i## of ##\mathbf{v} \in V##! Luckily, with this machinery in place, it's quite straightforward. Consider acting the ##i^{\mathrm{th}}## basis vector of ##\beta^*##, ##\mathbf{f}^i##, on ##\mathbf{v}## and using linearity:$$\mathbf{f}^i(\mathbf{v}) = \mathbf{f}^i \left(\sum_{j=1}^n v^j \mathbf{e}_j \right) = \sum_{j=1}^n v^j \mathbf{f}^i(\mathbf{e}_j) = \sum_{j=1}^n v^j \delta^i_j = v^i$$in other words, acting the associated dual basis vector on ##\mathbf{v}## yields the relevant component.
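In code, reading off the components is just one functional evaluation per index. A small sketch in ##\mathbb{R}^2##, assuming standard coordinates, functionals stored as rows of coefficients, and the illustrative basis ##\mathbf{e}_1 = (2, 1)##, ##\mathbf{e}_2 = (1, 1)##; the dual basis comes from the explicit ##2 \times 2## inverse of the matrix with columns ##\mathbf{e}_1, \mathbf{e}_2##. The names `dual_basis_2d` and `act` are hypothetical.

```python
from fractions import Fraction


def dual_basis_2d(e1, e2):
    """Dual basis (f^1, f^2) of the basis (e1, e2) of R^2, as coefficient rows.

    With E = [e1 e2] (basis vectors as columns), f^i(e_j) = delta^i_j means
    the f^i are the rows of E^{-1}; for a 2x2 matrix that inverse is explicit.
    """
    det = Fraction(e1[0] * e2[1] - e2[0] * e1[1])
    if det == 0:
        raise ValueError("e1 and e2 are not a basis")
    f1 = (e2[1] / det, -e2[0] / det)
    f2 = (-e1[1] / det, e1[0] / det)
    return f1, f2


def act(f, v):
    """Apply a functional, stored as its row of coefficients, to a vector."""
    return sum(a * b for a, b in zip(f, v))


e1, e2 = (2, 1), (1, 1)  # a non-orthogonal basis, chosen for illustration
f1, f2 = dual_basis_2d(e1, e2)

v = (7, 4)
v1, v2 = act(f1, v), act(f2, v)  # components v^1 = f^1(v), v^2 = f^2(v)
print(v1, v2)  # prints: 3 1

# Reassembling v^1 e_1 + v^2 e_2 gives back v.
assert tuple(v1 * a + v2 * b for a, b in zip(e1, e2)) == v
```

Since this basis is not orthonormal, the plain dot product ##\mathbf{v} \cdot \mathbf{e}_1 = 2 \cdot 7 + 1 \cdot 4 = 18## is not the component ##v^1 = 3##.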

You might be wondering, then, how do the ##{\mathbf{e}_i}^* \in V## we introduced right back at the beginning relate to the ##\mathbf{f}^i \in V^*## in the dual space? Both of them "act" on vectors, and give back the ##i^{\mathrm{th}}## component of the vector, except in the first case the ##{\mathbf{e}_i}^*## live in an inner-product space ##V## and act via the inner product, whilst in the second case the ##\mathbf{f}^i## live in ##V^*## and act as functionals.

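The answer is that if ##V## is an inner-product space, the ##{\mathbf{e}_i}^*## can be defined in any dimension by means of the Riesz representation theorem. Any vector ##\mathbf{u} \in V## gives a linear functional ##\boldsymbol{\omega}_{\mathbf{u}} \in V^*## defined by$$\boldsymbol{\omega}_{\mathbf{u}}(\mathbf{v}) = \mathbf{u} \cdot \mathbf{v} \quad \text{for all } \mathbf{v} \in V$$and the theorem states that every ##\boldsymbol{\omega} \in V^*## arises in this way from exactly one ##\mathbf{u} \in V##. In other words, "##\boldsymbol{\omega}_{\mathbf{u}}## acts on ##\mathbf{v}## just like ##\mathbf{u}## acts on ##\mathbf{v}##", and the map ##\varphi: V \rightarrow V^*## which takes ##\mathbf{u} \mapsto \boldsymbol{\omega}_{\mathbf{u}}## is a bijection (in fact a linear isomorphism). With this theory in mind, maybe you have now noticed that$$\mathbf{f}^i = \varphi({\mathbf{e}_i}^*)$$i.e. that ##\mathbf{f}^i## is the so-called dual of ##{\mathbf{e}_i}^*##: ##\varphi({\mathbf{e}_i}^*)## and ##\mathbf{f}^i## agree on every basis vector ##\mathbf{e}_j##, because ##{\mathbf{e}_i}^* \cdot \mathbf{e}_j = \delta^i_j##, and two linear maps that agree on a basis are equal. Conversely, when there is no cross product to build them from, this is how the starred vectors are defined: ##{\mathbf{e}_i}^* = \varphi^{-1}(\mathbf{f}^i)##.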
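One way to compute ##{\mathbf{e}_i}^* = \varphi^{-1}(\mathbf{f}^i)## using nothing but the inner product (a standard linear-algebra construction, not taken from the discussion above) is to expand ##{\mathbf{e}_i}^* = \sum_k c_{ik} \mathbf{e}_k## and demand ##{\mathbf{e}_i}^* \cdot \mathbf{e}_j = \delta^i_j##; this forces ##C = G^{-1}##, where ##G_{kj} = \mathbf{e}_k \cdot \mathbf{e}_j## is the Gram matrix. The Python sketch below assumes vectors in standard coordinates of ##\mathbb{R}^3## with the usual dot product, checks that the result matches the cross-product formula from the start of the post, and checks that dotting with ##{\mathbf{e}_i}^*## returns the components. All helper names and the basis values are illustrative.

```python
from fractions import Fraction


def inverse(M):
    """Exact Gauss-Jordan inverse of a square matrix given as a list of rows."""
    n = len(M)
    A = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(M)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if A[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular matrix")
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [x / p for x in A[col]]
        for r in range(n):
            if r != col:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return [row[n:] for row in A]


def dot(u, v):
    """The usual dot product, used here as the inner product."""
    return sum(a * b for a, b in zip(u, v))


def cross(u, v):
    """Cross product u x v of two 3-vectors (only used for the comparison)."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def starred_basis(basis):
    """e_i^* = sum_k (G^{-1})_{ik} e_k with Gram matrix G_{kj} = e_k . e_j.

    Then e_i^* . e_j = (G^{-1} G)_{ij} = delta_ij, so v -> e_i^* . v is exactly f^i.
    Only the inner product is used, so this works in any dimension.
    """
    G = [[dot(a, b) for b in basis] for a in basis]
    G_inv = inverse(G)
    n, dim = len(basis), len(basis[0])
    return [tuple(sum(G_inv[i][k] * basis[k][c] for k in range(n)) for c in range(dim))
            for i in range(n)]


basis = [(1, 0, 0), (1, 2, 0), (0, 1, 3)]
stars = starred_basis(basis)

# Same vectors as the cross-product formula (cyclic: y x z, z x x, x x y).
triple = dot(basis[0], cross(basis[1], basis[2]))
by_cross = [tuple(Fraction(c, triple) for c in cross(basis[(i + 1) % 3], basis[(i + 2) % 3]))
            for i in range(3)]
print(stars == by_cross)  # True

# f^i = phi(e_i^*): dotting with e_i^* returns the i-th component v^i.
v = tuple(2 * a - 1 * b + 5 * c for a, b, c in zip(*basis))
assert [dot(s, v) for s in stars] == [2, -1, 5]
```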

[It should be noted that a vector space ##V## is not necessarily an inner-product space, and in such cases you can only work with the ##\mathbf{f}^i## and cannot define the ##{\mathbf{e}_i}^*##.]
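A small numerical illustration of this point, assuming ##V = \mathbb{R}^2## with a hypothetical second inner product ##\langle \mathbf{u}, \mathbf{v} \rangle_w = u_1 v_1 + 4 u_2 v_2## alongside the usual dot product: the dual basis ##\mathbf{f}^i## is fixed by ##\mathbf{f}^i(\mathbf{e}_j) = \delta^i_j## alone, but the vectors ##{\mathbf{e}_i}^*## that represent it change with the inner product, so they only make sense once one has been chosen. The starred vectors are computed from the ##2 \times 2## Gram matrix; the basis and all function names are illustrative.

```python
from fractions import Fraction


def starred_2d(e1, e2, inner):
    """Vectors e_i^* with inner(e_i^*, e_j) = delta_ij, from the 2x2 Gram matrix."""
    g11, g12, g22 = inner(e1, e1), inner(e1, e2), inner(e2, e2)
    det = Fraction(g11 * g22 - g12 * g12)
    # Rows of G^{-1} are the coefficients of e_1^* and e_2^* in the basis (e1, e2).
    coeffs = [(g22 / det, -g12 / det), (-g12 / det, g11 / det)]
    return [tuple(a * x + b * y for x, y in zip(e1, e2)) for a, b in coeffs]


def standard(u, v):
    """The usual dot product on R^2."""
    return u[0] * v[0] + u[1] * v[1]


def weighted(u, v):
    """A hypothetical second inner product on R^2, with weights (1, 4)."""
    return u[0] * v[0] + 4 * u[1] * v[1]


def act(f, v):
    """Apply a functional, stored as its row of coefficients, to a vector."""
    return f[0] * v[0] + f[1] * v[1]


e1, e2 = (2, 1), (1, 1)
f = [(1, -1), (-1, 2)]  # dual basis of (e1, e2): needs no inner product at all
assert all(act(f[i], e) == int(i == j) for i in range(2) for j, e in enumerate((e1, e2)))

stars_std = starred_2d(e1, e2, standard)  # (1, -1) and (-1, 2)
stars_w = starred_2d(e1, e2, weighted)    # (1, -1/4) and (-1, 1/2)
assert stars_std != stars_w  # the starred vectors depend on the inner product...

v = (7, 4)
for inner, stars in ((standard, stars_std), (weighted, stars_w)):
    # ...but inner(e_i^*, v) always reproduces f^i(v) = v^i.
    assert all(inner(s, v) == act(fi, v) for s, fi in zip(stars, f))
```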