I'm trying to decide on a method for calculating a weighted mean for my data.

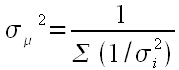
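For reference, here is a minimal Python sketch of the inverse-variance weighted mean. It assumes each measurement x_i has a quoted standard deviation σ_i and that the weights are w_i = 1/σ_i². The function name and the sample numbers are hypothetical and do not come from Bevington.

```python
def weighted_mean(x, sigma):
    """Inverse-variance weighted mean, assuming weights w_i = 1/sigma_i**2."""
    if not x or len(x) != len(sigma):
        raise ValueError("x and sigma must be non-empty and the same length")
    w = [1.0 / s**2 for s in sigma]  # assumed weighting: w_i = 1/sigma_i^2
    return sum(wi * xi for wi, xi in zip(w, x)) / sum(w)

# Hypothetical example: three measurements with different uncertainties
x = [10.1, 9.8, 10.4]
sigma = [0.2, 0.1, 0.4]
print(weighted_mean(x, sigma))
```

With equal σ_i this reduces to the ordinary arithmetic mean.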

In Bevington's *Data Reduction and Error Analysis for the Physical Sciences* (2nd ed.), Equation 4.19 gives the variance of the weighted mean as:

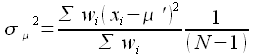
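Assuming Equation 4.19 is the usual propagation-of-error result σ_μ² = 1/Σ(1/σ_i²), it can be sketched as follows (the function name and data are hypothetical). Note that this value depends only on the quoted σ_i and never on the measured values x_i.

```python
import math

def internal_variance(sigma):
    """Variance of the weighted mean from the quoted uncertainties alone:
    sigma_mu^2 = 1 / sum(1/sigma_i^2), assuming w_i = 1/sigma_i^2."""
    if not sigma:
        raise ValueError("sigma must be non-empty")
    return 1.0 / sum(1.0 / s**2 for s in sigma)

sigma = [0.2, 0.1, 0.4]
var_int = internal_variance(sigma)
# Variance of the weighted mean and the corresponding standard deviation
print(var_int, math.sqrt(var_int))
```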

However, Bevington also suggests the use of Equation 4.22 substituted into 4.23 to calculate the variance of the weighted mean:

These two formula are not equivalent (even if weight is defined as the square of the inverse standard deviation).

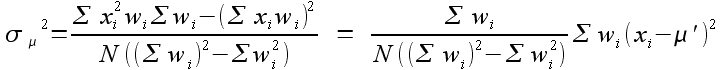
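One common way to see why two such results can differ is the following. Assume, and check against the book, that the Equation 4.22/4.23 combination amounts to the usual data-based ("external") estimate σ_μ² ≈ Σw_i(x_i − μ')² / [(N − 1)Σw_i], with w_i = 1/σ_i². That estimate equals the Equation 4.19-style value 1/Σw_i multiplied by χ²/(N − 1), where χ² = Σ(x_i − μ')²/σ_i². The two therefore agree only when the actual scatter of the data matches the quoted σ_i. The function names and data below are hypothetical.

```python
def external_variance(x, sigma):
    """Data-based variance of the weighted mean (assumed form):
    sum(w_i*(x_i - mu)^2) / ((N - 1) * sum(w_i)), with w_i = 1/sigma_i^2."""
    n = len(x)
    if n < 2 or len(sigma) != n:
        raise ValueError("need at least two points and a matching sigma list")
    w = [1.0 / s**2 for s in sigma]
    sw = sum(w)
    mu = sum(wi * xi for wi, xi in zip(w, x)) / sw  # weighted mean
    return sum(wi * (xi - mu)**2 for wi, xi in zip(w, x)) / ((n - 1) * sw)

def reduced_chi2(x, sigma):
    """chi^2 / (N - 1) of the data about their weighted mean."""
    w = [1.0 / s**2 for s in sigma]
    mu = sum(wi * xi for wi, xi in zip(w, x)) / sum(w)
    chi2 = sum(wi * (xi - mu)**2 for wi, xi in zip(w, x))
    return chi2 / (len(x) - 1)

x = [10.1, 9.8, 10.4]
sigma = [0.2, 0.1, 0.4]
var_int = 1.0 / sum(1.0 / s**2 for s in sigma)  # Eq. 4.19-style value
var_ext = external_variance(x, sigma)
# The ratio equals the reduced chi-square, so the estimates agree only when it is ~1
print(var_ext / var_int, reduced_chi2(x, sigma))
```

The first estimate reflects only the quoted uncertainties, while the second also reflects how much the x_i actually scatter. Rescaling every σ_i by the same factor changes the first but leaves the second unchanged.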

To muddy the waters even further, a coworker has suggested the following variance calculation:

Could someone explain why these formulae differ when all of them are meant to give the standard deviation of the weighted mean? Do they serve different purposes?
