TimeRip496

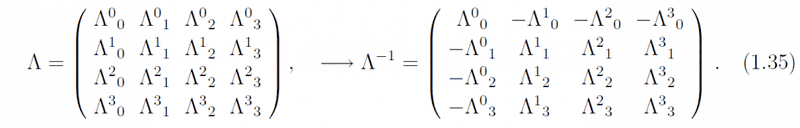

Compare this with the definition of the inverse transformation $\Lambda^{-1}$:

$$\Lambda^{-1}\Lambda = I \quad\text{or}\quad (\Lambda^{-1})^\alpha{}_\nu\,\Lambda^\nu{}_\beta = \delta^\alpha{}_\beta, \tag{1.33}$$

where $I$ is the 4×4 identity matrix. The indices of $\Lambda^{-1}$ are a superscript for the first and a subscript for the second, as before, and the matrix product is formed as usual by summing over the second index of the first matrix and the first index of the second matrix. We see that the inverse matrix of $\Lambda$ is obtained by

$$(\Lambda^{-1})^\alpha{}_\nu = \Lambda_\nu{}^\alpha, \tag{1.34}$$

which means that one simply has to change the sign of the components for which only one of the indices is zero (namely, $\Lambda^0{}_i$ and $\Lambda^i{}_0$) and then transpose it:

Source: http://epx.phys.tohoku.ac.jp/~yhitoshi/particleweb/ptest-1.pdf - Page 12
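A quick numerical check of the quoted rule, as a minimal sketch. It assumes the metric signature (+,−,−,−), which may or may not match the notes, and builds an example Lorentz transformation from a boost along x followed by a rotation about z (both chosen only for illustration; the helpers `boost_x`, `rotation_z`, `lorentz_inverse` and `is_identity` are hypothetical names, not from the notes). It confirms that "transpose, then flip the sign of the $\Lambda^0{}_i$ and $\Lambda^i{}_0$ entries" gives a true inverse, while a plain transpose does not.

```python
import math

# Assumed metric signature (+,-,-,-): eta = diag(1, -1, -1, -1).
# For a diagonal metric with entries +-1, eta^{aa} equals eta_{aa}.
ETA = [1.0, -1.0, -1.0, -1.0]


def matmul(a, b):
    """4x4 product: sum over the second index of a and the first index of b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def is_identity(m, tol=1e-12):
    """True if m equals the 4x4 identity matrix within tol."""
    return all(abs(m[i][j] - (1.0 if i == j else 0.0)) < tol
               for i in range(4) for j in range(4))


def boost_x(beta):
    """Hypothetical example: Lambda^mu_nu for a boost with speed beta*c along x."""
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    return [[gamma, -gamma * beta, 0.0, 0.0],
            [-gamma * beta, gamma, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_z(theta):
    """Hypothetical example: Lambda^mu_nu for a rotation by theta about z."""
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0, 0.0],
            [0.0, c, -s, 0.0],
            [0.0, s, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def lorentz_inverse(lam):
    """(Lambda^-1)^a_n = eta_nn * eta^aa * Lambda^n_a  (no sum over n or a).

    Equivalent to: transpose, then flip the sign of every entry with
    exactly one index equal to 0 (the Lambda^0_i and Lambda^i_0 entries).
    """
    return [[ETA[n] * ETA[a] * lam[n][a] for n in range(4)] for a in range(4)]


def transpose(m):
    """Plain matrix transpose, with no sign changes."""
    return [[m[j][i] for j in range(4)] for i in range(4)]


# Example Lorentz transformation: boost along x first, then rotate about z.
lam = matmul(rotation_z(0.3), boost_x(0.6))

sign_flip_ok = is_identity(matmul(lorentz_inverse(lam), lam))  # rule from the notes
plain_transpose_ok = is_identity(matmul(transpose(lam), lam))  # naive guess

print(sign_flip_ok, plain_transpose_ok)  # prints: True False
```

The plain transpose fails because $\Lambda$ is not orthogonal in the Euclidean sense; only the rotation part is. For a pure boost, the sign-flip rule simply turns $\beta$ into $-\beta$, as expected for the inverse boost.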

I understand everything except the bolded part. How does the author know to do that? Even if he meant inverting the matrix in the conventional sense, that doesn't seem to be what happens here.
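A sketch of where (1.34) and the sign-flip rule come from. This assumes, as is standard but not quoted above, that $\Lambda$ preserves the Minkowski metric, $\eta_{\mu\nu}\Lambda^\mu{}_\alpha\Lambda^\nu{}_\beta = \eta_{\alpha\beta}$, with $\eta$ diagonal with entries $\pm1$ (either signature works), and that indices are raised and lowered with $\eta$:

$$
\begin{aligned}
\eta_{\mu\nu}\Lambda^\mu{}_\alpha\Lambda^\nu{}_\beta &= \eta_{\alpha\beta} && \text{(assumed defining property)}\\
\eta^{\gamma\alpha}\eta_{\mu\nu}\Lambda^\mu{}_\alpha\Lambda^\nu{}_\beta &= \eta^{\gamma\alpha}\eta_{\alpha\beta} = \delta^\gamma{}_\beta && \text{(contract with } \eta^{\gamma\alpha}\text{)}\\
\Lambda_\nu{}^\gamma\,\Lambda^\nu{}_\beta &= \delta^\gamma{}_\beta, && \Lambda_\nu{}^\gamma \equiv \eta_{\nu\mu}\Lambda^\mu{}_\alpha\eta^{\alpha\gamma}.
\end{aligned}
$$

Comparing the last line with (1.33), $(\Lambda^{-1})^\gamma{}_\nu\Lambda^\nu{}_\beta = \delta^\gamma{}_\beta$, and using uniqueness of the inverse gives $(\Lambda^{-1})^\gamma{}_\nu = \Lambda_\nu{}^\gamma$, which is (1.34). Since $\eta$ is diagonal, the two metric factors only contribute a sign:

$$
(\Lambda^{-1})^\alpha{}_\nu = \eta_{\nu\nu}\,\eta^{\alpha\alpha}\,\Lambda^\nu{}_\alpha \qquad (\text{no sum}),
$$

where $\eta_{\nu\nu}\eta^{\alpha\alpha} = +1$ if $\alpha$ and $\nu$ are both 0 or both spatial, and $-1$ if exactly one of them is 0. Swapping the index positions is the transpose, and the $-1$ is the sign flip on $\Lambda^0{}_i$ and $\Lambda^i{}_0$. In block form, with $i, j$ spatial:

$$
\Lambda = \begin{pmatrix}\Lambda^0{}_0 & \Lambda^0{}_j\\ \Lambda^i{}_0 & \Lambda^i{}_j\end{pmatrix}
\quad\Longrightarrow\quad
\Lambda^{-1} = \begin{pmatrix}\Lambda^0{}_0 & -\Lambda^j{}_0\\ -\Lambda^0{}_i & \Lambda^j{}_i\end{pmatrix}.
$$

So this is not a general recipe for inverting a 4×4 matrix; under the assumption above, it works only because $\Lambda$ satisfies the metric condition.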