Tcbovb

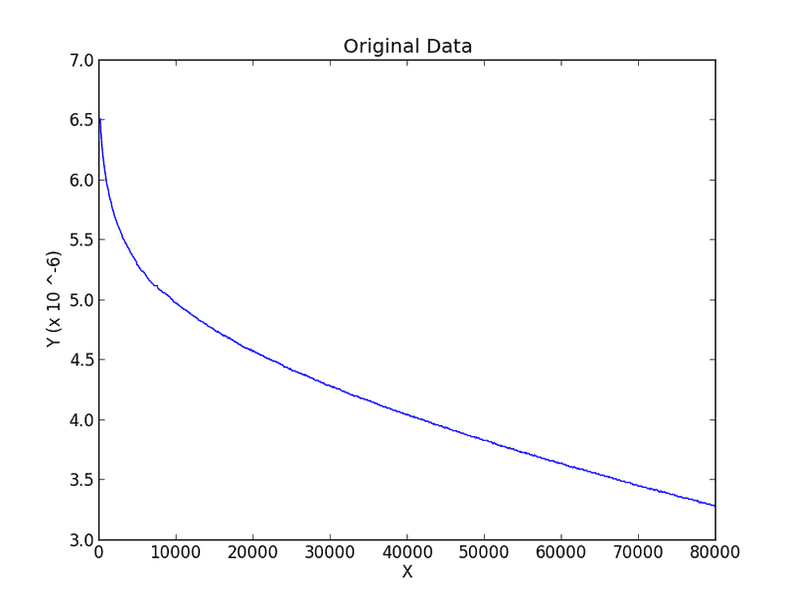

I have some experimental data (real data, not a hypothetical example) that has a downward-sloping shape.

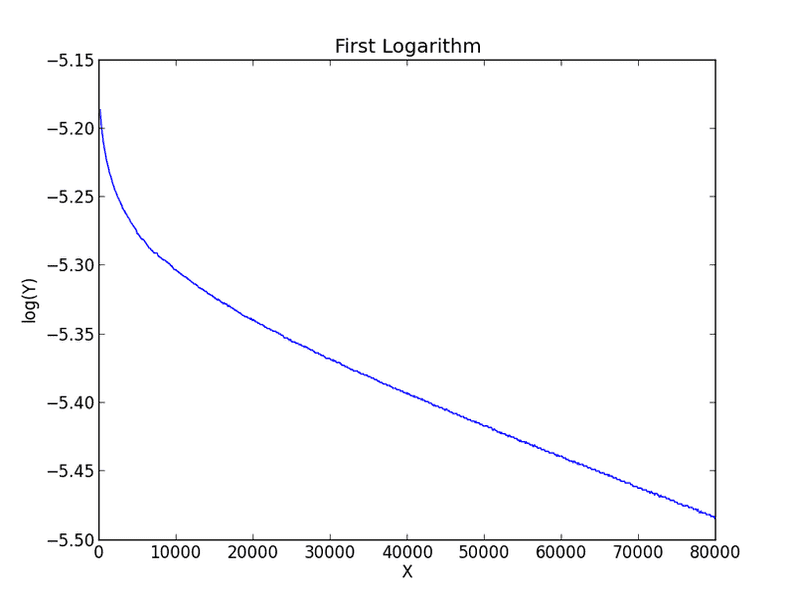

A sharp decrease initially, before settling to what looks almost like a downward linear slope. To investigate further, I decided to take the base 10 log of the y-axis data. The plot looked almost the same:

At first I thought this was a mistake, but I checked over the values and the code I used to generate the plot, and it seems fine. If the right-hand part is a slowly decaying exponential, it will appear approximately linear on both linear and logarithmic axes, so maybe that is the explanation. I tried to see if I could get the curves to overlap by scaling and shifting all the values equally, but that isn't quite possible (as one would expect). However, these curves do seem to be "similar" in a sense.
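To spell out that possible explanation, here is a rough sketch. It assumes the right-hand part has the form $y = A e^{-kx}$ with $kx \ll 1$ over the plotted range; the symbols $A$ and $k$ are placeholders for illustration, not values fitted to my data.

$$
y(x) = A e^{-kx} \approx A\,(1 - kx) \qquad (kx \ll 1)
$$

$$
\log_{10} y(x) = \log_{10} A - \frac{k}{\ln 10}\,x
$$

The second expression is exactly linear in $x$ and the first is approximately linear, so under this assumption both plots would show a straight, gently descending tail.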

So I then took the log of the absolute values of the logarithmic data. Doing this over and over again produced the same familiar curve, though sometimes it was mirrored, becoming an increasing function. After applying 10 logs to the original data [log(log(log ... log(Y)))], the curve finally breaks down, in part because the data from the previous step (9 logs) passes through Y = 0.
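For anyone who wants to reproduce the procedure, here is a minimal sketch of what I mean by repeatedly taking the log of the absolute values. This is not my actual analysis code, and the data in it is a made-up stand-in (a sharp initial drop plus a slowly decaying tail, sampled every 100 in x); the function names `log10_abs`, `iterate_log10_abs` and `rescale` are hypothetical helpers. Each stage is rescaled to the range [0, 1] so the shapes can be compared regardless of offset and scale.

```python
import math


def log10_abs(values):
    """Return log10(|v|) for each value; raises ValueError if a value is 0."""
    return [math.log10(abs(v)) for v in values]


def iterate_log10_abs(values, n):
    """Return [values, log(values), log(log(values)), ...] with up to n log steps.

    Stops early if the current stage contains an exact zero, since
    log10(0) is undefined.
    """
    stages = [list(values)]
    for _ in range(n):
        current = stages[-1]
        if any(v == 0 for v in current):
            break
        stages.append(log10_abs(current))
    return stages


def rescale(values):
    """Shift and scale values so they span [0, 1] (min-max normalisation)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical stand-in data, NOT the real measurements:
# a fast-decaying term plus a slowly decaying exponential tail.
xs = [100 * i for i in range(1, 51)]
ys = [5000.0 * math.exp(-x / 300.0) + 2000.0 * math.exp(-x / 50000.0) for x in xs]

stages = iterate_log10_abs(ys, 3)        # original data plus three log steps
shapes = [rescale(s) for s in stages]    # each stage mapped onto [0, 1]
```

Plotting each entry of `shapes` against `xs` gives the curves I am comparing by eye. Note that taking the absolute value of a stage whose values are all negative reverses their order before the next log, which would account for the mirrored curves.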

What I am wondering is: what is the name for a function whose logarithm appears to have the same shape as the function itself? Which functions satisfy this condition, and what is this kind of similarity called?

Perhaps this is just the result of something straightforward that I haven't considered yet. At the moment it just seems so weird.

Attached are a few more plots. Data points are spaced 100 units apart on the X axis. Some consecutive data points have the same Y values, hence the square corners that seem to appear in the plots.

Hopefully this is the right forum for this; I'm not really sure where "unexpected results from data analysis leading to mathematical curiosity" fits in.

Tcb
