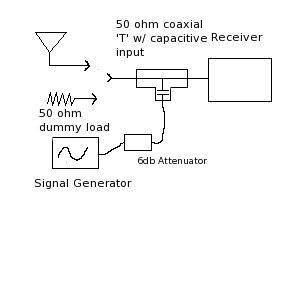

I apologize for not being clearer, but the figure of merit for the test is just the ratio of two measurements. The first test is conducted with a 50 ohm dummy load connected to the T. A signal is then applied through the capacitive coupling to the receiver, and the level at which it breaks squelch is recorded. The second test is identical, except that the antenna is connected in place of the dummy load. The figure of merit is the ratio of test 1 to test 2, so I would expect the accuracy of the test signal not to matter: an error in signal amplitude caused by reflections would be the same for test 1 and test 2, so when the ratio is calculated, the error should divide out. After all, the results never account for the loss in the cables, the attenuation, or the insertion loss into the T, so I don't see why an accurate signal level is necessary. The only requirement is that the error is the same for both tests, which I believe it would be regardless of whether or not a 6 dB attenuator is used in series.
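The cancellation argument can be checked with a few lines of arithmetic. Working in dB, the ratio of the two squelch-break levels becomes a difference, so any offset added equally to both readings drops out, while an offset that differs between the two setups does not. A minimal Python sketch; the readings and error values are hypothetical numbers for illustration, not measured data:

```python
def fom_db(threshold1_dbm: float, threshold2_dbm: float) -> float:
    """Figure of merit in dB: test 1 (dummy load) relative to test 2 (antenna)."""
    # A power ratio P1/P2 becomes a difference when both levels are in dBm.
    return threshold1_dbm - threshold2_dbm

# Hypothetical squelch-break readings from the generator dial, in dBm.
t1, t2 = -120.0, -105.0

# An error that is identical in both tests (cable loss, coupling loss,
# a fixed mismatch error) shifts both readings by the same amount...
common_error_db = 3.5
print(fom_db(t1 + common_error_db, t2 + common_error_db))

# ...but if the error differs between the two setups, the difference leaks through.
e1, e2 = 1.0, -2.0
print(fom_db(t1 + e1, t2 + e2))
```

The sketch only restates the algebra: the argument holds exactly as far as the generator error really is identical in both tests.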

Anyway, I guess my broader question is: how does the 6 dB attenuator match the capacitive input of the T to the signal generator? It's just a 50 ohm resistive network, so it doesn't transform the impedance, although it does attenuate the reflected signal by 12 dB (6 dB in each direction).
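One way to see what the pad does is to cascade its ABCD matrix with the load and compute the impedance the generator sees. The sketch below assumes a matched, symmetric pi pad built from the standard design equations (a T pad behaves the same way) and a purely capacitive load; the 5 pF and 155 MHz values are hypothetical placeholders for the coupling capacitance and test frequency. Any lossless reactive load has |Γ| = 1, and a matched pad with voltage ratio K reduces that to |Γ|/K², which is 12 dB down for a 6 dB pad regardless of the capacitance value.

```python
import math

Z0 = 50.0  # system impedance, ohms

def pi_pad(atten_db: float, z0: float = Z0):
    """Resistor values (shunt, series) for a matched, symmetric pi attenuator."""
    k = 10 ** (atten_db / 20)  # voltage ratio of the pad
    r_shunt = z0 * (k + 1) / (k - 1)
    r_series = z0 * (k * k - 1) / (2 * k)
    return r_shunt, r_series

def abcd_shunt(z):
    # ABCD matrix of an impedance z connected across the line.
    return ((1, 0), (1 / z, 1))

def abcd_series(z):
    # ABCD matrix of an impedance z in series with the line.
    return ((1, z), (0, 1))

def cascade(m, n):
    # Matrix product m @ n, i.e. network m followed by network n.
    (a, b), (c, d) = m
    (e, f), (g, h) = n
    return ((a * e + b * g, a * f + b * h), (c * e + d * g, c * f + d * h))

def input_impedance(abcd, z_load):
    (a, b), (c, d) = abcd
    return (a * z_load + b) / (c * z_load + d)

def gamma(z, z0: float = Z0):
    # Reflection coefficient of impedance z referred to z0.
    return (z - z0) / (z + z0)

# Hypothetical coupling capacitance and test frequency, for illustration only.
f_hz, c_farad = 155e6, 5e-12
z_cap = 1 / (1j * 2 * math.pi * f_hz * c_farad)  # purely reactive load

r_sh, r_se = pi_pad(6.0)
pad = cascade(cascade(abcd_shunt(r_sh), abcd_series(r_se)), abcd_shunt(r_sh))
z_in = input_impedance(pad, z_cap)

print(f"pad: {r_sh:.1f} ohm shunt, {r_se:.2f} ohm series")
print(f"|Gamma| bare load: {abs(gamma(z_cap)):.3f}")
print(f"|Gamma| thru pad : {abs(gamma(z_in)):.3f} "
      f"({20 * math.log10(abs(gamma(z_in))):.1f} dB)")
print(f"Z_in thru pad    : {z_in.real:.1f} {z_in.imag:+.1f}j ohm")
```

Z_in comes out partly reactive: the pad does not cancel the reactance the way a matching network would. It only dilutes the reflection, which is consistent with the observation in the question.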

Also, what's so special about a 6 dB attenuator as opposed to, say, 3 dB or 10 dB?
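For comparing pad values, the worst case follows directly from the round trip: with a fully reflecting load, the generator sees a return loss of twice the pad value. A short Python sketch tabulating that worst case, assuming ideal matched pads and |Γ_L| = 1:

```python
def worst_case_input_match(atten_db: float):
    """Worst-case |Gamma|, return loss and VSWR seen by the generator when an
    ideal matched pad is terminated in a fully reflecting load (|Gamma_L| = 1)."""
    gamma_in = 10 ** (-2 * atten_db / 20)  # the reflection passes through the pad twice
    return_loss_db = 2 * atten_db
    vswr = (1 + gamma_in) / (1 - gamma_in)
    return gamma_in, return_loss_db, vswr

print(f"{'pad':>7} {'|Gamma|':>8} {'RL':>7} {'VSWR':>6} {'signal lost':>12}")
for a in (3.0, 6.0, 10.0, 20.0):
    g, rl, s = worst_case_input_match(a)
    print(f"{a:4.0f} dB {g:8.3f} {rl:4.0f} dB {s:6.2f} {a:9.0f} dB")
```

The trade-off is that each dB of pad costs a dB of available signal level. Nothing in the math singles out 6 dB; it simply lands at a worst-case VSWR of about 1.7:1, between roughly 3:1 for a 3 dB pad and 1.2:1 for a 10 dB pad.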

I would really like to understand the theory behind this, perhaps from a transmission line perspective. I keep being told that "the signal generator wants to see a low impedance, and the 6 dB attenuator makes the load appear like a 50 ohm impedance," but no one has been able to explain the math or theory behind it to me.
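From the transmission line side, one reading of "the generator wants to see 50 ohms" concerns level accuracy. Assume an ideal generator modeled as an EMF behind a 50 ohm source resistance, whose displayed level is correct only into a matched 50 ohm load. The voltage at its connector is then (EMF/2)(1 + Γ_L), so the level error is 20·log10|1 + Γ_L|. A Python sketch of the worst-case error for a lossless reactive load with and without a 6 dB pad; real generators are not exactly 50 ohms, which adds source-mismatch uncertainty not modeled here:

```python
import math

def level_error_db(gamma_load: complex) -> float:
    # 50-ohm source: V_out = (V_emf / 2) * (1 + Gamma_load); the dial assumes Gamma_load = 0.
    return 20 * math.log10(abs(1 + gamma_load))

def worst_case_range_db(gamma_mag: float):
    # Smallest when the reflection returns in antiphase, largest when in phase.
    low = 20 * math.log10(1 - gamma_mag) if gamma_mag < 1 else -math.inf
    high = 20 * math.log10(1 + gamma_mag)
    return low, high

bare = worst_case_range_db(1.0)                 # lossless reactive load, |Gamma| = 1
padded = worst_case_range_db(10 ** (-12 / 20))  # same load seen through a 6 dB pad
print(f"no pad  : {bare[0]:.1f} dB to {bare[1]:+.1f} dB")
print(f"6 dB pad: {padded[0]:.1f} dB to {padded[1]:+.1f} dB")
```

Without the pad the error is unbounded on the low side, since a reflection in antiphase can nearly cancel the output; with it, the error stays within roughly -2.5 dB to +1.9 dB.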

Throughout my career I've found that methods are often outdated. Some arise from a misapplication of theory; others address something that is no longer technologically relevant but persist because their purpose has long been forgotten.
