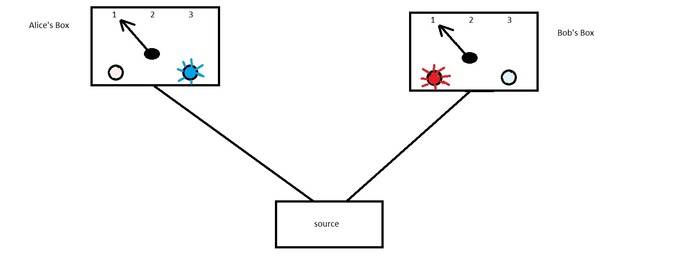

Inspired by stevendaryl's description of an EPR-like setting that doesn't refer to a particle concept, I want to discuss in this thread a generalized form of his setting. It features a class of long-distance correlation experiments but abstracts from all distracting elements of reality and of imagination, allowing the analysis to concentrate on the essentials. Pictorially, the setting is identical to that pictured in stevendaryl's post. But the interpretation of the figure (to be discussed below) is optimized so that one cannot speak of many of the things that usually obscure discussions of nonlocality.

Note that my goal in this discussion is not to prove or disprove local realism in the conventional form, but (in line with the originating thread) to investigate weirdness in quantum mechanics and its dependence on the language chosen, using this specific experimental arrangement.

Please keep this thread free from discussion of other settings for experiments related to nonlocality.

If you think the thread is too long but want to know the outcome from my perspective, you may jump directly to my main conclusions in post #187 (where I conclude that anything nonlocal is due to the intelligence of an observer) and post #197 (where I define a Lorentz-invariant notion of causality sufficient to exclude superluminal signalling but far weaker than the unrealistic causality assumptions made in the derivation of Bell-type theorems).

