jv07cs

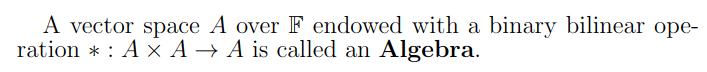

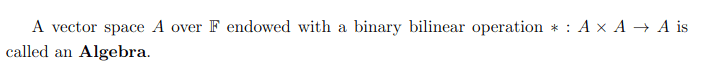

I am currently reading this book on multilinear algebra ("Álgebra Linear e Multilinear" by Rodney Biezuner; I guess it only has a Portuguese edition), and the book defines an algebra as follows:

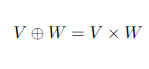

It also defines the direct sum of two vector spaces, say V and W, as the Cartesian product V × W:

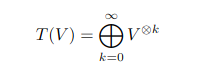
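Here is a minimal sketch of what the Cartesian-product definition amounts to. For illustration only, it assumes V = ℝ² and W = ℝ³, with vectors stored as Python tuples. The names `ds_add` and `ds_scale` are hypothetical. The point is that addition and scalar multiplication on V × W act componentwise, which is (presumably, as in the standard construction) what makes the Cartesian product into the vector space V ⊕ W.

```python
# Hypothetical model: an element of V ⊕ W is a pair (v, w), where v and w are
# tuples of floats (V = R^2 and W = R^3 are assumptions for this example).

def ds_add(x, y):
    """Add two pairs componentwise: (v1, w1) + (v2, w2) = (v1 + v2, w1 + w2)."""
    (v1, w1), (v2, w2) = x, y
    return (tuple(a + b for a, b in zip(v1, v2)),
            tuple(a + b for a, b in zip(w1, w2)))


def ds_scale(c, x):
    """Scale a pair componentwise: c * (v, w) = (c * v, c * w)."""
    v, w = x
    return (tuple(c * a for a in v), tuple(c * a for a in w))
```

For example, `ds_add(((1.0, 2.0), (0.0, 0.0, 1.0)), ((3.0, 4.0), (1.0, 1.0, 1.0)))` gives `((4.0, 6.0), (1.0, 1.0, 2.0))`. Nothing "mixes" between the V slot and the W slot.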

Later on, it defines the tensor algebra of V, call it T(V), as:

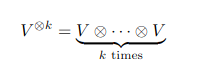

Where

If the tensor product is the binary operation of the algebra T(V), would it have to act on the n-tuples of the Cartesian product of all these tensor spaces? What would the tensor product of two such n-tuples mean? I am quite confused about this.
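In the standard construction (assuming the book follows it, which cannot be confirmed from the images), an element of T(V) is a sequence (t₀, t₁, t₂, …) with tₖ ∈ Tᵏ(V) and only finitely many tₖ nonzero. The product is defined degree by degree and extended bilinearly: the degree-n component of s·t is the sum over i + j = n of sᵢ ⊗ tⱼ. Below is a minimal Python sketch of that rule. It assumes V has a finite basis e₀, …, e_{d−1} and stores each homogeneous piece by its coefficients on the basis tensors e_{i₁} ⊗ … ⊗ e_{iₖ}. The names `add`, `mul` and `_clean` are hypothetical.

```python
from collections import defaultdict

# Assumed representation: a homogeneous tensor of degree k is a dict
# {(i_1, ..., i_k): coefficient}, meaning the sum of coefficient * e_{i_1} ⊗ ... ⊗ e_{i_k}.
# The empty tuple () is the basis of degree 0 (the scalars).
# An element of T(V) is a dict {degree: homogeneous tensor} with finitely many entries,
# i.e. a "tuple" (t_0, t_1, t_2, ...) that is zero from some degree on.


def _clean(x):
    """Drop zero coefficients and empty degrees, returning plain dicts."""
    result = {}
    for k, comp in x.items():
        kept = {idx: c for idx, c in comp.items() if c != 0}
        if kept:
            result[k] = kept
    return result


def add(s, t):
    """Componentwise sum, coming from the direct-sum (Cartesian product) structure."""
    out = defaultdict(lambda: defaultdict(float))
    for x in (s, t):
        for k, comp in x.items():
            for idx, c in comp.items():
                out[k][idx] += c
    return _clean(out)


def mul(s, t):
    """Product on T(V): the degree-n part is the sum over i + j = n of s_i ⊗ t_j."""
    out = defaultdict(lambda: defaultdict(float))
    for i, s_i in s.items():
        for j, t_j in t.items():
            for idx1, c1 in s_i.items():
                for idx2, c2 in t_j.items():
                    # (e_idx1) ⊗ (e_idx2) is the basis tensor with concatenated indices.
                    out[i + j][idx1 + idx2] += c1 * c2
    return _clean(out)
```

For example, with s = 1 + e₀ (`{0: {(): 1.0}, 1: {(0,): 1.0}}`) and t = e₁ (`{1: {(1,): 1.0}}`), `mul(s, t)` gives e₁ + e₀ ⊗ e₁ and `mul(t, s)` gives e₁ + e₁ ⊗ e₀. So the tuples are multiplied by tensoring every pair of components and collecting the results by total degree, and the product is not commutative.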