Math Amateur

I am reading Bruce N. Cooperstein's book: Advanced Linear Algebra (Second Edition) ... ...

I am focused on Section 10.1 Introduction to Tensor Products ... ...

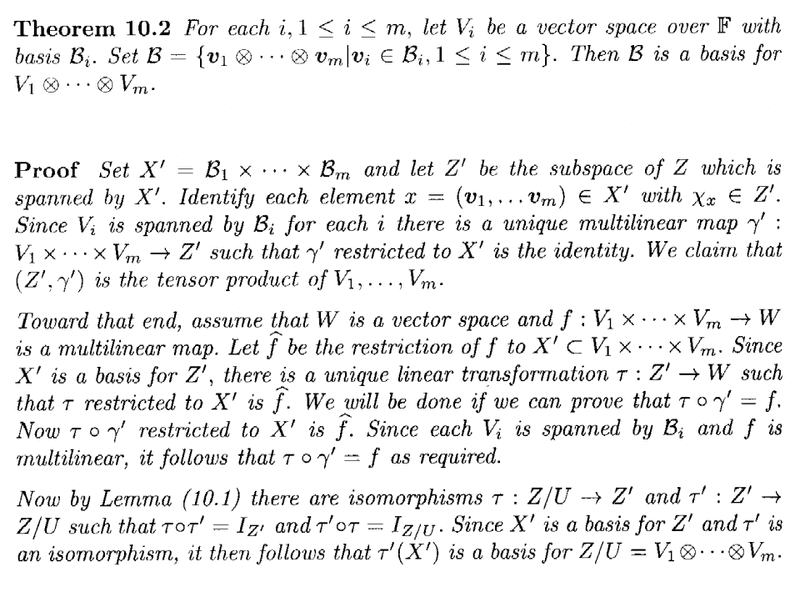

I need help with another aspect of the proof of Theorem 10.2 regarding the basis of a tensor product ... ...

Theorem 10.2 reads as follows:

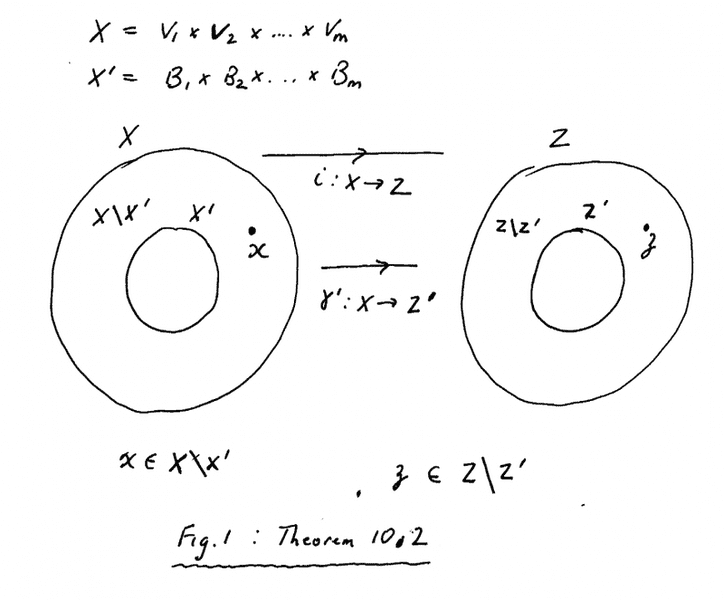

A diagram involving the mappings $\iota$ and $\gamma'$ is as follows:

My questions are as follows:

Question 1

How do we know that there exists a multilinear map $\gamma' \colon X \longrightarrow Z'$?

Question 2

What happens (what are the 'mechanics') under the mapping $\gamma'$ to the elements in $X \setminus X'$ (that is, $X - X'$)? How can we be sure that these elements end up in $Z'$ and not in $Z \setminus Z'$? (See Figure 1 above.)

Hope someone can help ...

Peter