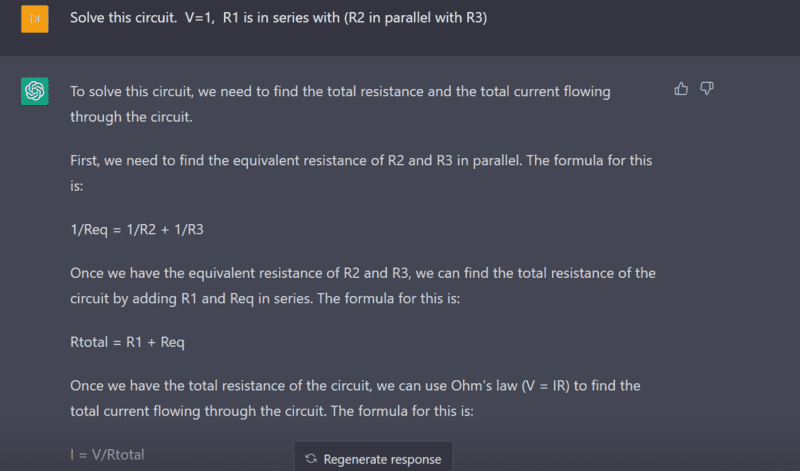

I've been experimenting with ChatGPT. Some results are good, some very, very bad. I think examples can help expose the properties of this AI. Maybe you can post some of your favorite examples and tell us what they reveal about it.

(I had problems with copy/paste of text and formatting, so I'm posting my examples as screenshots.)

That is a promising start.

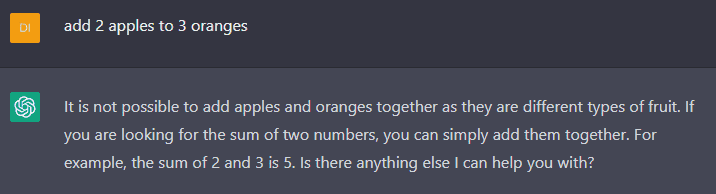

But then I provided values V=1, R1=1, R2=2, R3=3 and asked for the value of I. At first, it said 1/2 A. I said, "Not correct." It said, "Sorry, 0.4 A." I said, "No, the right answer is 0.45." It said, "Sorry, you're right." What the heck was going on there? I did more tests:

But 34893*39427 is actually 1,375,726,311, and 1+((2*3)/(2+3)) is actually 2.2.
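A quick way to check these numbers is to compute them exactly. The sketch below assumes the circuit is R1 in series with the parallel pair R2 and R3, which is the topology implied by the expression 1+((2*3)/(2+3)); the variable names are illustrative, not taken from the screenshots.

```python
from fractions import Fraction

# Values from the post.
V, R1, R2, R3 = 1, 1, 2, 3

# Assumed topology: R1 in series with (R2 || R3), as implied by the
# expression 1+((2*3)/(2+3)). Fraction keeps the arithmetic exact.
r_parallel = Fraction(R2 * R3, R2 + R3)  # R2 || R3 = 6/5 ohm
r_total = R1 + r_parallel                # 11/5 ohm = 2.2 ohm
current = V / r_total                    # Ohm's law: I = V / R = 5/11 A

print(f"R_total = {r_total} ohm = {float(r_total)} ohm")
print(f"I = {current} A ~ {float(current):.4f} A")

# The two integer results from the screenshots, computed exactly.
a, b = 34893, 39427
print(f"{a} + {b} = {a + b:,}")
print(f"{a} * {b} = {a * b:,}")
```

This gives R_total = 11/5 ohm (2.2 ohm) and I = 5/11 A, about 0.4545 A, which rounds to the 0.45 A above. It also confirms 74,320 for the sum and 1,375,726,311 for the product.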

Google recognizes numerical expressions and sends them to a calculator logic engine. It also recognizes geographical questions like "Pizza near Mount Dora FL" and sends them to another specialized logic engine.

ChatGPT works by predicting which words are most likely to come next. AFAIK, that is the only knowledge base it has so far. I expected it to have seen millions of references to 2+2=4 in Internet text but few or no references to 34893+39427, yet it got that sum right. Then it got 34893*39427 wrong, although the wrong answer looked like an attempted guess. So I don't understand how word prediction alone got the sum right but the product wrong.
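To make "word prediction" concrete, here is a drastically simplified sketch: a bigram counter that predicts whichever word most often followed the previous word in its training text. It only illustrates the idea; ChatGPT uses a large neural network over subword tokens with far more context, so this is not how it actually works. The tiny corpus is made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """For each word, count how often each other word follows it."""
    words = text.split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the word that most often followed `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


# Made-up miniature training text; real models see vastly more.
corpus = "2 + 2 = 4 . 2 + 2 = 4 . 3 + 3 = 6 ."
model = train_bigrams(corpus)
print(predict_next(model, "="))  # the word that most often followed "="
```

With only one word of context, this toy predicts "4" after "=" no matter what came before, so it would also answer 4 after "3 + 3 =". It reproduces frequent patterns; it does not calculate.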

Clearly, ChatGPT needs other logic engines beyond word prediction, plus syntactic analysis to figure out which engine to use. One of these days, that will probably be added as a new feature.
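Here is a minimal sketch of that kind of routing, assuming a hypothetical `route` function and a stand-in `fake_language_model` (neither is a real ChatGPT or Google API). Python's `ast.parse` plays the role of the syntactic analysis: if the query parses as a plain arithmetic expression, it is evaluated exactly; anything else, such as "Pizza near Mount Dora FL", falls through to text prediction.

```python
import ast
import operator

# Hypothetical "calculator engine": only these operators are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def _evaluate(node):
    """Evaluate a parsed arithmetic expression; reject anything else."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if (isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError("not a plain arithmetic expression")


def route(query, language_model):
    """Hypothetical router: arithmetic goes to the exact calculator,
    everything else goes to the (stand-in) language model."""
    try:
        tree = ast.parse(query.strip(), mode="eval")  # syntactic analysis
        return "calculator", _evaluate(tree)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return "language_model", language_model(query)


def fake_language_model(query):
    """Stand-in for word prediction; not a real model."""
    return f"<predicted text for {query!r}>"


for q in ["34893*39427", "1+((2*3)/(2+3))", "Pizza near Mount Dora FL"]:
    print(route(q, fake_language_model))
```

Sending "1/0" to the language model on a `ZeroDivisionError` is just one design choice; a real system might report the error instead.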

It also suggests that science and engineering, which rely on equations and graphics, will fare far worse with ChatGPT than fields like law, which is almost entirely textual. Indeed, others have found that ChatGPT is already close to being able to pass the bar exam for lawyers.
