Math Amateur


I am reading Tensor Calculus for Physics by Dwight E. Neuenschwander and am having difficulties in confidently interpreting his use of Dirac Notation in Section 1.9 ...

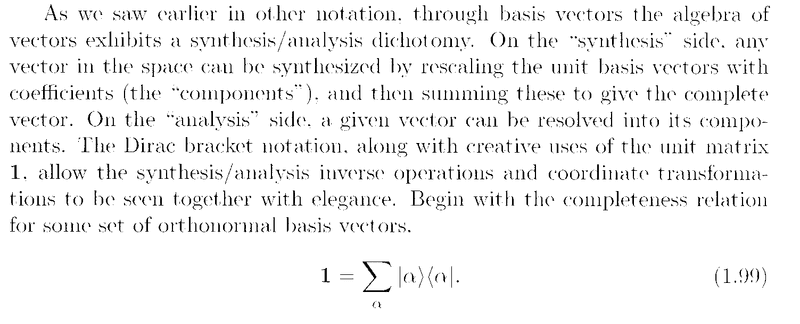

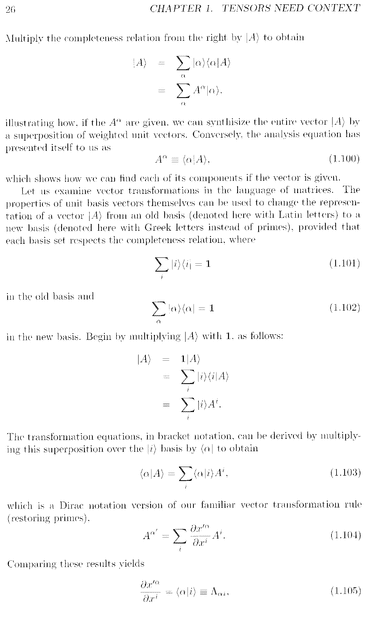

The relevant passage from Section 1.9 is shown in the images above.

I need some help interpreting Neuenschwander's notation in the text above so that I can proceed with confidence. In particular, I am not sure how to interpret him when he writes:

## 1 = \sum_{\alpha} \vert \alpha \rangle \langle \alpha \vert ## ... (1.99)

Am I proceeding correctly when I assume that

## \vert \alpha \rangle = \begin{bmatrix} \alpha^1 \\ \alpha^2 \\ \alpha^3 \end{bmatrix} ##

... and

## \langle \alpha \vert = \begin{bmatrix} \alpha^1 & \alpha^2 & \alpha^3 \end{bmatrix} ##

and when he writes

## \vert A \rangle = \sum_{\alpha} \vert \alpha \rangle \langle \alpha \vert A \rangle ##

... can I assume that it is OK to take

## \vert A \rangle = \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix} ##

so that

## \langle \alpha \vert A \rangle = \begin{bmatrix} \alpha^1 & \alpha^2 & \alpha^3 \end{bmatrix} \begin{bmatrix} A^1 \\ A^2 \\ A^3 \end{bmatrix} ##

... and so on ...

Am I proceeding correctly?

Hope someone can help ...
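To make the question concrete, here is a sketch of one check I could run on (1.99). It rests on an assumption of mine that the text does not state: that ## \alpha ## labels the three vectors of the standard orthonormal basis of real 3-dimensional space, which I will call ## \vert 1 \rangle, \vert 2 \rangle, \vert 3 \rangle ##, and that for real components the bra is simply the transpose of the ket. Under that reading, the first term of the sum would be

## \vert 1 \rangle \langle 1 \vert = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} ##

and adding the corresponding terms for ## \vert 2 \rangle ## and ## \vert 3 \rangle ## would give

## \sum_{\alpha = 1}^{3} \vert \alpha \rangle \langle \alpha \vert = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = 1 ##

With the same assumed basis, ## \langle \alpha \vert A \rangle = A^\alpha ##, so the expansion would read

## \vert A \rangle = \sum_{\alpha = 1}^{3} \vert \alpha \rangle \langle \alpha \vert A \rangle = A^1 \vert 1 \rangle + A^2 \vert 2 \rangle + A^3 \vert 3 \rangle ##

If this reading is the intended one, then ## \alpha ## is a label for a basis vector rather than a vector with its own components ## \alpha^1, \alpha^2, \alpha^3 ##, which is exactly the point I am unsure about.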

Peter
