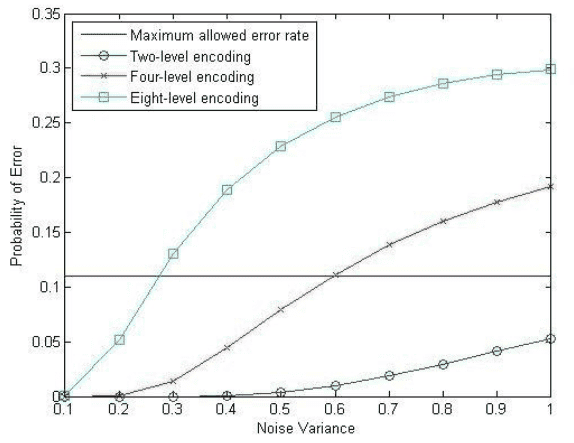

Eq. 4 is the distribution of the received signal ##y##, given that signal ##x_i## was transmitted, where ##x_i## can be any of the ##M## available input signals. So what we want to find is which signal was transmitted, given a received signal, right? To find it, we look for the signal with the maximum conditional (posterior) probability ##p(x_i|y)=\frac{p(y|x_i)\,p(x_i)}{p(y)}##, using Bayes' rule. But since all signals have the same prior probability ##p(x_i)=1/M##, and ##p(y)## is common to all possible transmitted signals, the problem reduces to finding the signal with the maximum ##p(y|x_i)##.
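To see the Bayes argument numerically, here is a minimal sketch, assuming a scalar AWGN channel and a hypothetical two-point constellation ##\{-1,+1\}## with noise variance 1 (these numbers are illustrative, not from the original problem). `map_decision` maximizes ##p(y|x_i)\,p(x_i)##, dropping ##p(y)##; `ml_decision` maximizes ##p(y|x_i)## alone. With equal priors they agree; with unequal priors they can disagree.

```python
import math

def likelihood(y, x, var):
    """p(y|x) for y = x + n, n ~ N(0, var): a Gaussian centred on x."""
    return math.exp(-(y - x) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def map_decision(y, points, priors, var):
    """MAP rule: maximise p(y|x_i) p(x_i); p(y) is common to all x_i, so it is dropped."""
    return max(zip(points, priors), key=lambda xp: likelihood(y, xp[0], var) * xp[1])[0]

def ml_decision(y, points, var):
    """ML rule: maximise the likelihood p(y|x_i) alone."""
    return max(points, key=lambda x: likelihood(y, x, var))

points = [-1.0, 1.0]   # hypothetical constellation
y, var = 0.2, 1.0      # hypothetical received value and noise variance
print(map_decision(y, points, [0.5, 0.5], var), ml_decision(y, points, var))  # 1.0 1.0
print(map_decision(y, points, [0.9, 0.1], var))                               # -1.0
```

With equal priors both rules pick ##+1##; skewing the priors to ##0.9/0.1## makes MAP pick ##-1## even though ##y## is closer to ##+1##, which is why the equal-prior assumption is what lets us drop ##p(x_i)##.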

The received signal is ##y=x_i+n##, where ##n## is additive white Gaussian noise with zero mean and variance ##\sigma_n^2##. So, for a given ##x_i##, ##y## is a Gaussian random variable with mean ##x_i## and variance ##\sigma_n^2##. Eq. 4 describes exactly this. How do we find the transmitted signal that maximizes ##p(y|x_i)##? Simple: pick the signal point closest to ##y##, because the Gaussian density ##p(y|x_i)## decreases monotonically as the distance ##|y-x_i|## grows. To quantify the error probability, you need to follow the steps I showed.
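The "closest point" step can be written out as a short derivation, assuming Eq. 4 has the standard scalar Gaussian form described above (mean ##x_i##, variance ##\sigma_n^2##). Taking the log does not change the maximizer, and the first term does not depend on ##x_i##:

$$p(y|x_i)=\frac{1}{\sqrt{2\pi\sigma_n^2}}\exp\left(-\frac{(y-x_i)^2}{2\sigma_n^2}\right)$$

$$\ln p(y|x_i)=-\frac{1}{2}\ln\left(2\pi\sigma_n^2\right)-\frac{(y-x_i)^2}{2\sigma_n^2}$$

$$\hat{x}=\arg\max_{x_i}\,p(y|x_i)=\arg\min_{x_i}\,(y-x_i)^2=\arg\min_{x_i}\,|y-x_i|$$

For example, with hypothetical points ##\pm 1## and ##y=0.3##: ##(0.3-1)^2=0.49## and ##(0.3+1)^2=1.69##, so the receiver decides ##+1##.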

So, to answer your question: Eq. 4 is the distribution of the received signal ##y##, given that ##x_i## was transmitted. This means that if you transmit ##x_i## over an AWGN channel a large number of times, the received signal observed at the receiver will follow a Gaussian distribution centered at ##x_i##, with variance equal to the noise variance. ##Q\left[\frac{1}{\sqrt{\sigma_n^2}}\right]## is the quantity that measures the probability of error. That is, if we transmit ##x_i## a large number of times, it is the fraction of those transmissions in which ##y## is not closest to ##x_i##, each of which results in an erroneous decision.
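The "transmit many times and count the errors" interpretation can be checked with a quick Monte Carlo sketch. It assumes equiprobable binary antipodal signals ##x\in\{-1,+1\}## (an assumption: with spacing ##d=2## the error probability is ##Q(d/(2\sigma_n))=Q(1/\sigma_n)##, which matches the expression above). The noise level, trial count and seed are arbitrary choices for illustration.

```python
import math
import random

def q_function(x):
    """Gaussian tail probability Q(x) = P(N(0,1) > x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2))

def simulate_error_rate(sigma_n, num_trials=200_000, seed=1):
    """Estimate P(error) for equiprobable x in {-1, +1} sent over y = x + n, n ~ N(0, sigma_n^2)."""
    rng = random.Random(seed)
    points = (-1.0, 1.0)
    errors = 0
    for _ in range(num_trials):
        x = rng.choice(points)                          # equiprobable transmitted symbol
        y = x + rng.gauss(0.0, sigma_n)                 # AWGN channel
        x_hat = min(points, key=lambda s: abs(y - s))   # minimum-distance (ML) decision
        errors += x_hat != x                            # count erroneous decisions
    return errors / num_trials

sigma_n = 0.8
print(f"simulated error rate: {simulate_error_rate(sigma_n):.4f}")
print(f"Q(1/sigma_n):         {q_function(1 / sigma_n):.4f}")
```

The simulated fraction of wrong decisions should land close to ##Q(1/0.8)=Q(1.25)\approx 0.106##; increasing `num_trials` tightens the agreement.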

Hope this helps.