btb4198

I am coding Sobel edge detection in C#.

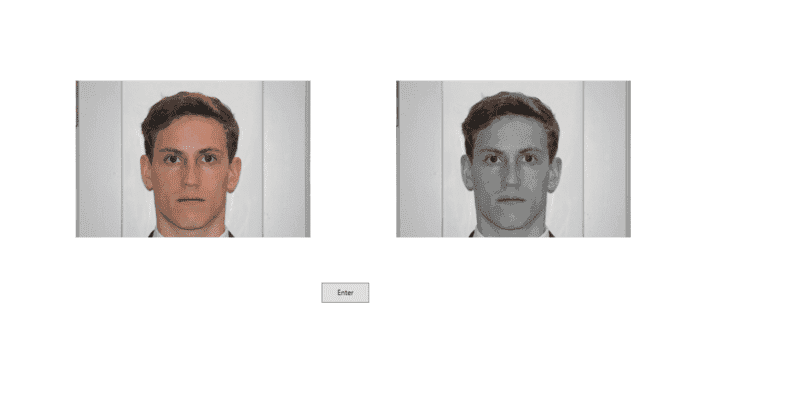

I have a method that converts my image to grayscale. It adds the R, G, and B values, divides the sum by 3, and replaces the R, G, and B values with that average. That seems to be working fine.
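A minimal sketch of that averaging step, assuming the pixels sit in a tightly packed `byte[]` with 4 bytes per pixel in B, G, R, A order (the usual in-memory layout of a 32bpp `Bitmap` read through `LockBits`). The class and method names are hypothetical, not the poster's code.

```csharp
static class GrayscaleSketch
{
    // Averages R, G and B of each pixel and writes that average back into all
    // three channels. Assumes (hypothetically) a tightly packed buffer with
    // bytesPerPixel bytes per pixel; with 4 bytes per pixel the alpha byte is untouched.
    public static void ToGrayscaleInPlace(byte[] pixels, int bytesPerPixel = 4)
    {
        for (int i = 0; i + 2 < pixels.Length; i += bytesPerPixel)
        {
            // byte + byte is evaluated as int in C#, so the sum (up to 765) cannot overflow.
            int sum = pixels[i] + pixels[i + 1] + pixels[i + 2];
            byte gray = (byte)(sum / 3);
            pixels[i] = gray;
            pixels[i + 1] = gray;
            pixels[i + 2] = gray;
        }
    }
}
```

Summing into an `int` before dividing matters: adding the three channels as bytes and storing the result in a `byte` would wrap around for any sum above 255.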

Then I added a Gaussian blur by convolving the grayscale image with this kernel:

```csharp
_kernel = new int[9] { 1, 2, 1, 2, 4, 2, 1, 2, 1 };
```

I guess that part is working...
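A sketch of that blur step, assuming the grayscale image is held in an `int[,]` indexed `[row, column]` and that out-of-range neighbours are clamped to the nearest edge pixel (both are assumptions; the names are hypothetical). One detail it makes explicit: the weights of this kernel sum to 16, so the weighted sum has to be divided by 16, otherwise a flat region of value v comes out as 16v, far outside 0..255.

```csharp
using System;

static class GaussianSketch
{
    // The 3x3 kernel from the post, stored row by row. Its weights sum to 16.
    static readonly int[] Kernel = { 1, 2, 1, 2, 4, 2, 1, 2, 1 };
    const int KernelSum = 16;

    // Blurs gray[row, col]. Neighbours outside the image are replaced by the
    // nearest edge pixel (a hypothetical border policy; others are possible).
    public static int[,] Blur(int[,] gray)
    {
        int rows = gray.GetLength(0), cols = gray.GetLength(1);
        var output = new int[rows, cols];
        for (int r = 0; r < rows; r++)
        {
            for (int c = 0; c < cols; c++)
            {
                int acc = 0;
                for (int kr = -1; kr <= 1; kr++)
                {
                    for (int kc = -1; kc <= 1; kc++)
                    {
                        int rr = Math.Min(Math.Max(r + kr, 0), rows - 1);
                        int cc = Math.Min(Math.Max(c + kc, 0), cols - 1);
                        acc += gray[rr, cc] * Kernel[(kr + 1) * 3 + (kc + 1)];
                    }
                }
                // Normalise by the weight sum so the output stays in the input's range.
                output[r, c] = acc / KernelSum;
            }
        }
        return output;
    }
}
```

Because this kernel is symmetric, applying it with or without flipping (convolution vs. correlation) gives the same result.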

At this point I do two more convolutions, one for the x-axis and one for the y-axis. I perform both separately on the same grayscale, Gaussian-blurred image.

For X, I convolve the image with this kernel:

```csharp
_kernel = new int[9] { -1, 0, 1, -2, 0, 2, -1, 0, 1 };
```

and store the result in a new array called `resultX[,]`.

Then I do another convolution for Y, using this kernel:

```csharp
_kernel = new int[9] { -1, -2, -1, 0, 0, 0, 1, 2, 1 };
```

and store that result in another array, `resultY[,]`.
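A sketch of the two Sobel passes with the kernels above, under the same hypothetical `int[,]` layout indexed `[row, column]`; here border pixels are simply left at 0. What it illustrates is that `resultX` and `resultY` hold signed values (for 8-bit input each can range from -1020 to 1020), so they need to be kept as `int` (or `double`) rather than clamped to a byte at this stage.

```csharp
static class SobelSketch
{
    // Kernels from the post, stored row by row.
    static readonly int[] KernelX = { -1, 0, 1, -2, 0, 2, -1, 0, 1 };
    static readonly int[] KernelY = { -1, -2, -1, 0, 0, 0, 1, 2, 1 };

    // Applies a 3x3 kernel to img[row, col] and returns the raw signed sums.
    // Border rows/columns are left at 0 (a hypothetical simplification).
    // The kernel is not flipped (correlation); for Sobel that only negates
    // each result, which does not change the gradient magnitude.
    static int[,] Apply(int[,] img, int[] kernel)
    {
        int rows = img.GetLength(0), cols = img.GetLength(1);
        var output = new int[rows, cols];
        for (int r = 1; r < rows - 1; r++)
        {
            for (int c = 1; c < cols - 1; c++)
            {
                int acc = 0;
                for (int kr = -1; kr <= 1; kr++)
                    for (int kc = -1; kc <= 1; kc++)
                        acc += img[r + kr, c + kc] * kernel[(kr + 1) * 3 + (kc + 1)];
                // Keep the signed value; clamping to 0..255 here would
                // throw away every negative gradient.
                output[r, c] = acc;
            }
        }
        return output;
    }

    public static int[,] GradientX(int[,] img) => Apply(img, KernelX);
    public static int[,] GradientY(int[,] img) => Apply(img, KernelY);
}
```

For a vertical edge that is dark on the left and bright on the right, `GradientX` is positive; mirror the edge and it becomes negative with the same magnitude.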

Mentor note: added code tags and fixed the problem of disappearing array index that caused subsequent text to be rendered in italics.

Then I do this:

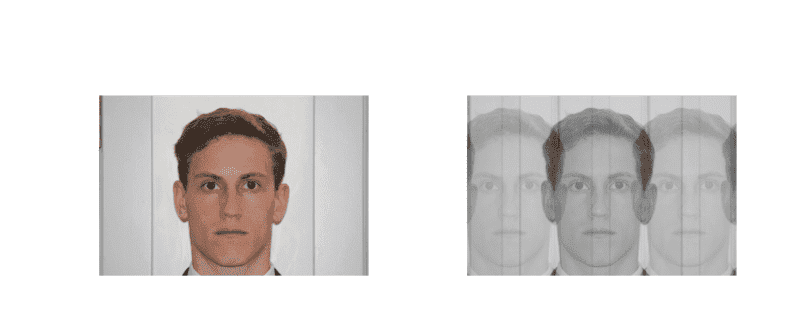

but My image is black :

any Idea of what I am doing wrong?

I checked my convolution method and it is work just like how this guy says it should:

[/i]

I have a method that converts my image to grayscale.

it adds the R G and B values and divides by 3. and replaces the R G and B value out with that same number. That seems to be working fine.

Then I added a gaussian blur to it by using this :

_kernel = new int [9]{1,2,1,2,4,2,1,2,1};

and doing a convolution to my Image that is in grayscale:

I guess that is working ...

At this point I do two more convolutions. One for the x-axis and one for the y axis. I perform both separately on the same image that now has a grayscale and gaussian blur applied to it.

I do a convolution for X using this :

_kernel = new int[9] { -1, 0, 1, -2, 0, 2, -1, 0, 1 };

with my Image and store that result in a new array called resultX[,]

and then I do another convolution for Y

using this:

_kernel = new int[9] { -1, -2, -1, 0, 0, 0, 1, 2, 1 };

and store that result in another array resultY[,]

Mentor note: added code tags and fixed the problem of disappearing array index that caused subsequent text to be rendered in italics.

Then I do this:

C:

int i = 0;

for (int x = 0; x < Row; x++)

{

for (int y = 0; y < Column; y++)

{

byte value = (byte) Math.Sqrt(Math.Pow(resultX[x, y], 2) + Math.Pow(resultY[x, y], 2));

result[ i] = value;

result[ i + 1] = value;

result[ i + 2] = value;

result[ i + 3] = 0;

Angle[x, y] = Math.Atan(resultY[x, y] / resultX[x, y]);

i += BitDepth;

}

}

return result;any Idea of what I am doing wrong?

I checked my convolution method and it is work just like how this guy says it should:

[/i]
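A sketch of the combination step with three changes, each of which could plausibly explain a black or broken result; it is hedged because the question does not show how `result` is allocated, what type `resultX`/`resultY` are, or how the image is displayed. First, the fourth byte is set to 255: if the buffer is 32bpp ARGB, an alpha of 0 makes every pixel fully transparent, which, depending on how the image is shown or saved, can appear as a solid background colour such as black. Second, the magnitude is clamped before the cast, since it can exceed 255 and the C# specification leaves an out-of-range unchecked double-to-byte conversion unspecified. Third, `Math.Atan2` replaces `Math.Atan(resultY / resultX)`, which, if the arrays are `int[,]`, does integer division and throws `DivideByZeroException` wherever `resultX` is 0. The sketch also steps a fixed 4 bytes per pixel; if `BitDepth` holds bits per pixel (e.g. 32) rather than bytes, `i` would advance too far. All names here are hypothetical.

```csharp
using System;

static class CombineSketch
{
    // Turns signed gradients gx/gy (indexed [row, col]) into a tightly packed
    // 32bpp buffer (assumed B, G, R, A byte order) and fills angle[row, col]
    // with the gradient direction in radians. angle must match gx in size.
    public static byte[] Combine(int[,] gx, int[,] gy, double[,] angle)
    {
        const int bytesPerPixel = 4; // step between pixels in bytes, not bits
        int rows = gx.GetLength(0), cols = gx.GetLength(1);
        var result = new byte[rows * cols * bytesPerPixel];
        int i = 0;
        for (int r = 0; r < rows; r++)
        {
            for (int c = 0; c < cols; c++)
            {
                double magnitude = Math.Sqrt((double)gx[r, c] * gx[r, c] + (double)gy[r, c] * gy[r, c]);
                // Gx alone can reach 1020 for 8-bit input, so clamp to 255 before casting.
                byte value = (byte)Math.Min(255.0, magnitude);
                result[i] = value;      // B
                result[i + 1] = value;  // G
                result[i + 2] = value;  // R
                result[i + 3] = 255;    // A: fully opaque
                // Atan2 takes y and x separately, so there is no division and gx == 0 is fine.
                angle[r, c] = Math.Atan2(gy[r, c], gx[r, c]);
                i += bytesPerPixel;
            }
        }
        return result;
    }
}
```

Clamping is the simplest choice; scaling every magnitude by 255 divided by the largest magnitude in the image is a common alternative that keeps weak edges visible.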
